EMERGING TECH

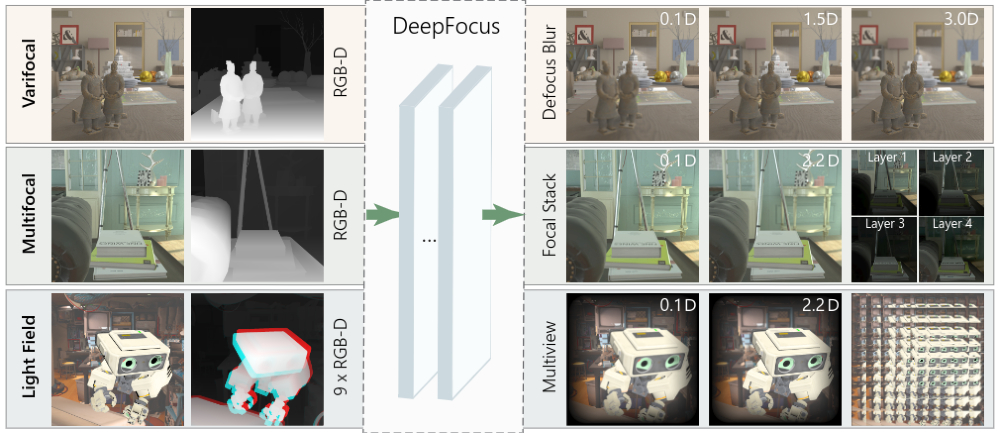

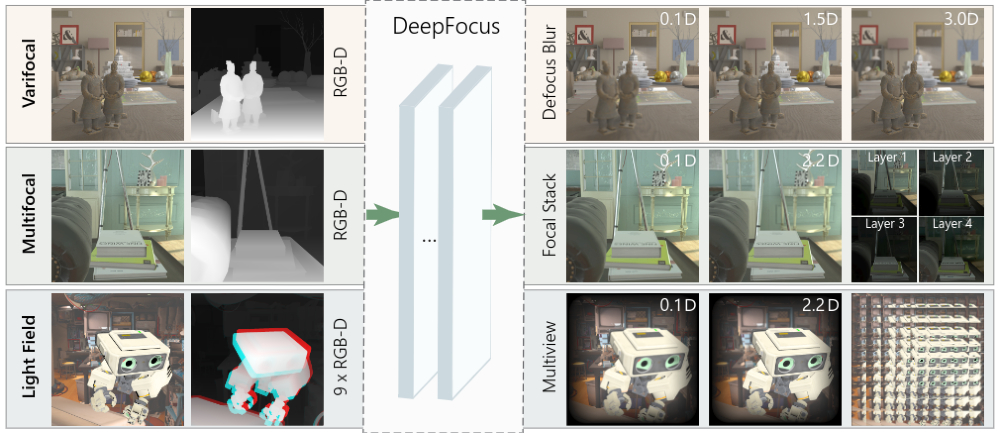

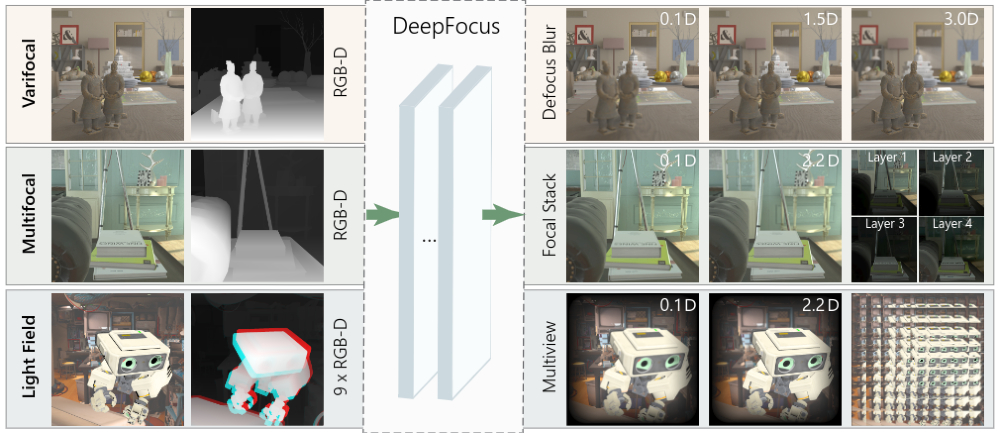

Facebook Inc.’s Oculus virtual reality team today open-sourced the code behind its DeepFocus software that’s used to create more realistic visuals for VR headsets.

The DeepFocus software first came to public attention in May, when Oculus showed off a new prototype VR headset called Half Dome. The prototype headset featured a “varifocal” display that moves the screens inside the device in order to mimic how the user’s eyes switch focus.

DeepFocus is meant to address a problem with VR headsets called the “vergence-accommodation conflict” (VAC), which is caused when a display is too close to the viewer’s eyes. The conflict is between where the eyes are pointed (vergence) and where the lenses of the eyeballs are focused (accommodation). It can limit how long some people can wear a VR headset without discomfort.
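To put rough numbers on that mismatch, here is a minimal sketch in Python. It is illustrative geometry only, not anything from Oculus: the 63 mm interpupillary distance and the 2 m display focal distance are assumed values, and both function names are hypothetical.

```python
import math

def vergence_angle_deg(distance_m, ipd_m=0.063):
    """Angle between the two eyes' lines of sight when fixating a point
    straight ahead at distance_m. ipd_m (eye separation) is an assumed value."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))

def va_conflict_diopters(virtual_distance_m, display_focal_distance_m):
    """Gap between the distance the eyes converge on (the virtual object)
    and the distance they must focus at (the display optics), in diopters (1/m)."""
    return abs(1.0 / virtual_distance_m - 1.0 / display_focal_distance_m)

# A virtual object 0.5 m away, shown on a headset assumed to focus at 2 m.
print(vergence_angle_deg(0.5))         # roughly 7.2 degrees of convergence
print(va_conflict_diopters(0.5, 2.0))  # 1.5 diopters of mismatch
```

Under these assumptions the eyes converge as if the object were half a meter away while still having to focus at two meters. A varifocal display aims to close that gap by shifting the focal distance to match.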

“Our eyes are like tiny cameras: When they focus on a given object, the parts of the scene that are at a different depth look blurry,” Marina Zannoli, a vision scientist at Facebook Reality Labs who worked on the DeepFocus project, wrote in a blog post by the Oculus team. “Those blurry regions help our visual system make sense of the three-dimensional structure of the world, and help us decide where to focus our eyes next. While varifocal VR headsets can deliver a crisp image anywhere the viewer looks, DeepFocus allows us to render the rest of the scene just the way it looks in the real world: naturally blurry.”

DeepFocus helps to remedy the VAC issue by using artificial intelligence software to create what’s called a “defocus effect,” which makes one part of an image appear sharper than the rest. This, Oculus’s researchers said, helps reduce unnecessary eye strain and visual fatigue and is vital for anyone hoping to immerse themselves in VR all day long.

Oculus said in a technical paper that DeepFocus is the first software that can generate a defocus effect in real time, creating realistic blurring of every part of the image the user isn’t currently focused on, similar to how the human eye actually works. The idea is to “faithfully reproduce the way light falls on the human retina,” Oculus researchers said.

Creating the defocus effect in real time involved pairing deep learning tools with color and depth data provided by VR game engines. The research team created 196,000 random images of objects, using them to train the system to render blur properly depending on which part of the image the headset wearer was focused on.
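For a sense of what rendering blur from color and depth data involves, here is a minimal, non-learned sketch in Python. It is not the DeepFocus network. It swaps in a simple thin-lens approximation and a variable-width box filter over a single row of pixels. The 4 mm pupil size and the 600 pixels-per-radian display resolution are assumed values, and the function names are hypothetical.

```python
def blur_radius_px(depth_m, focus_m, pupil_m=0.004, px_per_radian=600.0):
    """Approximate blur-circle radius in pixels for a point at depth_m
    when the eye is focused at focus_m (small-angle thin-lens estimate).
    pupil_m and px_per_radian are assumed, illustrative values."""
    defocus_diopters = abs(1.0 / depth_m - 1.0 / focus_m)
    angular_diameter = pupil_m * defocus_diopters  # radians
    return 0.5 * angular_diameter * px_per_radian

def defocus_scanline(color, depth_m, focus_m):
    """Blur each pixel of a 1-D row by averaging its neighbors within a
    radius that grows with that pixel's distance from the focal plane."""
    n = len(color)
    out = []
    for i in range(n):
        r = int(round(blur_radius_px(depth_m[i], focus_m)))
        lo, hi = max(0, i - r), min(n - 1, i + r)
        window = color[lo:hi + 1]
        out.append(sum(window) / len(window))
    return out

# Alternating bright/dark pixels: the left half is near (0.5 m), the right half far (4 m).
color = [1.0, 0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0]
depth = [0.5, 0.5, 0.5, 0.5, 4.0, 4.0, 4.0, 4.0]
blurred = defocus_scanline(color, depth, focus_m=0.5)
print(blurred)  # near pixels stay sharp, far pixels are averaged with neighbors
```

A filter this simple lets colors bleed across the edge between near and far objects, and doing better at those edges is one reason to train a network on ground-truth examples instead.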

“With deep learning, our system was able to grasp complex effects and relations, such as foreground and background defocusing, as well as correct blur at occlusion boundaries,” said Oculus research scientist Anton Kaplanyan. “And by generating a rich database of ground truth examples, we were able to cover a much wider range of defocus effects and set a new bar for depth-of-field synthesis.”

The Oculus team said the decision to open-source DeepFocus was made so the wider community of VR system developers, vision scientists and perceptual researchers can benefit from its knowledge. The obvious benefit is that developers will be able to build better apps for VR systems, but it’s not clear when Oculus is planning to release its Half Dome headset. All Oculus would say is that the DeepFocus software should be useful for next-generation VR headset designs.
