AI

For all the promise and peril of artificial intelligence, there’s one big obstacle to its seemingly relentless march: The algorithms for running AI applications have been so big and complex that they’ve required processing on powerful machines in the cloud and data centers, making a wide swath of applications less useful on smartphones and other “edge” devices.

Now, that concern is quickly melting away, thanks to a series of breakthroughs in recent months in software, hardware and energy technologies that are rapidly coming to market.

That’s likely to drive AI-driven products and services even further away from a dependence on powerful cloud-computing services and enable them to move into every part of our lives — even inside our bodies. In turn, that could finally usher in what the consulting firm Deloitte late last year called “pervasive intelligence,” shaking up industries in coming years as AI services become ubiquitous.

By 2022, 80% of smartphones shipped will have AI capabilities on the device itself, up from 10% in 2017, according to market researcher Gartner Inc. And by 2023, that will add up to some 1.2 billion shipments of devices with AI computing capabilities built in, up from 79 million in 2017, according to ABI Research.

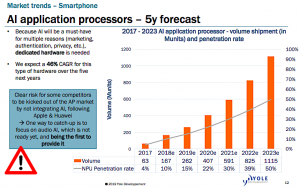

A lot of startups and their backers smell a big opportunity. According to Jeff Bier, founder of the Embedded Vision Alliance, which held a conference this past week in Silicon Valley, investors have plowed some $1.5 billion into new AI chip startups in the past three years — more than was invested in all chip startups in the previous three years. Market researcher Yole Développement forecasts that AI application processors will enjoy a 46% compound annual growth rate through 2023, when nearly all smartphones will have them, from fewer than 20% today.

And it’s not just startups, as a series of announcements at the Computex computer conference in Taipei will reveal this week. Just today, Intel Corp. previewed its coming Ice Lake chips, which among other things have “Deep Learning Boost” software and other new AI instructions on a graphics processing unit. Early Monday, Arm Ltd. also unveiled a series of processors aimed at AI applications, including for smartphones and other edge devices. A few hours later, Nvidia Corp. announced its first platform for AI devices.

“Within the next two years, virtually every processor vendor will be offering some kind of competitive platform for AI,” Tom Hackett, principal analyst at IHS Markit, said at the alliance’s Embedded Vision Summit. “We are now seeing a next-generation opportunity.”

Those chips are finding their way into many more devices beyond smartphones. They’re also being used in millions of “internet of things” machines such as robots, drones, cars, cameras and wearables. Among the 75 or so companies developing machine learning chips, for instance, is Israel’s Hailo, which raised a $21 million funding round in January. In mid-May it released a processor that’s tuned for deep learning, a branch of machine learning responsible for recent breakthroughs in voice and image recognition.

More compact and capable software that could pave the way for more AI at the edge is coming, judging from new research indicating the size of neural networks could be reduced tenfold and still achieve similar results. Already, some companies are managing to compress the size of software needed for AI.

Google LLC, for instance, debuted its TensorFlow Lite machine learning library for mobile devices in late 2017, enabling the potential for smart cameras that can identify wildlife or imaging equipment that can make medical diagnoses even where there’s no internet connection. Some 2 billion mobile devices now have TensorFlow Lite deployed on them, Google staff research engineer Pete Warden said at a keynote presentation at the Embedded Vision Summit.

And in March, Google rolled out an on-device speech recognizer to power speech input in Gboard, Google’s virtual keyboard app. The automatic speech recognition transcription algorithm is now down to 80 megabytes so it can run on the Arm Ltd. A-series chip inside a typical Pixel phone, and that means it works offline so there’s no network latency or spottiness.

Not least, rapidly rising privacy concerns about data traversing the cloud mean there’s also a regulatory reason to avoid moving data off the gadgets.

“Virtually all the machine learning processing will be done on the device,” said Bier, who’s also co-founder and president of Berkeley Design Technology Inc., which provides analysis and engineering services for embedded digital signal processing technology. And there will be a whole lot of that hardware: Warden cited an estimate of 250 billion active embedded devices in the world today, and that number is growing 20% a year.

Google’s Pete Warden (Photo: Robert Hof/SiliconANGLE)

But doing AI off the cloud is no easy task. The challenge is not just the size of the machine learning algorithms but also the power it takes to execute them, especially since smartphones, and even more so IoT machines such as cameras and various sensors, can’t depend on power from a wall socket or even batteries. “The devices will not scale if we become bound to changing or recharging batteries,” said Warden.

The radio connections needed to send data to and from the cloud also are energy hogs, so communicating via cellular or other connections is a deal breaker for many small, cheap gadgets. The result, said Yohann Tschudi, technology and market analyst at Yole Développement: “We need a dedicated architecture for what we want to do.”

There’s also a need to develop devices that realistically must draw less than a milliwatt, and that’s about a thousandth of what a smartphone uses. The good news is that an increasing array of sensors and even microprocessors promises to do just that.

The U.S. Department of Energy, for instance, has helped develop low-cost wireless peel-and-stick sensors for building energy management in partnership with Molex Inc. and building automation firm SkyCentrics Inc. And experimental new image sensors can power themselves with ambient light.

And even microprocessors, the workhorses for computing, can be very low-power, as with those from startups such as Ambiq Micro, Eta Compute, Syntiant Corp., Applied Brain Research, Silicon Laboratories Inc. and GreenWaves Technologies. “There’s no theoretical reason we can’t compute in microwatts,” a unit a thousand times smaller than a milliwatt, Warden said. That’s partly because they can be programmed, for instance, to wake up a radio to talk to the cloud only when something actionable happens, like liquid spilling on a floor.
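To make the wake-on-event idea concrete, here is a rough back-of-the-envelope sketch in Python. It is not drawn from any vendor's data: the power figures, the transmit time and the `PowerBudget`, `average_power_uw` and `messages_sent` names are all hypothetical, chosen only to show how rarely waking the radio keeps the average draw well under a milliwatt (1,000 microwatts).

```python
from dataclasses import dataclass


@dataclass
class PowerBudget:
    # All values are illustrative assumptions, not measurements.
    compute_uw: float = 50.0      # always-on sensing and inference, in microwatts
    radio_uw: float = 30_000.0    # radio draw while transmitting, in microwatts
    tx_seconds: float = 0.5       # time on air per message


def average_power_uw(budget: PowerBudget, messages_per_hour: float) -> float:
    """Average draw in microwatts when the radio is on only while sending."""
    radio_duty = messages_per_hour * budget.tx_seconds / 3600.0
    return budget.compute_uw + budget.radio_uw * radio_duty


def messages_sent(scores, threshold=0.9):
    """Count readings the on-device model flags as actionable (score >= threshold)."""
    return sum(1 for score in scores if score >= threshold)


budget = PowerBudget()
# One hour of hypothetical detector scores: a single spill-like event.
scores = [0.02, 0.10, 0.95, 0.05]

event_driven = average_power_uw(budget, messages_sent(scores))
always_reporting = average_power_uw(budget, 3600)  # one message every second

print(f"event-driven:     {event_driven:.1f} microwatts")
print(f"always reporting: {always_reporting:.1f} microwatts")
```

Under these assumed numbers, the device that reports only the one actionable event stays within a few microwatts of its compute-only baseline, while the device that reports every second spends most of its budget on the radio.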

Embedded Vision Summit (Photo: Robert Hof/SiliconANGLE)

All this suggests a vast new array of applications of machine learning on everything from smartphones to smart cameras and factory monitoring sensors. Indeed, said Warden, “We’re getting so many product requests to run machine learning on embedded devices.”

Among those applications:

Warden even anticipates that sensors could talk to each other, such as in a smart home where the smoke alarm detects a potential fire and the toaster replies that no, it’s just burned toast. That’s speculative for now, but Google’s already working on “federated learning” to train machine learning models without using centralized training data.
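For readers curious what training without centralized data can look like, the following is a toy sketch of federated averaging, one common form of federated learning. It is a simplified illustration and not Google's implementation: the one-parameter model, the sample data and the `local_update` and `federated_round` names are assumptions made for the example. Each simulated device fits the model on its own data, and only the resulting weight, never the raw data, is sent back to be averaged.

```python
def local_update(w, data, lr=0.1, steps=5):
    """Run a few gradient steps for y ~ w * x on one device's private data."""
    for _ in range(steps):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(w_global, clients):
    """Average the locally trained weights, weighted by each client's sample count."""
    total = sum(len(data) for data in clients)
    return sum(local_update(w_global, data) * len(data) for data in clients) / total


# Hypothetical private datasets on two devices; both follow y = 2 * x.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(0.5, 1.0), (1.5, 3.0), (1.0, 2.0)],
]

w = 0.0
for _ in range(20):
    w = federated_round(w, clients)

print(f"learned weight: {w:.4f}")
```

The server in this sketch sees only one number per device per round, which is the property that makes the approach attractive when data should stay on the gadget.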

None of this means the cloud won’t continue to have a huge role in machine learning. All those examples involve running the models on the machines themselves, a process known as inference. The training of the models, on the other hand, still involves processing massive amounts of data on powerful clusters of computers, and companies such as Google, Amazon.com Inc. and Arm have all been offering AI chips, some via their cloud computing services, since at least last year.

But it’s now apparent that the next wave of AI services will come less from the cloud than from the edge.
