AI

IBM Corp. wants to make artificial intelligence models more reliable by giving them the ability to express when they’re not sure of something.

The problem IBM is trying to tackle is that AI systems are sometimes overconfident, which means they can present unreliable predictions with the same apparent certainty as reliable ones.

In a blog post today, IBM researchers Prasanna Sattigeri and Q. Vera Liao say this overconfidence can be dangerous. For example, if a self-driving car misidentifies the side of an articulated bus as a brightly lit sky and fails to brake or warn the driver, the consequences may well be devastating. Such accidents have happened before, and some have been fatal.

To try to prevent this from happening again, IBM has created the Uncertainty Quantification 360 toolkit and made it available to the open source community.

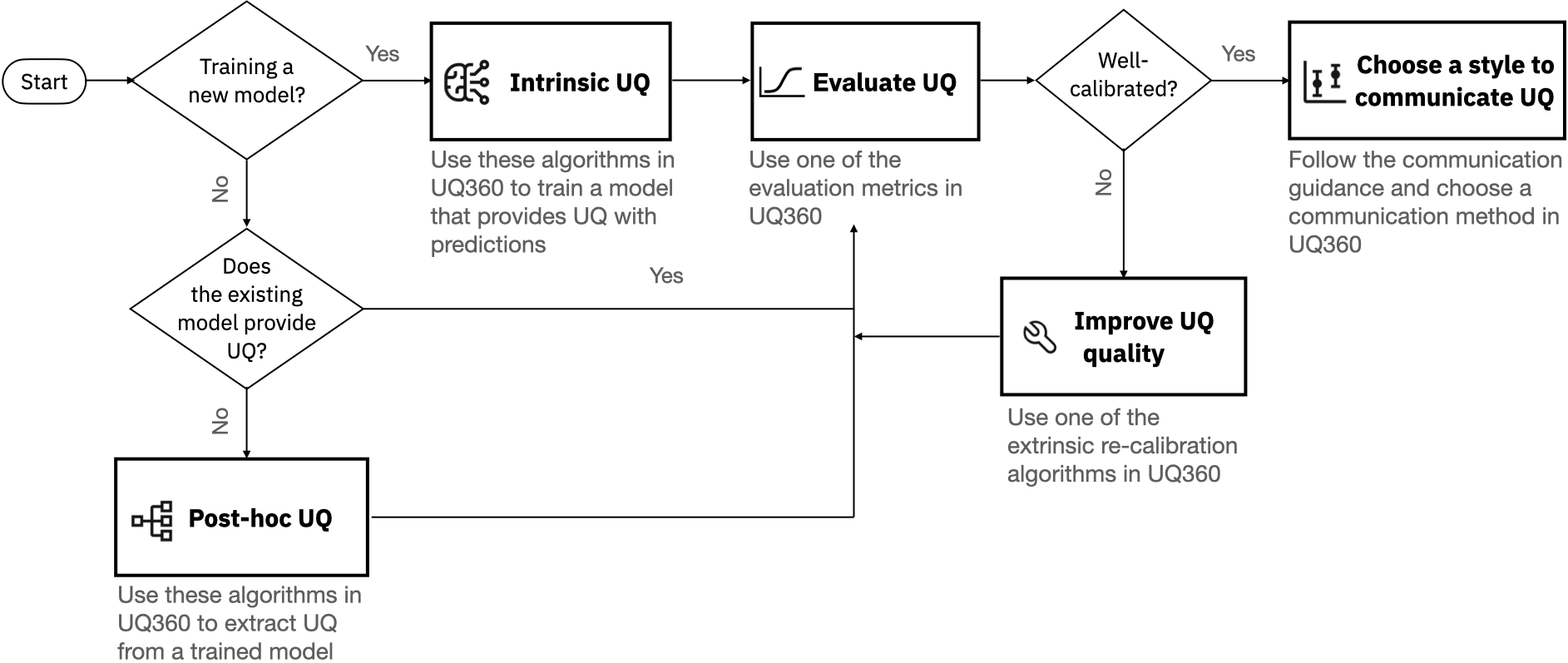

Announced at the IBM Digital Developer Conference today, the UQ 360 toolkit is designed to make AI models safer by giving them the “intellectual humility” to signal when they’re unsure of something. It’s a collection of algorithms that can be used to quantify an AI model’s uncertainty. It also provides capabilities to measure and improve the quality of that quantification to streamline development processes, as well as a taxonomy and guidance to help developers choose which capabilities are appropriate for specific models.

Sattigeri and Liao said UQ 360 can be used to improve hundreds of different kinds of AI models where safety is a paramount concern. A good example is AI that’s used to diagnose medical issues such as sepsis.

“Early detection of sepsis is important and AI can help, but only when predictions are accompanied by meaningful uncertainty estimates,” Sattigeri and Liao explained. “Only then can doctors immediately treat patients AI has confidently flagged as at-risk and prescribe additional diagnostics for those AI has expressed a low level of certainty about. If the model produces unreliable uncertainty estimates, patients may die.”

Knowing the margin of error might also be useful for real estate agents who use AI-based house price prediction models, or for models that try to predict the impact of new product features, the researchers said.
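To make the idea of a margin of error concrete, here is a minimal sketch of one common way to attach an interval to a price prediction: take the model's absolute errors on held-out sales and use a high-ranked error as the margin. This is not the UQ 360 API. The function name `residual_interval` and the sample numbers are hypothetical, and the ranking rule borrows from split conformal prediction as an assumption.

```python
import math


def residual_interval(point_prediction, holdout_errors, coverage=0.9):
    """Return (low, high) around a prediction using held-out absolute errors.

    holdout_errors: values of |actual - predicted| measured on data the
    model did not see during training (hypothetical input for this sketch).
    """
    if not holdout_errors:
        raise ValueError("need at least one held-out error")
    if not 0.0 < coverage < 1.0:
        raise ValueError("coverage must be strictly between 0 and 1")

    ranked = sorted(holdout_errors)
    n = len(ranked)
    # Conformal-style rank: ceil((n + 1) * coverage).
    # Capping at n is a simplification; with too few held-out points the
    # requested coverage cannot actually be supported.
    k = min(math.ceil((n + 1) * coverage), n)
    margin = ranked[k - 1]
    return point_prediction - margin, point_prediction + margin


# Hypothetical example: errors and price in thousands of dollars.
errors = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
low, high = residual_interval(500, errors, coverage=0.8)
```

An agent would then quote the range between `low` and `high` rather than a single figure. The interval is only as trustworthy as the held-out errors are representative of the houses being priced.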

Uncertainty estimates can also improve human-AI collaboration, the researchers explained. For example, if a nurse is using an AI system to help diagnose skin disease and it expresses high confidence in its diagnosis, the nurse can accept the decision.

But if the confidence is low, that patient can be referred to a dermatologist instead. Drug design will benefit too, as it means research teams can focus their efforts on possibilities where the AI model has shown greater certainty that the desired outcome can be reached.
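The accept-or-refer pattern in the skin disease example can be sketched as a simple confidence threshold. This is an illustration only: the `Prediction` class, the `triage` function and the 0.9 threshold are hypothetical, and a real deployment would pick the threshold from validated data.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    label: str         # the model's proposed diagnosis
    confidence: float  # the model's estimated probability, in [0, 1]


def triage(prediction, threshold=0.9):
    """Return "accept" when confidence meets the threshold, else "refer"."""
    if not 0.0 <= prediction.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return "accept" if prediction.confidence >= threshold else "refer"


# Hypothetical cases: one confident prediction, one uncertain prediction.
confident_case = triage(Prediction("eczema", 0.97))
uncertain_case = triage(Prediction("melanoma", 0.55))
```

The rule is only useful if the confidence values are themselves reliable, which is why the researchers stress evaluating the quality of uncertainty estimates before deployment.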

IBM’s UQ 360 toolkit provides an assortment of algorithms because different kinds of AI models need different methods to produce high-quality uncertainty quantification. How reliable each UQ method is depends on factors such as the underlying model, the task it is designed to perform (regression or classification, for example), the characteristics of the data and the user’s goal, Sattigeri and Liao said.

“Sometimes a chosen UQ method may not produce high-quality uncertainty estimates and could mislead users,” they wrote. “Therefore, it is crucial for model developers to always evaluate the quality of UQ, and improve the quantification quality if necessary, before deploying an AI system.”
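One widely used check on the quality of a classifier's uncertainty estimates is expected calibration error: group predictions by stated confidence and compare each group's average confidence with how often it was actually right. The sketch below is a plain-Python illustration of that metric, not code from the UQ360 package, and the function name and sample data are assumptions.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average gap between stated confidence and observed accuracy.

    confidences: predicted probabilities for the chosen class, in [0, 1]
    correct: booleans, True where the prediction matched the true label
    """
    if len(confidences) != len(correct):
        raise ValueError("inputs must have the same length")
    total = len(confidences)
    if total == 0:
        raise ValueError("need at least one prediction")

    # Assign each prediction to an equal-width confidence bin.
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        index = min(int(conf * n_bins), n_bins - 1)
        bins[index].append((conf, ok))

    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(conf for conf, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        # Weight each bin's gap by the share of predictions it holds.
        ece += (len(members) / total) * abs(avg_conf - accuracy)
    return ece


# Hypothetical data: ten predictions, each made with 90% confidence.
well_calibrated = expected_calibration_error([0.9] * 10, [True] * 9 + [False])
overconfident = expected_calibration_error([0.9] * 10, [True] * 5 + [False] * 5)
```

A value near zero means stated confidence tracks real accuracy. In the second case the model claims 90% confidence but is right only half the time, so the score is roughly 0.4, the kind of gap a developer would want to close before deployment.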

IBM’s UQ 360 toolkit provides tools for quantification, measurement and improvement. It also provides a range of tools for communicating the uncertainty it quantifies.

“For every UQ algorithm provided in the UQ360 Python package, a user can make a choice of an appropriate style of communication by following our psychology-based guidance on communicating UQ estimates, from concise descriptions to detailed visualizations,” the researchers explained.

IBM said its UQ 360 toolkit is available to download now, and it’s asking the community to contribute to its development going forward to ensure that AI practitioners can understand and communicate the limitations of their algorithms.

“Uncertainty around the correctness of AI automation is pretty much innate to the AI system,” said Constellation Research Inc. analyst Holger Mueller. “To figure out confidence, more AI is needed that can either establish a confidence level, or the other way around, quantify the uncertainty of an AI Model.”
