NEWS

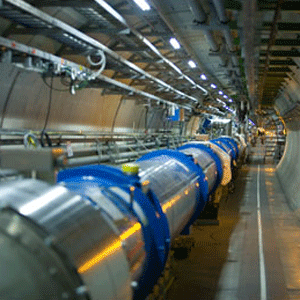

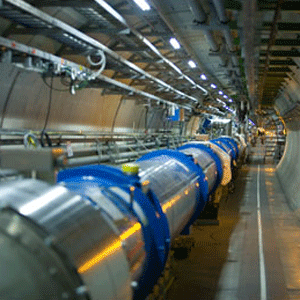

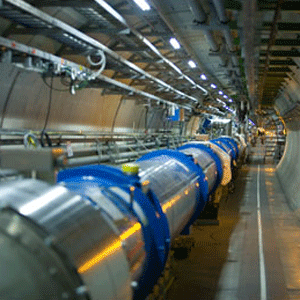

We have seen Big Data used in several large-scale computing applications, and it will now serve the computing needs of the Large Hadron Collider (LHC). The European Organisation for Nuclear Research (CERN) faces a tough challenge in confirming the discovery of a particle consistent with the Higgs boson. To complete this discovery, CERN has to keep the LHC running for several more months, which creates huge computing needs. The experiments must collect enough collision event data to see the same outcome enough times to show, statistically, that what they have found is real.

The LHC currently runs at 4 TeV per beam, so two beams colliding head-on give a total collision energy of 8 TeV. The discovery of the Higgs boson also matters to the IT sector, because it opens up a large amount of data-intensive computing work. Going forward, confirming and studying the new particle will require intensive analysis and therefore even more data.

When asked about the current data processing requirements for the LHC experiments, CERN reported,

“When the LHC is working, there are about 600 million collisions per second. But we only record here about one in 10¹³ (ten trillion). If you were to digitize all the information from a collision in a detector, it’s about a petabyte a second or a million gigabytes per second.

There is a lot of filtering of the data that occurs within the 25 nanoseconds between each bunch crossing (of protons). Each experiment operates their own trigger farm – each consisting of several thousand machines – that conduct real-time electronics within the LHC.

Out of all of this comes a data stream of some few hundred megabytes to 1 GB per second that actually gets recorded in the CERN data centre, the facility we call ‘Tier Zero’.”
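To put the quoted figures in perspective, here is a small back-of-the-envelope calculation. It uses only numbers from CERN's quote (25 ns between bunch crossings, roughly a petabyte per second of raw detector data, and up to about 1 GB per second recorded). The function names are hypothetical, and the daily volume assumes, purely for illustration, that the recorded stream runs continuously at 1 GB/s, which real data taking does not.

```python
# Back-of-the-envelope arithmetic using the approximate figures quoted by CERN.
# Results are orders of magnitude only, not official LHC numbers.

BUNCH_SPACING_S = 25e-9          # 25 nanoseconds between bunch crossings
RAW_RATE_BYTES_PER_S = 1e15      # "about a petabyte a second"
RECORDED_RATE_BYTES_PER_S = 1e9  # upper end of "a few hundred megabytes to 1 GB per second"
SECONDS_PER_DAY = 86_400


def crossing_rate_hz(spacing_s: float) -> float:
    """Bunch crossings per second implied by the spacing between crossings."""
    return 1.0 / spacing_s


def reduction_factor(raw_rate: float, recorded_rate: float) -> float:
    """How many times smaller the recorded stream is than the raw stream."""
    return raw_rate / recorded_rate


def daily_volume_bytes(recorded_rate: float) -> float:
    """Data written in one day, ASSUMING the recorded rate is sustained all day."""
    return recorded_rate * SECONDS_PER_DAY


if __name__ == "__main__":
    print(f"Bunch crossing rate: {crossing_rate_hz(BUNCH_SPACING_S):.0e} Hz")
    print(f"Data reduction factor: "
          f"{reduction_factor(RAW_RATE_BYTES_PER_S, RECORDED_RATE_BYTES_PER_S):.0e}")
    print(f"Daily volume at 1 GB/s: {daily_volume_bytes(RECORDED_RATE_BYTES_PER_S):.1e} bytes")
```

Run as a script, it prints a crossing rate of 4e+07 Hz (40 MHz), a reduction factor of 1e+06, and a daily volume of 8.6e+13 bytes. In other words, the trigger farms discard roughly a million bytes for every byte that reaches Tier Zero, and even the filtered stream would amount to tens of terabytes per day if sustained.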

Big Data technologies make processing at this scale feasible and allow results to be produced quickly.

Big Data is also in the news for several other good reasons, especially in astronomy. At Hadoop Summit 2012, NASA scientist Chris Mattmann discussed scientific use cases for Big Data, some of the massive computing challenges it can help solve, and how these relate to the world outside NASA.

Hadoop, which provides an open-source implementation of frameworks for reliable, scalable, distributed computing and data storage, also gives businesses, banks in particular, solutions to problems once considered intractable. These range from detecting fraud in banking to mapping the spread of disease, understanding traffic systems and optimizing the energy grid. Hadoop's distributed computing capabilities have turned such problems into solvable ones.