CLOUD

Google Inc. is touting the beta test of a new cloud service that lets customers rent Nvidia Corp. graphics chips running in its data centers to do machine learning and other computing-heavy workloads.

The program, launched Tuesday, allows Google cloud customers in three regions, one each in the U.S., Asia and Europe, to spin up graphics processing unit-based instances, or computing jobs, on the Google Compute Engine.

Google Product Manager John Barrus said in a blog post that the company has been working on delivering the Nvidia GPUs since last November, when it revealed plans to incorporate them into its cloud. GPUs are widely used to run deep learning neural networks, which borrow basic concepts of how the human brain works to improve speech and image recognition and related tasks.

Users need to request access to the GPUs first, the company said. “If your project has an established billing history, it will receive [GPU] quota automatically after you submit the request,” Barrus said.

Later, Google will add the option to fire up AMD FirePro S9300 x2 GPUs in its cloud for remote desktop workloads. Google reckons companies will see the benefit of running GPU-intensive tasks on its Nvidia-powered cloud instances, instead of forking out bundles of cash to build their own computing clusters on their premises.

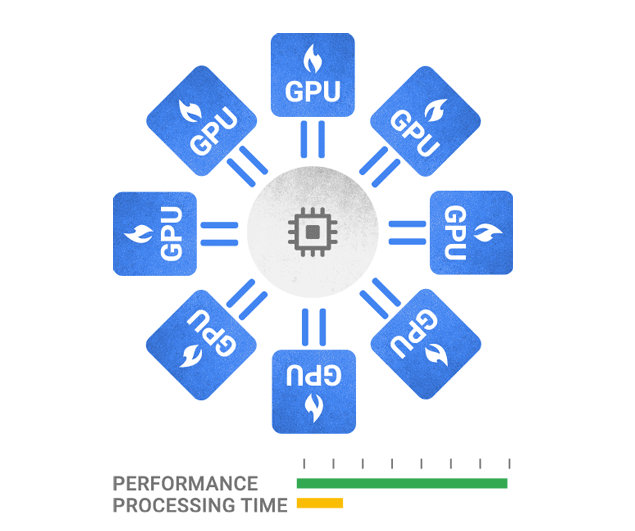

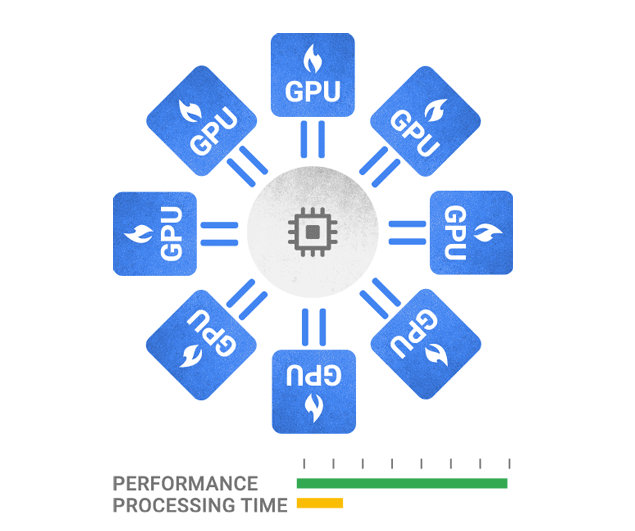

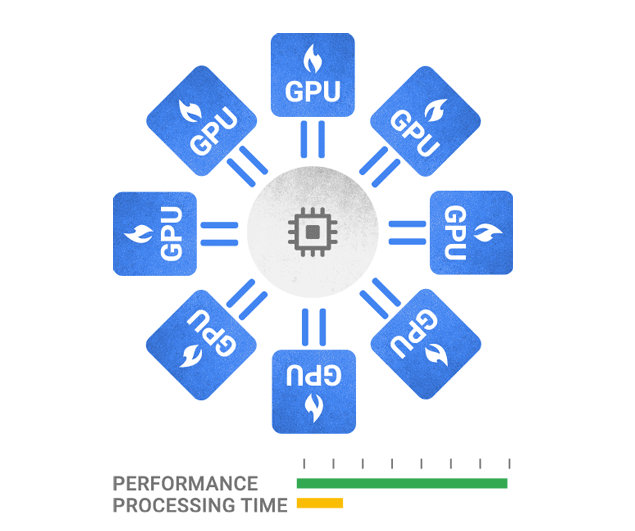

“If you need extra computational power for deep learning, you can attach up to eight GPUs (four K80 boards) to any custom Google Compute Engine virtual machine,” Barrus explained. He provided a list of example workloads suitable for GPU-based instances, including video and image transcoding, seismic analysis, molecular modeling, genomics, computational finance, simulations, high-performance data analysis, computational chemistry, fluid dynamics and visualization.

Google is also offering GPU-equipped virtual machines for customers running machine learning workloads on its TensorFlow deep learning service. The company said the GPUs should dramatically increase both the speed and the efficiency of code running on its cloud-based machine learning service.

Google is pricing its GPU processing services at 70 cents per GPU per hour for U.S. customers, and 77 cents per GPU per hour for Asia- and Europe-based customers.
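To put those rates in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the per-GPU hourly prices and the eight-GPU-per-VM limit quoted above; the function name `gpu_cost` and the region keys are illustrative, not part of any Google API, and the result covers the GPU charge alone, not whatever the underlying virtual machine costs.

```python
# Per-GPU hourly rates quoted in the article, in U.S. dollars.
# The region keys are illustrative labels, not official region names.
RATE_PER_GPU_HOUR = {"us": 0.70, "asia": 0.77, "europe": 0.77}

# "Up to eight GPUs (four K80 boards)" can be attached to one VM.
MAX_GPUS_PER_VM = 8


def gpu_cost(region: str, gpus: int, hours: float) -> float:
    """Estimate the GPU-only charge, in dollars, for a single VM."""
    if region not in RATE_PER_GPU_HOUR:
        raise ValueError(f"unknown region: {region!r}")
    if not 1 <= gpus <= MAX_GPUS_PER_VM:
        raise ValueError(f"gpus must be between 1 and {MAX_GPUS_PER_VM}")
    if hours < 0:
        raise ValueError("hours must be non-negative")
    return round(RATE_PER_GPU_HOUR[region] * gpus * hours, 2)


if __name__ == "__main__":
    # A fully loaded VM (eight GPUs) running for one day in each region.
    for region in RATE_PER_GPU_HOUR:
        print(f"{region}: ${gpu_cost(region, MAX_GPUS_PER_VM, 24):.2f}")
```

On these numbers, a maxed-out eight-GPU machine running around the clock would rack up about $134 a day in GPU charges in the U.S. and about $148 a day in Asia or Europe.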
