EMERGING TECH

IBM Corp. and Apple Inc. are expanding their existing four-year-old pact to bring business applications to iOS devices.

The crux of the announcement Tuesday at IBM’s Think conference in Las Vegas is that the companies are integrating their respective artificial intelligence and machine learning technologies in order to make iOS enterprise applications smarter. They’ve also built a new console for developers using Apple’s Swift programming language on IBM Cloud that they claim makes coding applications easier.

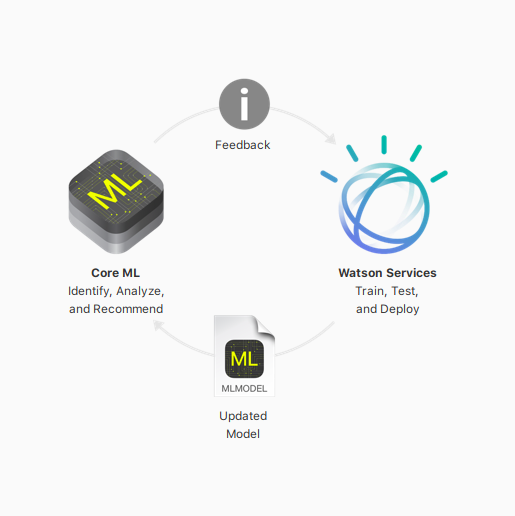

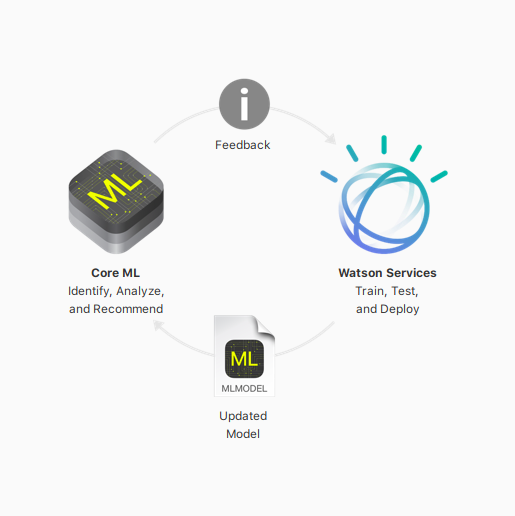

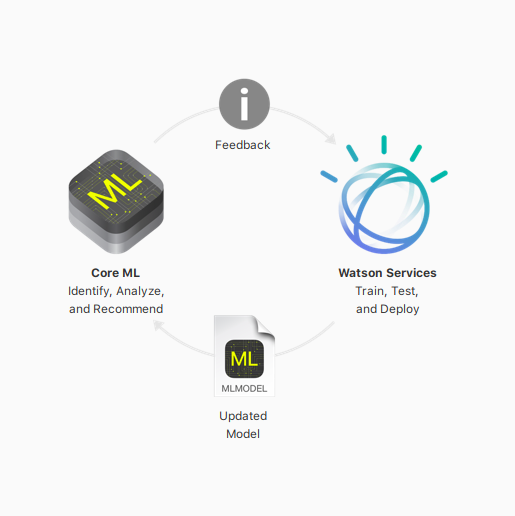

To foster more AI in enterprise apps, IBM’s Watson AI system is being integrated with Apple’s Core ML machine learning framework. The new product, called IBM Watson Services for Core ML, is designed to help developers build apps that can learn from user activity in order to become smarter the more they’re used.

The first available service is the Watson Visual Recognition Service, a tool trained on thousands of images to recognize what they depict. Its model can be exported to Core ML to run on iOS devices, allowing developers to build applications with image recognition capabilities more easily.

“There’s an increased demand on enterprise developers to build smarter apps that put critical information at the fingertips of employees,” said Mahmoud Naghshineh, general manager of the Apple partnership at IBM. “In order to deliver on this demand, developers need resources and tools to simplify and speed their work like never before.”

With that in mind, the new developer console offers step-by-step instructions for developers on how to build enterprise apps for iOS. It also integrates with AI, data and mobile services that have been optimized for Swift.

“Watson Studio provides a complete suite of model development tools that are available in the new IBM Cloud Developer Console for Apple,” said Jim McGregor, principal analyst at TIRIAS Research, in an opinion piece in Forbes. “IBM will also provide access to Watson cloud resources and data resources for developing models. The only thing a developer needs to bring to the effort is an idea.”

Not everyone shared McGregor’s enthusiasm, however. Holger Mueller, principal analyst and vice president of Constellation Research Inc., said that the announcement was “overdue” given the length of the partnership between the two companies, wondering aloud why it took them so long to add these capabilities. Nonetheless, he said it was a positive development with regard to Swift.

“Both need more capabilities in Swift to make it more attractive as language and platform for next generation applications,” Mueller said. “Additionally, Apple wants to partner with more machine learning vendors, so Watson is a key step, especially in the enterprise.”

IBM said Coca-Cola Co. has been trying out the new machine learning capabilities for some time, testing new applications for visual recognition problem identification, cognitive diagnosis and augmented repair.

The expanded partnership is clearly beneficial for both companies. For IBM, it means new markets for its Watson service, while Apple gets help making its devices more appealing to large enterprises. That’s important for Apple as it faces up to shrinking demand for smartphones and tablets in the consumer market.
