NEWS

Five years after its Watson supercomputer beat the champions of Jeopardy, IBM aims to help the poster boy of artificial intelligence become a little more human.

This year, IBM will focus on a number of technologies intended to enable Watson to detect emotions in written human communications, Rob High, chief technology officer at IBM’s Watson Solutions, said today at the GPU Technology Conference in San Jose.

“Computers will communicate with us on our terms,” he said. “They will adapt to our needs, rather than us having to interpret and adapt to them.”

As AI starts to hit the mainstream via services such as speech and image recognition, language translation and autonomous cars, the idea of humans and computers working together is a theme that many companies are starting to explore.

But so far the focus has been chiefly on humans helping to improve AI (or cognitive computing, as IBM calls it) rather than on AI adapting to human needs. Google uses human raters to assess the quality of its search results, and Facebook's facial recognition software asks people to label photos to improve accuracy. Indeed, deep learning, a branch of AI responsible for many recent breakthroughs, generally requires a great deal of human effort to train neural networks on hand-picked data sets.

The efforts by IBM, Google and others to humanize AI could fundamentally change the nature of computing, so that it accommodates the way people work and communicate rather than the other way around. Such a pervasive change could ripple through everything from the way we use our phones to the kinds of technologies used to store and process data in data centers and the cloud.

“In less than 10 years, cognitive systems will be to computing what transaction processing is today,” High said. To usher in such a sweeping change, the job of Watson, and of other computing and AI systems, will be to understand what humans want from the data those systems crunch and to present it in the way that is most meaningful to them.

“It’s about changing the roles between humans and computers,” High explained in an interview last month with SiliconANGLE. “We as humans have had to adapt to the computer. There’s only so much you can achieve with that two-dimensional experience.”

Specifically, IBM is offering application programming interfaces to software developers so they can use Watson to analyze emotions, tone and personality characteristics in text. For one, it offers a beta version of a Watson emotion analysis service, which uses linguistic analytics to gauge the emotions implied by a sample of text and returns confidence scores for anger, disgust, fear, joy and sadness. For instance, a customer review site such as Yelp could measure the level of disgust in a restaurant review to help the business understand what it needs to improve.
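To make the idea of per-emotion confidence scores concrete, here is a minimal Python sketch of how a developer might act on them. It assumes a hypothetical response shaped as a dictionary that maps each of the five emotions to a score between 0 and 1. The field names, the `dominant_emotion` and `flag_for_review` helpers, the 0.5 threshold and the sample scores are all illustrative assumptions, not Watson's actual API or output.

```python
from typing import Dict

# Hypothetical response shape: one confidence score (0.0 to 1.0) per emotion.
# These keys are illustrative assumptions, not the Watson service's real schema.
EMOTIONS = ("anger", "disgust", "fear", "joy", "sadness")


def dominant_emotion(scores: Dict[str, float]) -> str:
    """Return the emotion with the highest confidence score."""
    missing = [e for e in EMOTIONS if e not in scores]
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    return max(EMOTIONS, key=lambda e: scores[e])


def flag_for_review(scores: Dict[str, float], emotion: str = "disgust",
                    threshold: float = 0.5) -> bool:
    """Flag a review when one emotion's confidence meets a threshold.

    The 0.5 default is an arbitrary, hypothetical cutoff.
    """
    return scores.get(emotion, 0.0) >= threshold


if __name__ == "__main__":
    # Made-up scores for a single restaurant review, for illustration only.
    review_scores = {"anger": 0.21, "disgust": 0.67, "fear": 0.05,
                     "joy": 0.08, "sadness": 0.12}
    print(dominant_emotion(review_scores))
    print(flag_for_review(review_scores))
```

Picking the single highest score is only one possible design; a real application might weigh several emotions together or tune the threshold against labeled reviews.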

Watson also has a tone analyzer API that can measure similar emotions in text samples ranging from a full sentence down to a single word. One example High cited was a dating site profile that contained the sentence, “I raised two kids and now I’m starting a new chapter in my life.” Based on psycholinguistics and emotion and language analysis, Watson could determine that about a quarter of people reading that sentence would detect anger, suggesting it might be advisable to change the wording.

There’s also a personality insights service in the Watson Developer Cloud that can extract insights from a lengthy piece of text by a particular person, providing measurements of characteristics such as introversion or extroversion and conscientiousness. It could be used, for instance, to help recruiters match candidates to compatible companies.
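As a rough illustration of how such trait measurements might feed a recruiting tool, the sketch below ranks companies by how closely a hypothetical target trait profile matches a candidate's. The 0-to-1 trait scale, the example company profiles, the `rank_companies` helper and the choice of Euclidean distance are all assumptions for illustration; nothing here reflects how Watson's personality insights service actually computes or exposes its results.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical trait profile: trait name -> score on an assumed 0.0-1.0 scale.
Profile = Dict[str, float]


def trait_distance(a: Profile, b: Profile) -> float:
    """Euclidean distance over the traits both profiles share."""
    shared = a.keys() & b.keys()
    if not shared:
        raise ValueError("profiles share no traits")
    return math.sqrt(sum((a[t] - b[t]) ** 2 for t in shared))


def rank_companies(candidate: Profile,
                   companies: Dict[str, Profile]) -> List[Tuple[str, float]]:
    """Sort companies from closest to farthest trait match."""
    ranked = [(name, trait_distance(candidate, target))
              for name, target in companies.items()]
    return sorted(ranked, key=lambda pair: pair[1])


if __name__ == "__main__":
    # Made-up profiles; "ExampleCo" names are placeholders.
    candidate = {"extroversion": 0.3, "conscientiousness": 0.9}
    companies = {
        "ExampleCo A": {"extroversion": 0.8, "conscientiousness": 0.6},
        "ExampleCo B": {"extroversion": 0.2, "conscientiousness": 0.85},
    }
    for name, dist in rank_companies(candidate, companies):
        print(f"{name}: {dist:.3f}")
```

Distance-based ranking keeps the sketch simple; a production matcher would more likely weight traits differently or combine them with skills and experience.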

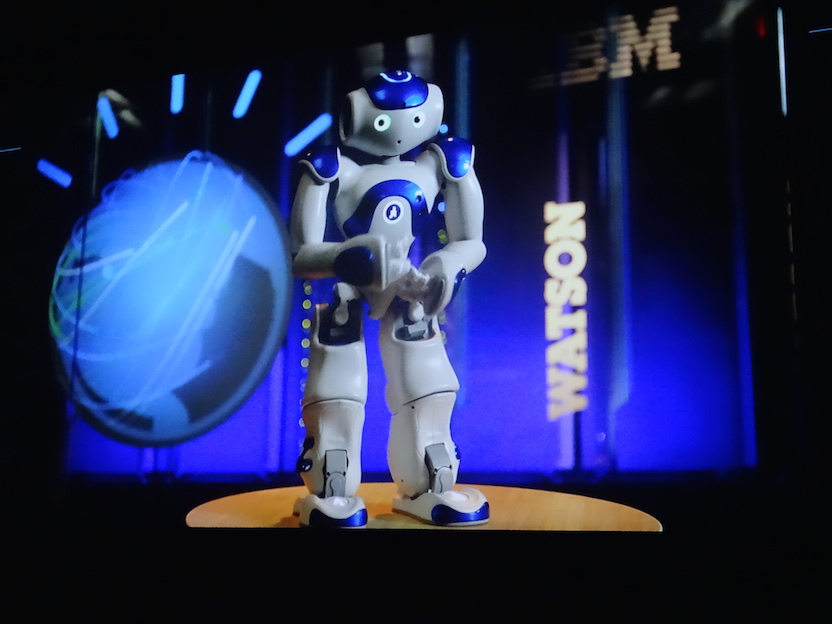

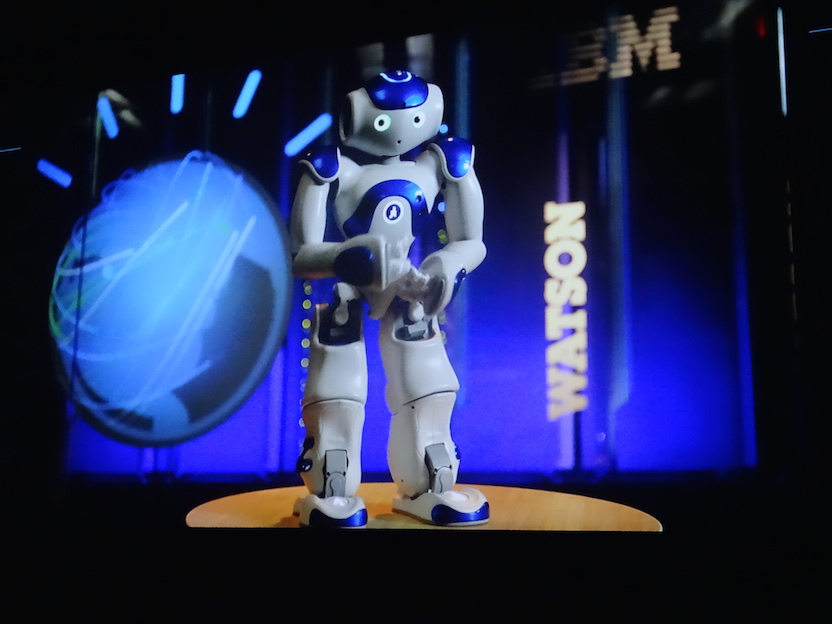

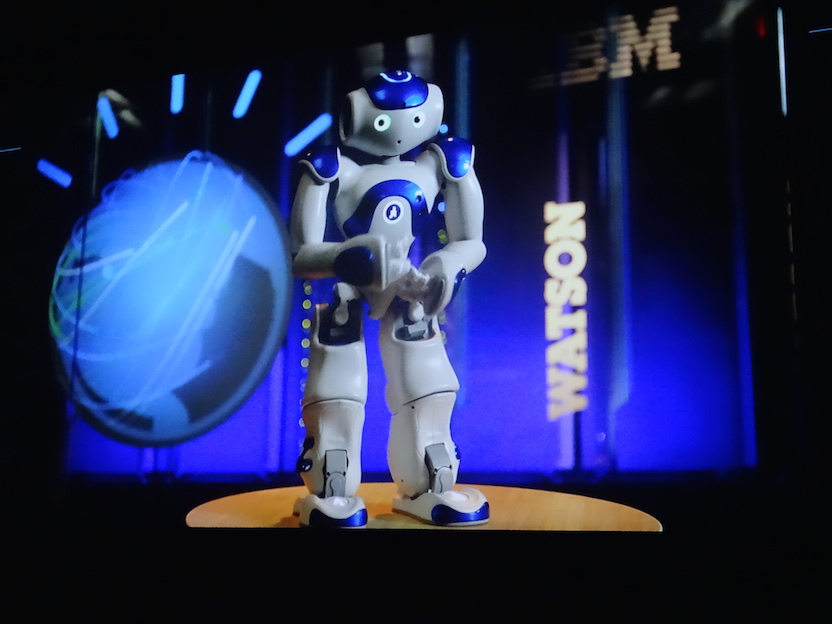

This kind of understanding, or at least analysis, of human emotions may find its most potent application in robots. IBM has experimented with SoftBank’s Aldebaran Nao robots (pictured above) to give them more human-seeming characteristics, such as the ability to converse more naturally, gesture to punctuate key points and even sing a Taylor Swift song.

In fact, despite the common assumption that robots will mainly be used for rote tasks, High said that in the short term they may matter more as a channel for communication between people and computer systems or cloud applications.

“Robots give us the facility to leverage these other channels of communications, such as vocal inflections and motion,” he said. Along with other new interfaces on every connected device, he added, “Everywhere we go there will be a presence of cognitive computing that facilitates our life.”
