CLOUD

Microsoft Corp. today said it’s teaming up with Nvidia Corp. so that its Azure cloud customers can tap into the graphics chipmaker’s GPU Cloud to train deep learning models.

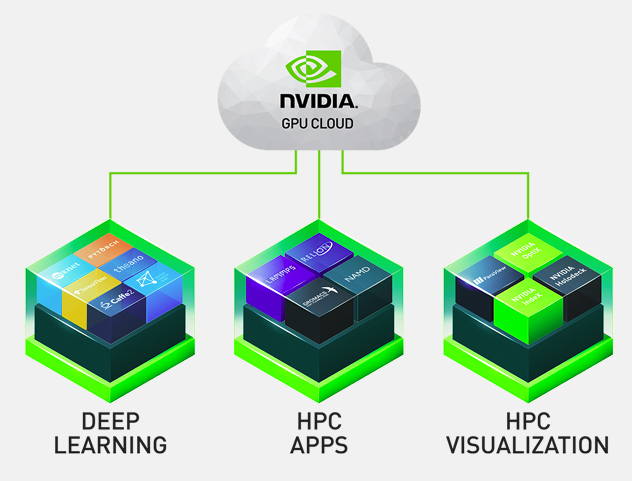

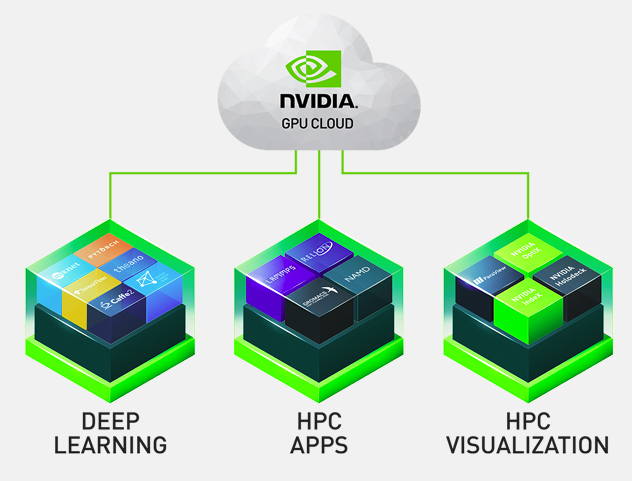

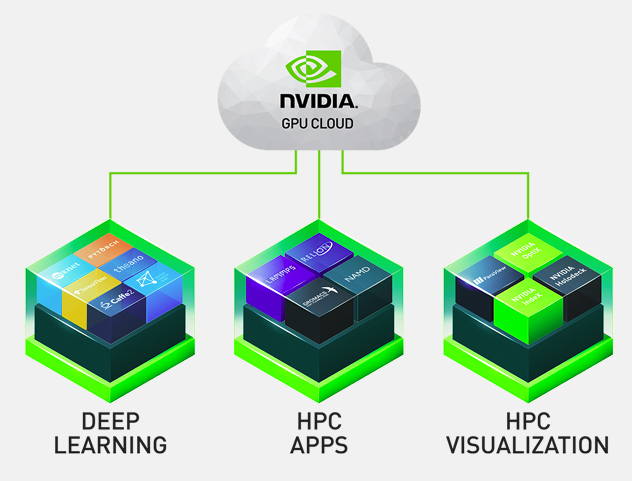

Nvidia’s GPU Cloud offers developers preconfigured containers with software accelerated by its graphics processing units, which outperform standard central processing units on workloads such as artificial intelligence. The new offering on Azure means data scientists, developers and researchers can avoid a number of integration and testing steps before running high-performance computing tasks, the companies said.

Nvidia launched its GPU Cloud in 2017. Powered by Nvidia Volta and its Tensor Core GPU architecture, it supports a range of popular deep learning tools such as Microsoft’s Cognitive Toolkit, TensorFlow and PyTorch.

Nvidia GPU Cloud provides access to some of the company’s most advanced GPUs, including its Tesla V100 chips, which are used to power dozens of supercomputers around the world. The same chips also deliver the intensive computing power needed for deep learning.

Microsoft is now offering access to 35 GPU-accelerated software containers for deep learning and HPC workloads on its cloud, which can run on the following Microsoft Azure instance types with Nvidia’s GPUs: the NCv3 (one, two or four Tesla V100 GPUs), the NCv2 (one, two or four Tesla P100 GPUs) and the ND (one, two or four Tesla P40 GPUs).
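For readers planning a deployment, the instance families listed above can be captured in a small lookup table. The following Python sketch is illustrative only: the names `GpuFamily`, `NGC_AZURE_FAMILIES`, `families_with_gpu` and `supports` are hypothetical and are not part of any Azure or Nvidia API, and the data simply restates the GPU models and counts given in this article.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class GpuFamily:
    """One Azure instance family, as described in the article (hypothetical type)."""
    name: str
    gpu_model: str
    gpu_counts: Tuple[int, ...]


# Data restated from the article; not fetched from any Azure service.
NGC_AZURE_FAMILIES = (
    GpuFamily("NCv3", "Tesla V100", (1, 2, 4)),
    GpuFamily("NCv2", "Tesla P100", (1, 2, 4)),
    GpuFamily("ND", "Tesla P40", (1, 2, 4)),
)


def families_with_gpu(gpu_model: str) -> List[str]:
    """Return the names of the families that use the given GPU model."""
    return [f.name for f in NGC_AZURE_FAMILIES if f.gpu_model == gpu_model]


def supports(family_name: str, gpu_count: int) -> bool:
    """Check whether a family is listed with the requested number of GPUs."""
    return any(
        f.name == family_name and gpu_count in f.gpu_counts
        for f in NGC_AZURE_FAMILIES
    )


if __name__ == "__main__":
    print(families_with_gpu("Tesla V100"))
    print(supports("ND", 4))
```

A table like this would need to be checked against Microsoft’s current instance documentation before use, since available sizes change over time.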

Staying with deep learning, Microsoft also announced the release of a new virtual machine image called “Nvidia GPU Cloud Image for Deep Learning and HPC” on Azure Marketplace, which is designed to make it easier to use Nvidia GPU Cloud containers on Azure. The software giant also said it’s making a high-performance computing cluster management tool called “Azure CycleCloud” generally available.

Today’s announcements come after Microsoft launched its Project Brainwave deep learning initiative in preview earlier this year. Project Brainwave is a cloud service for running AI models powered by Intel Corp.’s Stratix 10 field-programmable gate arrays, or FPGA chips, which are hardware accelerators that can deliver better performance than both CPUs and GPUs.
