AI

Nvidia Corp. is claiming another big breakthrough in artificial intelligence, this time setting new records in language understanding that could enable real-time conversational AI in a variety of software applications.

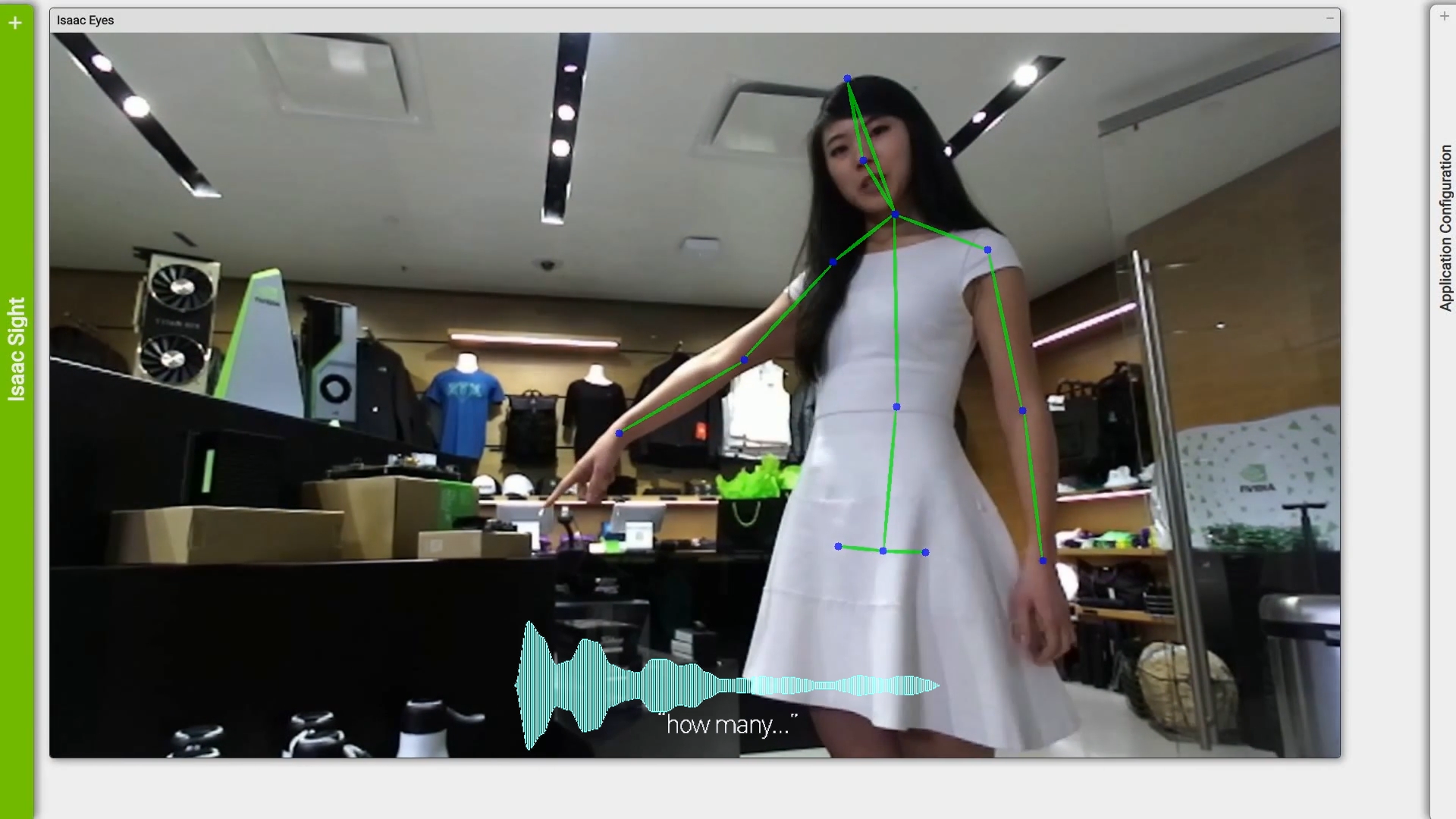

Nvidia said real-time conversational AI is a must-have for companies that want to build chatbots and virtual assistants that can have conversations with real people while displaying “human-level” comprehension.

“Conversational AI has tons of applications all over the world,” Bryan Catanzaro, vice president of applied deep learning research at Nvidia, said in a press briefing. “But it poses a lot of challenges. The industry has been moving toward much larger language models, but they’re difficult to train and difficult to deploy.”

The latest milestones include slashing the training time for one of the most advanced AI language models, Bidirectional Encoder Representations from Transformers, or BERT, from several days to just 53 minutes. Nvidia's systems also cut the time it takes to complete AI inference to just over two milliseconds, which is more than fast enough for the kind of fast-paced conversation that a human would expect.

The company said it set a world record by training BERT-Large in under one hour, a process that can take weeks, using optimized software and its DGX SuperPOD system.

Catanzaro said Nvidia's TensorRT platform set a world record for BERT inference with a latency of just over two milliseconds, well within the 10-millisecond threshold that many real-time applications require.
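The latency claim comes down to comparing measured per-request time against a fixed budget. The Python sketch below shows one way a developer might check a model against the 10-millisecond figure. It is illustrative only: `fake_bert_inference` is a hypothetical placeholder, not Nvidia's TensorRT sample, and judging by the median is an assumption (serving systems often track tail percentiles such as p99 instead).

```python
import statistics
import time
from typing import Callable, List

# Latency budget taken from the 10-millisecond threshold cited in the article.
REAL_TIME_BUDGET_MS = 10.0


def measure_latency_ms(infer: Callable[[], object], runs: int = 100, warmup: int = 10) -> List[float]:
    """Call `infer` `warmup` times untimed, then time `runs` calls in milliseconds."""
    for _ in range(warmup):
        infer()
    samples: List[float] = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def within_budget(samples_ms: List[float], budget_ms: float = REAL_TIME_BUDGET_MS) -> bool:
    """Return True if the median latency fits inside the budget (assumed criterion)."""
    return statistics.median(samples_ms) <= budget_ms


def fake_bert_inference() -> int:
    # Hypothetical stand-in for a real BERT forward pass, e.g. a TensorRT engine call.
    return sum(i * i for i in range(1000))


if __name__ == "__main__":
    samples = measure_latency_ms(fake_bert_inference)
    median = statistics.median(samples)
    print(f"median {median:.3f} ms, within budget: {within_budget(samples)}")
```

Replacing the placeholder with a real model call, and reporting a tail percentile alongside the median, would bring the check closer to how serving latency is usually evaluated.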

With the latest breakthroughs, Nvidia aims to power nothing less than the "next wave of conversational AI," and the company has already made solid progress in that direction, Catanzaro said.

For example, Nvidia has been working closely with Microsoft Corp. to drive more accurate search results in Bing.

“In close collaboration with Nvidia, Bing further optimized the inferencing of the popular natural language model BERT using Nvidia GPUs, part of Azure AI infrastructure, which led to the largest improvement in ranking search quality Bing deployed in the last year,” Microsoft Bing Group Program Manager Rangan Majumder said in a statement. “We achieved two times the latency reduction and five times throughput improvement during inference using Azure Nvidia GPUs compared with a CPU-based platform, enabling Bing to offer a more relevant, cost-effective, real-time search experience for all our customers globally.”

Constellation Research Inc. analyst Holger Mueller said Nvidia’s progress in conversational AI was significant because the technology is changing the way that people communicate with software and devices, and will have a big impact on the future of work in enterprises.

“The race for AI platforms is on, and things such as model training and execution speeds are determining the winners,” Mueller said.

He also said the partnership with Microsoft was a key win for both companies. In Nvidia's case, that's because Google LLC and Amazon Web Services Inc. are both building their own AI chips and are therefore less likely to rely on its hardware, even though adoption by public cloud providers is important for Nvidia's long-term success, he said.

“As for Microsoft, it needs partners as its internal efforts at putting algorithms on silicon aren’t happening, at least not yet,” Mueller said. “The field programmable gate arrays that Microsoft has championed with Azure do not cover the fast developing realm of conversational AI.”

Nvidia said it made a number of optimizations to its AI platform to achieve its breakthroughs in conversational AI, and these are now available to developers. They include new BERT training code for PyTorch, published on GitHub, and a TensorRT-optimized BERT sample, which has also been open-sourced.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.