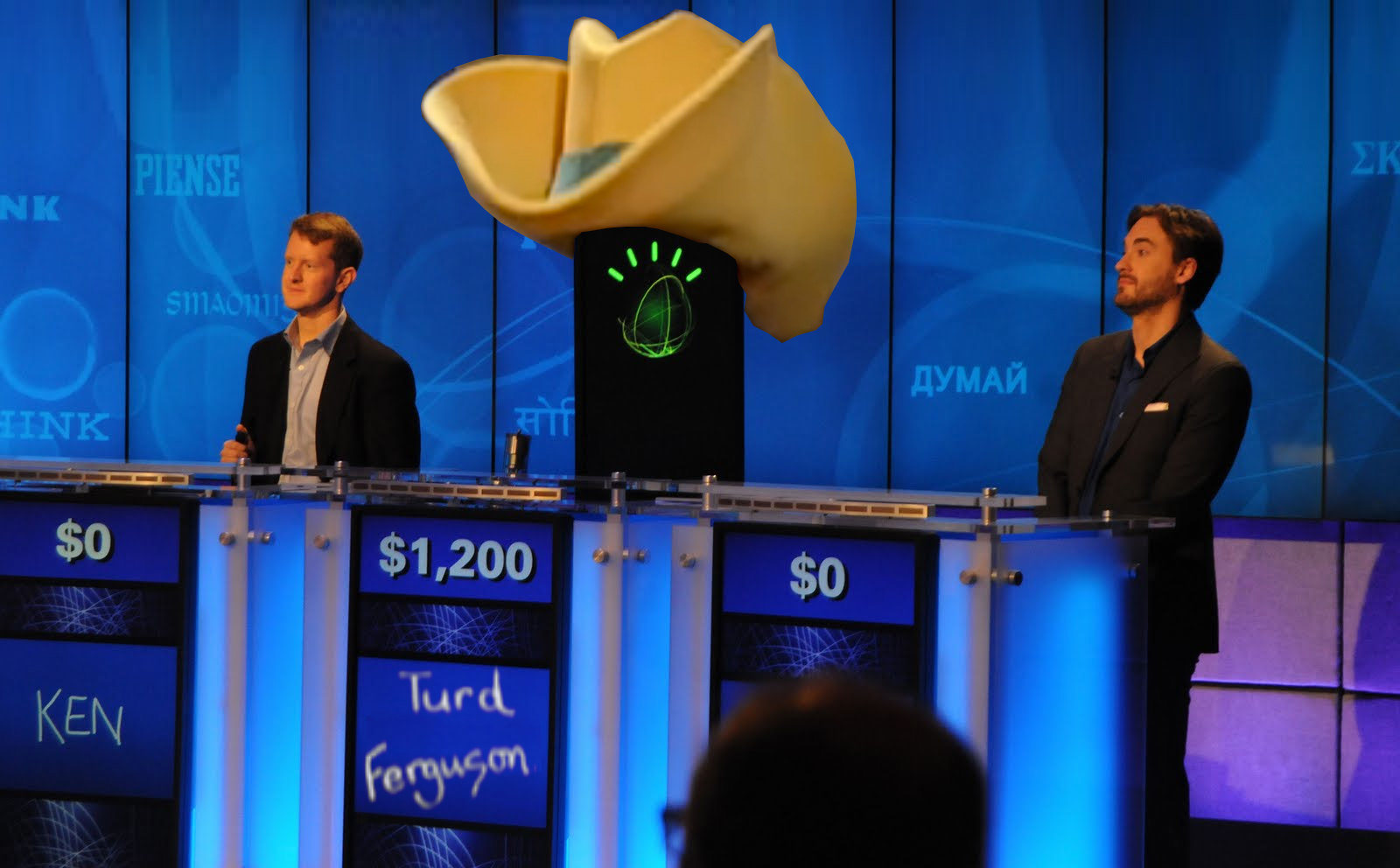

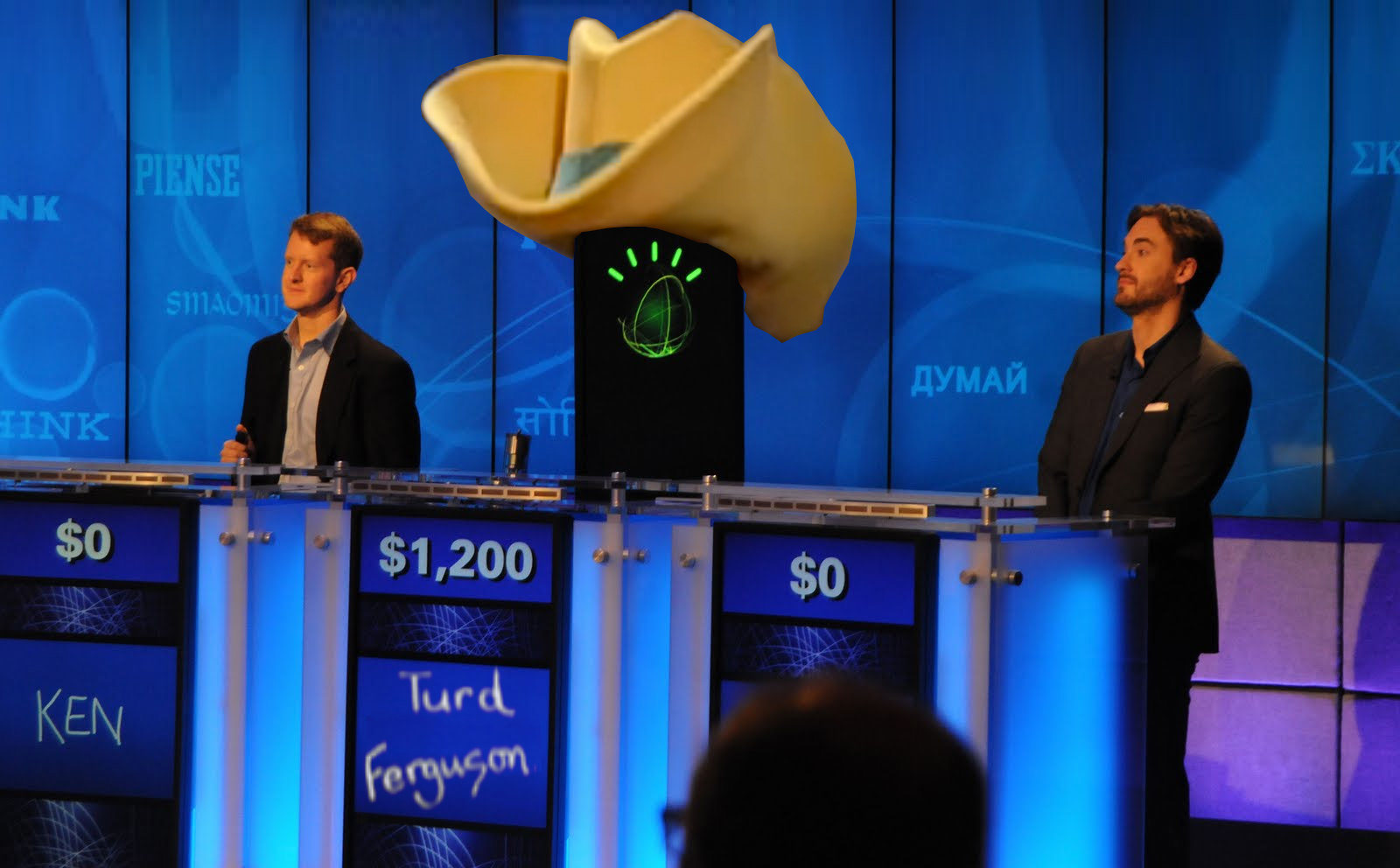

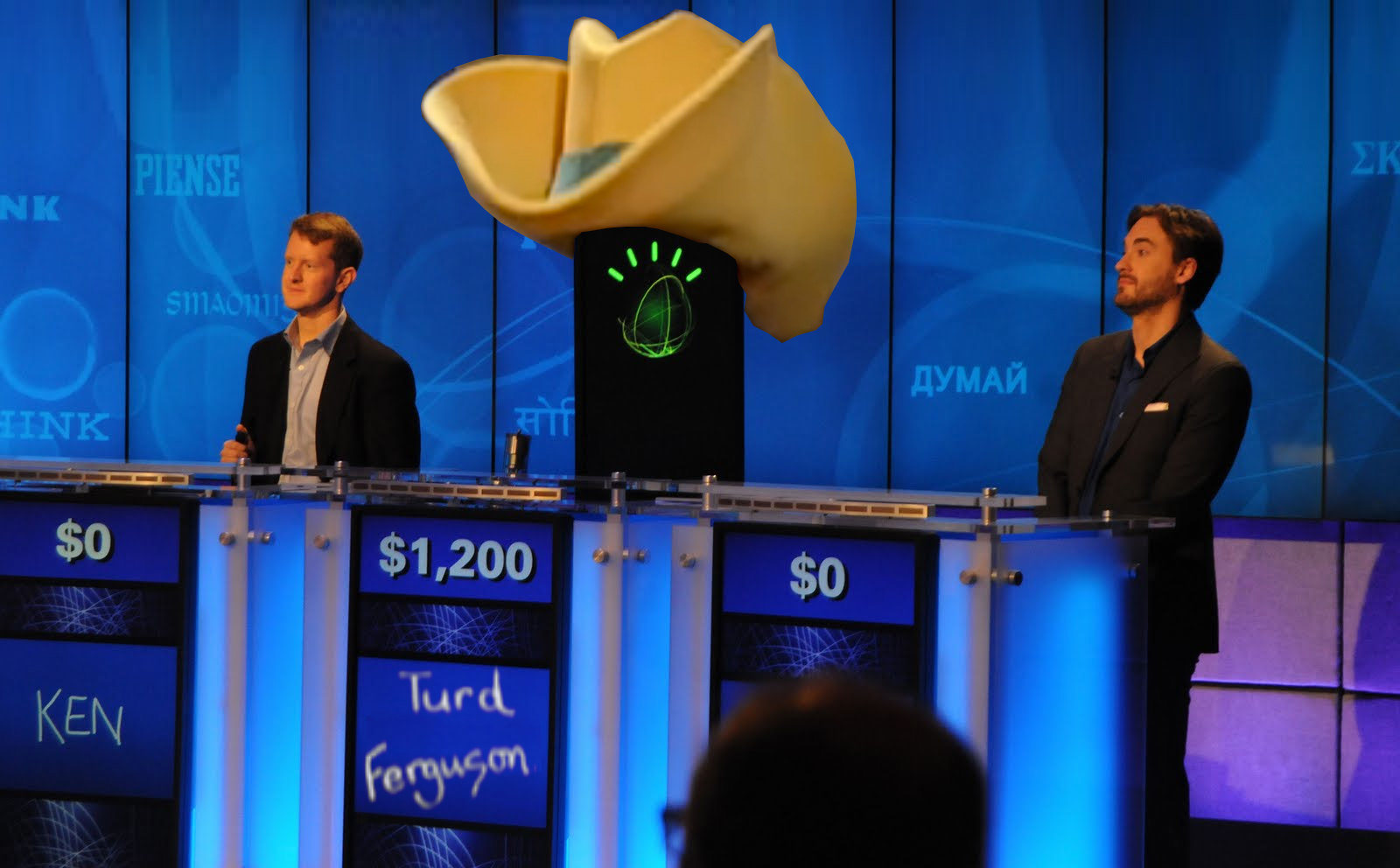

In 2011, the best game show player in the world lost to a computer, and the world has seemingly not been the same since.

The player was Ken Jennings (pictured, third from right), who had amassed a 74-game winning streak on the "Jeopardy!" TV quiz show, a feat that made him the best trivia contestant of his time. The computer was powered by IBM's Watson artificial intelligence software. The match wasn't even close.

The contest raised the possibility in the minds of the general public that if a computer could beat the world’s best quiz show contestant, it could probably take away a few manufacturing jobs too.

“I can speak to the personal feeling of being in that situation and watching the machine take your job on the assembly line and realizing that the thing you thought which made you special no longer exists,” said Jennings, author of several books including “Brainiac,” a recounting of the country’s trivia phenomenon. “If IBM throws enough money at it, your skill now is essentially obsolete. It was a disconcerting feeling.”

Jennings spoke with John Furrier (@furrier), host of theCUBE, SiliconANGLE Media's mobile livestreaming studio, in Palo Alto, California, as part of an "Around theCUBE: Unpacking AI" panel discussion. He was joined by John Hinson (pictured, second from right), director of AI at Evoteck, and Charna Parkey (pictured, far right), applied scientist at Textio. They discussed the need for inspectable AI, the public's negative and positive perceptions of the technology's growing role, and the importance of providing proper controls to ensure credibility and acceptance (see the full interview with transcript below). (* Disclosure below.)

A lot of water has flowed under the AI bridge since that fateful day in 2011. AI adoption has grown to the point where an estimated 37 percent of enterprises now use the technology.

Yet that growth has also highlighted social and ethical issues around the technology's impact and role. As AI adoption increases, so does the need for transparency: the ability to inspect and validate how models are built and how AI systems operate.

“We’re just now getting into the phase where people are realizing that AI isn’t just replacement, it has to be augmentation,” Parkey said. “We can’t just use images to replace recognition of people, we can’t just have a ‘black box’ to give our FICO credit scores. It has to be inspectable.”

Even with the move toward inspectable AI, industry participants understand that concerns about job loss or wholesale misuse of personal data create an environment where the technology can easily get a bad rap, deservedly or not.

“We have to collectively start telling the right stories, we need better stories than ‘the robots are going to take over and destroy all of our jobs’,” Hinson said. “What about tailor made medicine that’s going to tell me exactly what the conditions are based off a particular treatment plan instead of guessing? These are things that AI can do.”

AI can also create challenges for people who try to use the technology but must navigate problems that arise when others abuse it. According to Jennings, this has been the case with the rise of voice-driven digital assistants in the home.

“Every time I’m in a household that’s trying to use them, something goes terribly wrong,” Jennings said. “My friend had to rename his device because the neighbor kids kept telling it to do awful things.”

People who work in the AI industry are not blind to users' concerns, and many support giving consumers control over how much data about their personal lives they share.

“We should have the option to opt out of any of these products and any surveillance whenever we can,” Hinson said. “We cannot get overindulgent in the fact that we can’t do it because we’re so fearful of the ethical outcomes. We have to find some middle ground and we have to find it quickly and collectively.”

Finding that middle ground may become more urgent as policymakers take a more active role in governing how AI is used in society.

“Public policy has started to change,” said Parkey, who noted that California recently passed a law banning the use of facial recognition software in body cameras worn by police officers. “Part of what we’re missing is we don’t have enough digital natives in office to even attempt to predict what we’re going to be able to do with it.”

The "Around theCUBE" panel discussion featured a scoring system in which the panelists were awarded points throughout their conversation with Furrier. At the end, Jennings had finished dead last.

“Thanks,” Jennings said. “I’ve been defeated by AI again.”

Watch the complete video interview below, and be sure to check out more of SiliconANGLE’s and theCUBE’s CUBE Conversations. (* Disclosure: Juniper Networks Inc. sponsored this segment of theCUBE. Neither Juniper nor other sponsors have editorial control over content on theCUBE or SiliconANGLE.)

THANK YOU