CLOUD

Nvidia Corp. today announced the availability of its latest high-performance graphics processing unit on Google LLC’s public cloud platform.

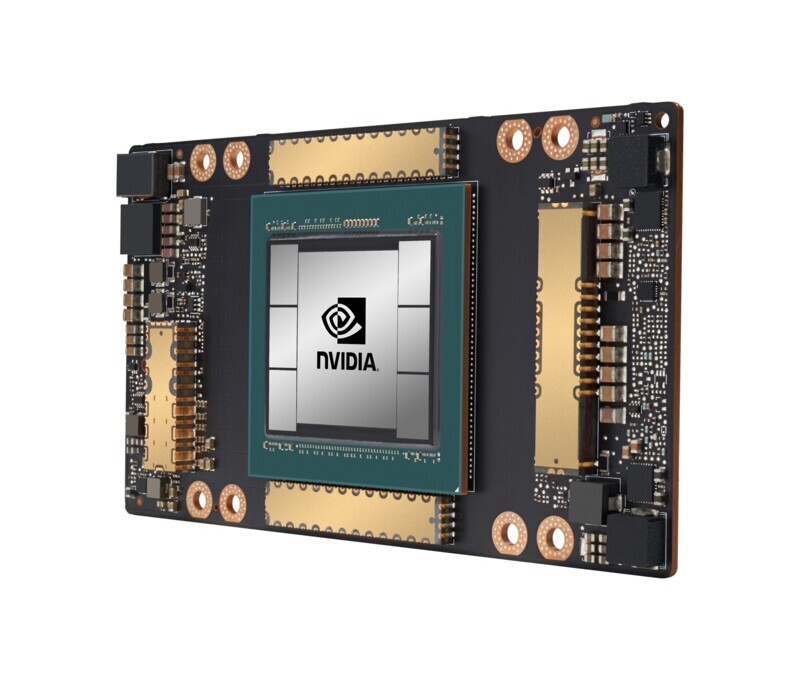

The Nvidia A100 Tensor Core GPU is now available in alpha as part of the new Accelerator-Optimized VM (A2) instance family on Google’s Compute Engine service.

Nvidia claims the new chip, built on its next-generation Ampere architecture, is the most powerful GPU it has made. Designed primarily for artificial intelligence training and inference workloads, it delivers up to a 20-times performance boost over its predecessor, the Volta GPU.

The A100 is also the largest chip Nvidia has ever built, with 54 billion transistors. It uses Nvidia's third-generation Tensor Cores, which add acceleration for sparse matrix operations that are especially useful in AI workloads.
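The sparsity support targets a specific pattern that Nvidia describes as 2:4 fine-grained structured sparsity: in every group of four weights, at most two are non-zero, which Nvidia says can up to double Tensor Core math throughput. The Python sketch below illustrates only the pruning step on a flat list of weights. The function name `prune_2_of_4` and the rule of dropping the two smallest-magnitude values are illustrative assumptions, not Nvidia's own tooling.

```python
def prune_2_of_4(weights: list[float]) -> list[float]:
    """Illustrative 2:4 pruning: in each group of four, zero the two
    smallest-magnitude weights (hypothetical helper, not an Nvidia API)."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned = list(weights)
    for start in range(0, len(weights), 4):
        group = range(start, start + 4)
        # Indices of the two entries with the smallest absolute value.
        smallest = sorted(group, key=lambda i: abs(weights[i]))[:2]
        for i in smallest:
            pruned[i] = 0.0
    return pruned


w = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]
print(prune_2_of_4(w))  # [0.9, 0.0, 0.0, -0.7, 0.0, 0.3, -0.4, 0.0]
```

In practice, a network pruned this way is usually fine-tuned afterward to recover accuracy; the sketch leaves that step out.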

“Google Cloud customers often look to us to provide the latest hardware and software services to help them drive innovation on AI and scientific computing workloads,” said Manish Sainani, a director of product management at Google Cloud. “With our new A2 VM family, we are proud to be the first major cloud provider to market Nvidia A100 GPUs, just as we were with Nvidia’s T4 GPUs. We are excited to see what our customers will do with these new capabilities.”

In addition to AI workloads, the A100 chip is also designed to power data analytics, scientific computing, genomics, edge video analytics and 5G services, Nvidia said.

The A100 can also partition itself into as many as seven isolated GPU instances, a feature Nvidia calls Multi-Instance GPU, so that it can run multiple tasks at once. In addition, multiple A100 chips can be linked through Nvidia's NVLink interconnect to train large AI models.

Google is taking advantage of that last feature. The new Accelerator-Optimized VM (A2) instance family includes an a2-megagpu-16g option that lets customers use up to 16 A100 GPUs in a single VM, providing a total of 640 gigabytes of GPU memory and 1.3 terabytes of system memory, with up to 9.6 terabytes per second of aggregate GPU-to-GPU bandwidth.
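The headline figures for the a2-megagpu-16g are simple per-GPU totals multiplied across 16 GPUs. The back-of-envelope check below assumes 40 GB of memory and 600 GB/s of NVLink bandwidth per A100, values taken from Nvidia's published specs for the original 40 GB A100 rather than from this article.

```python
# Back-of-envelope check of the a2-megagpu-16g aggregate figures.
# Assumption: per-GPU values match Nvidia's published A100 (40 GB) specs.
GPUS_PER_VM = 16
HBM_PER_GPU_GB = 40         # assumed: A100 40 GB variant
NVLINK_PER_GPU_GBPS = 600   # assumed: third-gen NVLink, total GB/s per GPU


def aggregate(gpus: int, per_gpu: int) -> int:
    """Sum a per-GPU quantity across every GPU in the VM."""
    return gpus * per_gpu


total_memory_gb = aggregate(GPUS_PER_VM, HBM_PER_GPU_GB)
total_bandwidth_tbps = aggregate(GPUS_PER_VM, NVLINK_PER_GPU_GBPS) / 1000

print(f"GPU memory: {total_memory_gb} GB")                  # GPU memory: 640 GB
print(f"Aggregate bandwidth: {total_bandwidth_tbps} TB/s")  # Aggregate bandwidth: 9.6 TB/s
```

Both results line up with the 640 GB and 9.6 TB/s quoted for the VM, which suggests those numbers are straight sums over the 16 GPUs.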

The A100 GPU is also available in smaller configurations for customers running less demanding workloads, and it will come to additional Google Cloud services in the near future, including Google Kubernetes Engine and Google Cloud AI Platform, Nvidia said.

The availability of the A100 GPU on Google Cloud is a major win for users, since it means they have an easy way to access Nvidia’s latest chip via the public cloud, said Constellation Research Inc. analyst Holger Mueller.

“It’s a win for Nvidia too, as it allows it to move its newest chip beyond on-premises deployments, and it’s a win for Google as it becomes the first of the large cloud service providers to support Nvidia’s latest platform,” Mueller said. “Now it all comes down to data analysts, developers and data scientists to use the new chip to power the AI component of their next-generation applications.”