INFRA

IBM Corp. today revealed the Power10 chip, the latest addition to its homegrown server processor series. It packs several new technologies, including a specialized module that the company says will increase artificial intelligence performance by up to 20 times.

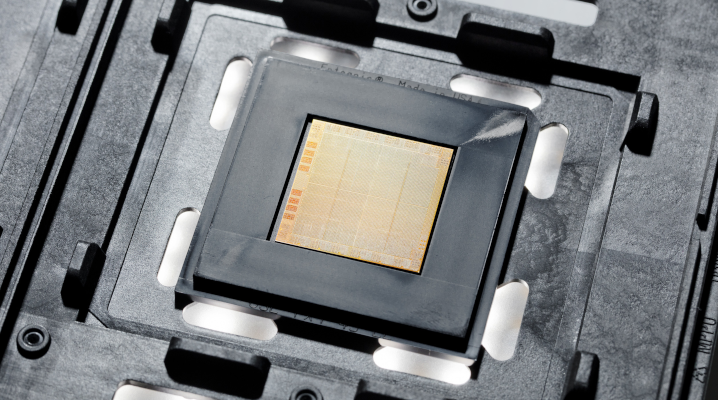

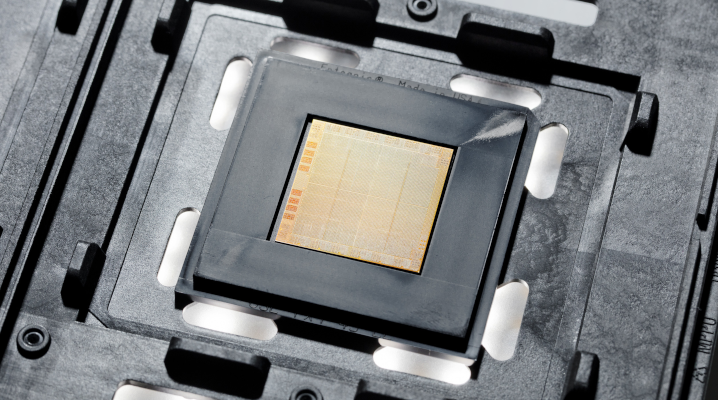

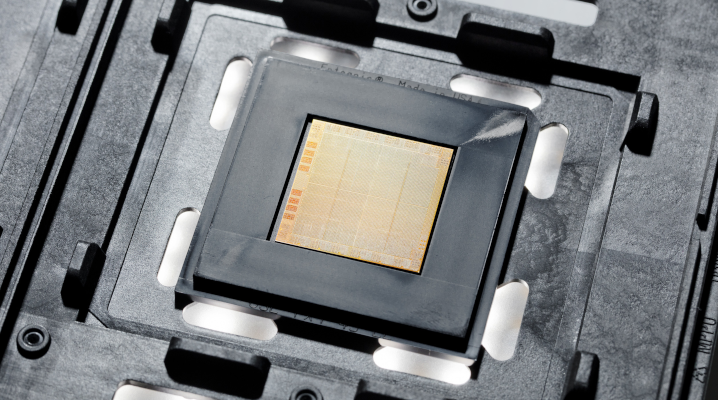

The chip (pictured), introduced at the annual Hot Chips conference, which is being held online this year, will be manufactured by Samsung Electronics Co. Ltd. It’s about the size of a postage stamp and packs about 18 billion transistors organized into 30 cores, up from 12 cores in the previous-generation Power9 chip.

Samsung will manufacture IBM’s latest chips using its new seven-nanometer process, which is expected to translate into significant efficiency improvements. IBM says an initial analysis showed that a Power10-based server can run up to three times as many workloads as a Power9-based machine with the same energy footprint. The company is also promising significant speedups for specialized workload types such as AI models and encryption algorithms.

To support the growing use of AI in the enterprise, IBM has equipped its Power10 chips with what it calls a Matrix Math Accelerator. It’s an embedded hardware module designed to speed up matrix operations, the type of mathematical calculation that machine learning models use to analyze data. IBM says the module is expected to speed up AI inference by up to 20 times. Inference is the processing a model does on live data after it has been trained and deployed in production.
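To make concrete why matrix operations dominate inference, here is a minimal Python sketch of a single neural-network layer computed as a matrix multiplication. It is an illustration only: the function names `matmul` and `dense_layer` and all numeric values are hypothetical, and the code does not use or represent IBM’s Matrix Math Accelerator interface.

```python
def matmul(a, b):
    """Multiply matrix a (m x k) by matrix b (k x n); both are lists of rows."""
    k = len(b)
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions must match")
    n = len(b[0])
    return [[sum(row[i] * b[i][j] for i in range(k)) for j in range(n)]
            for row in a]


def dense_layer(inputs, weights, bias):
    """One inference step: relu(inputs @ weights + bias)."""
    product = matmul(inputs, weights)
    # Add the bias to each column, then clamp negative values to zero (ReLU).
    return [[max(0.0, value + b) for value, b in zip(row, bias)]
            for row in product]


# Hypothetical data: two input samples with two features each,
# passed through a layer with three output units.
inputs = [[1.0, 2.0], [0.5, -1.0]]
weights = [[0.5, -1.0, 2.0], [1.5, 0.0, -0.5]]
bias = [0.0, 1.0, -1.0]

outputs = dense_layer(inputs, weights, bias)
print(outputs)
```

Nearly all of the arithmetic in this sketch happens inside `matmul`, which is the kind of multiply-and-accumulate work that dedicated matrix hardware is designed to speed up.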

On the encryption front, the Power10 chip has four times as many AES engines, specialized circuits for performing data encryption, as its predecessor. These are necessary because modern cryptographic algorithms can be resource-intensive to run at large scale. According to IBM, the extra cryptography-optimized silicon will translate into a 40% performance improvement for companies when encrypting business records.

Another new feature introduced with the chip is something IBM has dubbed memory inception. Usually, every server in a data center is set up with its own isolated memory capacity. That isolation can make it difficult to manage the capacity and provision it to applications. The Power10 chip promises to simplify the task by allowing servers to combine their resources into one big centralized memory pool that is easier to work with.

“The applications can run on a single system but they can access the memory wherever it is just like it’s on the system it’s running on,” Stephen Leonard, general manager of IBM’s cognitive systems unit, detailed in a blog post. “Given the wide range of applications that are now memory-intensive — we’re talking CRM, ERP supply chain applications — this is expected to be a game changer for those environments.”

IBM said systems based on its processors are used by about 80% of the highest-revenue companies in the U.S. It has started sampling the new Power10 chips to early customers and plans to begin mass production in the second half of 2021.
