The Lawrence Livermore National Laboratory said today it has integrated the National Nuclear Security Administration’s Lassen supercomputer with the world’s largest computer chip.

The integration means the Lassen system (pictured) is the first supercomputer in the world that combines artificial intelligence technology with high-performance computing modeling and simulation capabilities.

LLNL said the system has been designed to enable what it calls “cognitive simulation,” so its researchers can investigate novel approaches to predictive modeling. The initiative has several goals, including aiding fusion implosion experiments performed at the National Ignition Facility, materials science and the rapid development of new drugs to treat COVID-19 and cancer through the Accelerating Therapeutic Opportunities in Medicine project.

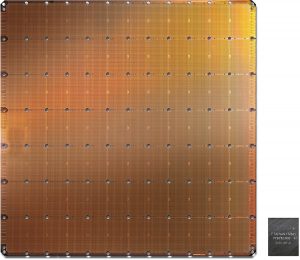

Lassen is currently ranked as the 14th most powerful supercomputer in the world, with more than 23 petaflops or 23 quadrillion floating-point operations per second, a standard measure of processing power. LLNL said the system has been integrated with Cerebras Systems Inc.’s CS-1 accelerator hardware system that’s powered by the Wafer Scale Engine (pictured here), a dedicated AI chip that’s 57 times the size of a standard data center graphics card and packs more than 1.2 trillion transistors on its circuitry.

Announced by Cerebras last year, the WSE chip is organized into 400,000 processing cores that have been specifically optimized for AI workloads, and it comes with a massive 18 gigabytes of high-speed onboard memory.

The initiative was funded by the NNSA’s Advanced Simulation and Computing program, which said the newly enhanced system will be used to accelerate a range of Department of Energy and national security mission applications over the coming decade.

LLNL Chief Technology Officer Bronis R. de Supinski said the initiative was driven by the lab's growing computational requirements. Those requirements simply can't be met through conventional means, because demand has rapidly outpaced Moore's Law, the observation that the density of transistors on a chip doubles roughly every two years.

“Cognitive simulation is an approach that we believe could drive continued exponential capability improvements, and a system-level heterogeneous approach based on novel architectures such as the Cerebras CS-1 are an important part of achieving those improvements,” he said.

The approach will allow researchers to explore a new concept known as “heterogeneity,” wherein different elements of the supercomputer contribute to different aspects of a particular workload. According to de Supinski, that makes it possible to run operations such as data generation and error correction concurrently, creating a more efficient and cost-effective solution to various scientific problems.

“Having a heterogeneous system is motivating us to identify where in our applications multiple pieces can be executed simultaneously,” said LLNL computer scientist Ian Karlin. “For many of our cognitive simulation workloads, we will run the machine learning parts on the Cerebras hardware, while the HPC simulation piece continues on the GPUs, improving time-to-solution.”

That means researchers will be able to skip a lot of unnecessary processing in workflows and accelerate their deep learning neural networks. It also minimizes the need to “slice and dice” some problems into smaller jobs.
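LLNL hasn't published the software behind this workflow. To illustrate the general idea of running the machine learning and simulation pieces at the same time, here is a minimal Python sketch. It makes several assumptions. `run_simulation_step` and `train_on_batch` are hypothetical stand-ins for the GPU-side simulation and the accelerator-side training. Two worker threads stand in for the two kinds of hardware. While the simulation produces batch k, the model trains on batch k-1.

```python
from concurrent.futures import ThreadPoolExecutor


def run_simulation_step(step: int) -> list[float]:
    """Hypothetical stand-in for one HPC simulation step (the GPU side).
    Returns a small batch of synthetic 'simulation outputs'."""
    return [float(step * 10 + i) for i in range(4)]


def train_on_batch(model_state: dict, batch: list[float]) -> dict:
    """Hypothetical stand-in for one ML training update (the accelerator side).
    'Training' here just tracks how many samples were seen and their sum."""
    return {
        "samples_seen": model_state["samples_seen"] + len(batch),
        "running_sum": model_state["running_sum"] + sum(batch),
    }


def pipelined_workflow(num_steps: int) -> dict:
    """Overlap simulation step k with training on the output of step k-1."""
    if num_steps < 1:
        raise ValueError("num_steps must be at least 1")
    model_state = {"samples_seen": 0, "running_sum": 0.0}
    with ThreadPoolExecutor(max_workers=2) as pool:
        pending_batch = run_simulation_step(0)  # prime the pipeline
        for step in range(1, num_steps):
            # Launch the next simulation step and the training update concurrently.
            sim_future = pool.submit(run_simulation_step, step)
            train_future = pool.submit(train_on_batch, model_state, pending_batch)
            model_state = train_future.result()
            pending_batch = sim_future.result()
    # Drain the pipeline: train on the final simulated batch.
    return train_on_batch(model_state, pending_batch)


if __name__ == "__main__":
    print(pipelined_workflow(3))  # {'samples_seen': 12, 'running_sum': 138.0}
```

In Python, threads mainly show the structure here. Real CPU-bound work would not run in parallel under the GIL. In a system like the one described, each stage would instead run on its own device.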

“Either we can do scientific exploration in a shorter amount of compute time, or we could go more in-depth in the areas where the science is less certain, using more compute time but getting a better answer,” said LLNL computer scientist Brian Van Essen.

Van Essen is heading up a research team that has selected two AI models to run on the CS-1 system. The team's preliminary work focuses on learning from up to five billion simulated laser implosion images in order to optimize fusion targets used in experiments at the National Ignition Facility, with the ultimate goal of achieving high energy output and robust fusion implosions for stockpile stewardship applications.

Meanwhile, LLNL and Cerebras will create a new Artificial Intelligence Center of Excellence, or AICoE, that aims to determine the optimal parameters for cognitive simulation. The research could lead LLNL to add more CS-1 systems to Lassen and to other supercomputing platforms.

“I’m a computer architect by training, so the opportunity to build this kind of system and be the first one to deploy these things at this scale is absolutely invigorating,” said Van Essen, who is leading the AICoE effort. “Integrating and coupling it into a system like Lassen gives us a really unique opportunity to be the first to explore this kind of framework.”
