Amazon.com Inc. today debuted a new iteration of its bestselling Echo smart speaker with a custom-built artificial intelligence chip for processing voice commands.

The Echo is the flagship device in Amazon's eponymous family of smart speakers. Amazon is estimated to have sold tens of millions of the devices worldwide since introducing the product line in 2014, making it the largest player in the market by share.

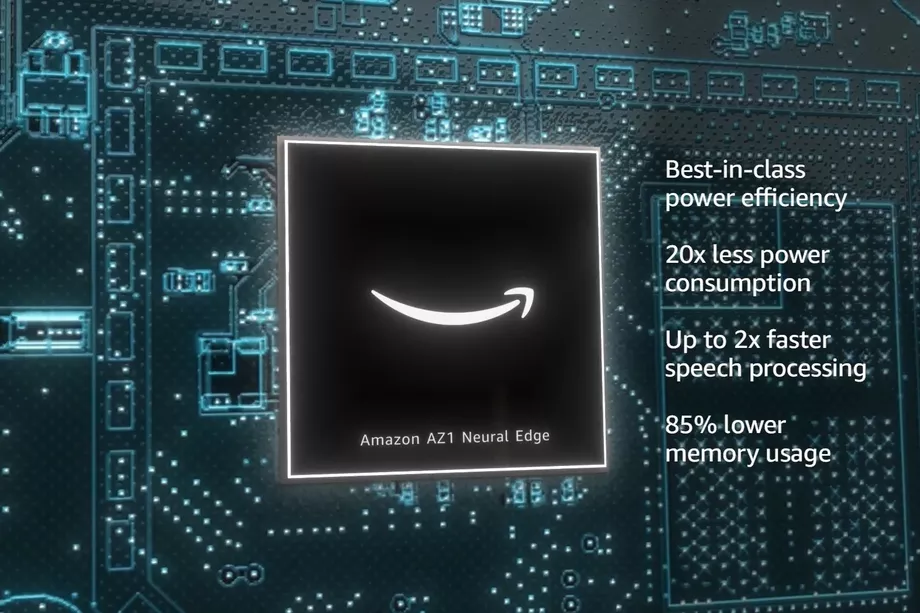

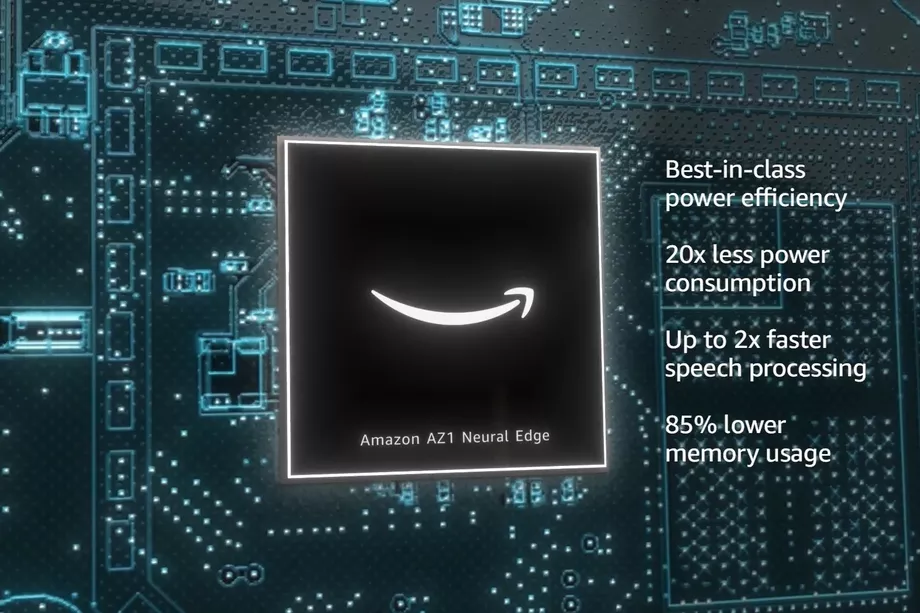

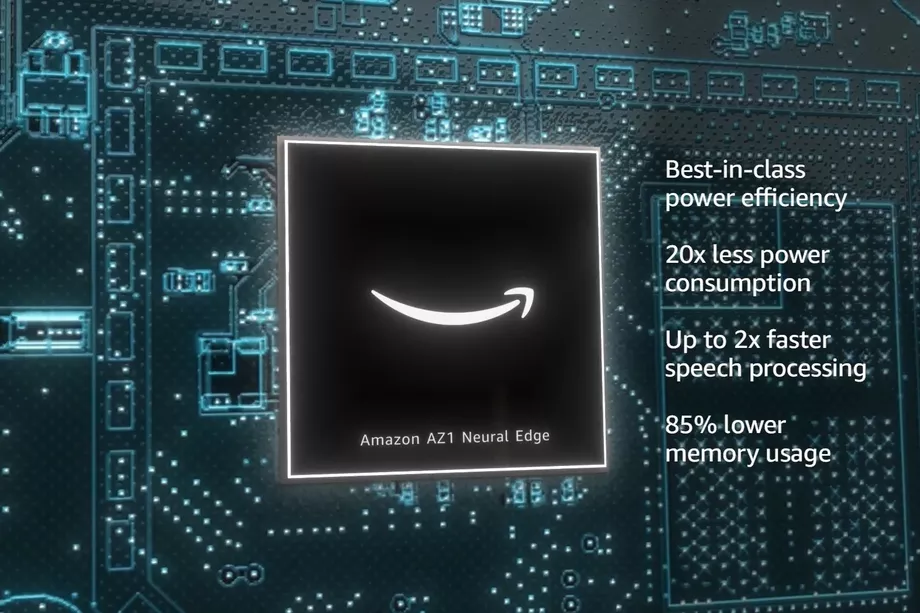

The AI processor in the new Echo model is called the AZ1 Neural Edge and was developed as part of a collaboration with semiconductor maker MediaTek Inc., Amazon executives said today. It will enable the Echo to answer user questions faster by processing voice commands locally. Earlier smart speakers had to send voice commands to the cloud for processing and then wait until the results came back, which delayed Alexa responses.

In practice, Amazon claims, the AZ1 will shave hundreds of milliseconds off each response, a difference that users can notice.

The company's engineers made major design improvements under the hood to enable the performance boost. Amazon says the AZ1 delivers double the performance of its previous-generation silicon on speech processing tasks while using one-twentieth the power. It also uses 85% less memory, which further improves hardware efficiency.

Amazon could potentially use the Echo's new ability to process voice commands locally to improve support for offline use. Because requests don't have to be sent to the cloud for processing, the AZ1 could in theory allow smart speakers to keep working in environments with spotty or no connectivity.

In the future, Amazon may also have an incentive to add the AZ1 to more Echo devices. Offline voice command processing could be a powerful addition to Echo devices designed for on-the-go and outdoor use, such as the Echo Auto, which doesn't always have access to a steady wireless connection.

The broad consumer adoption of voice assistants has also led consumer device makers other than Amazon to prioritize AI speech processing hardware. Google LLC's latest Pixel 4 smartphone, for example, packs a chip called the Neural Core that can run voice recognition algorithms locally. Apple Inc., in turn, recently debuted a new iPad Air with an AI module twice as fast as the module inside the previous version of the tablet.

Further up the supply chain, several chip startups have emerged to capitalize on the demand for speech processing silicon. One of them, Syntiant Inc., recently raised $35 million for a chip it says is 100 times more power-efficient than rival products.


Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.