AI

Nvidia Corp. opened its virtual GPU Technology Conference today by introducing a host of new artificial intelligence technologies, including an infrastructure offering that will allow customers to build a supercomputer optimized for deep learning in just a few weeks.

The infrastructure offering made its debut alongside a miniature AI development device and a service called Maxine for optimizing video call quality.

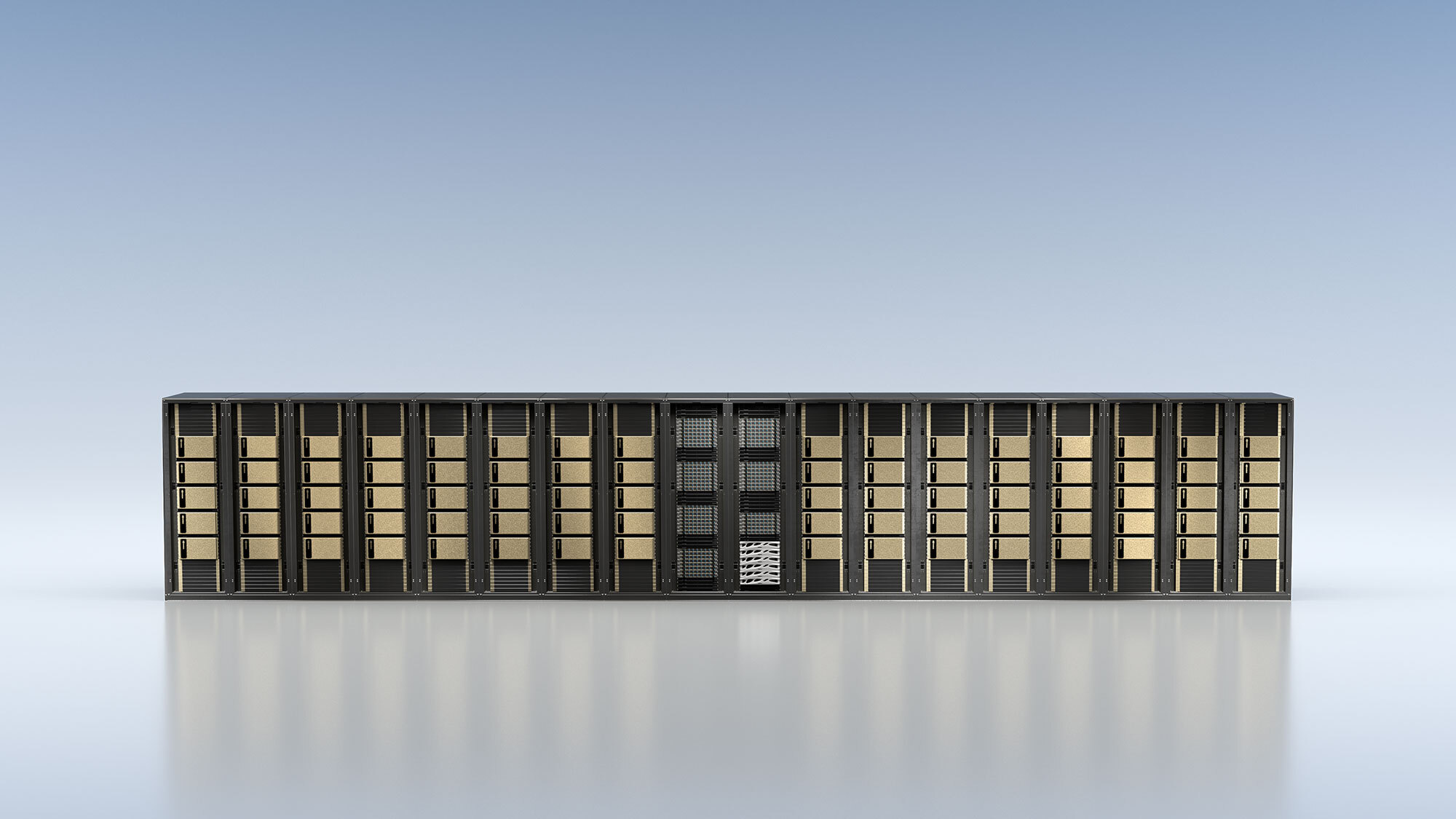

Nvidia’s graphics cards can be found in a sizable portion of the world’s fastest supercomputers. With SuperPod Solution for Enterprise, the new offering announced today, the company hopes to drive more chip sales in this market by lowering the barrier to building a supercomputer. SuperPod makes it possible to cobble together a multipetaflop system in a matter of weeks from Nvidia compute appliances and networking gear from its Mellanox subsidiary, according to the chipmaker.

The basic building block of a SuperPod supercomputer is Nvidia’s DGX A100 appliance. It pairs eight of the chipmaker’s top-of-the-line A100 data center graphics processing units with two central processing units and a terabyte of memory. SuperPod allows customers to link between 20 and 140 DGX A100 appliances in a network via Mellanox’s HDR InfiniBand switches to create a supercomputer with 100 to 700 petaflops of processing power, Nvidia claims. One petaflop equals a million billion floating-point operations per second.
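The scaling figures above can be sanity-checked with a little arithmetic. The sketch below is illustrative only: it takes the appliance counts, GPU count per appliance and petaflop totals quoted in this article and derives the per-appliance figure they imply. The function names are hypothetical, and the result is an inference from those numbers rather than a specification published here.

```python
# Back-of-the-envelope check of the SuperPod figures quoted in the article.
# All inputs come from the article; the function names are hypothetical.

GPUS_PER_DGX_A100 = 8  # eight A100 GPUs per DGX A100 appliance


def implied_petaflops_per_appliance(total_petaflops, appliances):
    """Petaflops each appliance must contribute to reach the quoted total."""
    return total_petaflops / appliances


def superpod_summary(appliances, petaflops_per_appliance):
    """Total GPU count and aggregate petaflops for a given appliance count."""
    return {
        "appliances": appliances,
        "gpus": appliances * GPUS_PER_DGX_A100,
        "petaflops": appliances * petaflops_per_appliance,
    }


# Smallest and largest configurations described in the article.
per_appliance_low = implied_petaflops_per_appliance(100, 20)
per_appliance_high = implied_petaflops_per_appliance(700, 140)

smallest = superpod_summary(20, per_appliance_low)
largest = superpod_summary(140, per_appliance_high)

print(per_appliance_low, per_appliance_high)
print(smallest)
print(largest)
```

Both ends of the quoted range work out to the same per-appliance figure, which suggests the 100-to-700-petaflop claim assumes performance scales linearly with the number of appliances.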

“Traditional supercomputers can take years to plan and deploy, but the turnkey NVIDIA DGX SuperPOD Solution for Enterprise helps customers begin their AI transformation today,” said Charlie Boyle, Nvidia’s vice president and general manager of DGX systems.

Alongside the product announcement itself, Nvidia shared details about two of the first projects being built with SuperPod. One of the projects is being led by the chipmaker itself. Nvidia is building a SuperPod-based system in Cambridge, U.K., at a cost of about $51.7 million that is expected to rank as Britain’s most powerful supercomputer when it comes online later this year.

The chipmaker said that the system will provide 400 petaflops of “AI performance” and 8 petaflops of performance as measured with the Linpack benchmark. This latter metric would rank it as the 29th most powerful system on the TOP500 list of the world’s most powerful supercomputers. U.K. pharmaceutical companies, academic institutions and others will use the system for healthcare research.

Another upcoming supercomputer Nvidia detailed today is being commissioned by the Ministry of Electronics and Information Technology in India. The Ministry’s SuperPod will consist of 42 DGX A100 appliances that will make it India’s fastest and largest HPC-AI supercomputer, Nvidia said. Researchers will use the system for projects in a variety of areas ranging from healthcare to energy.

On the opposite end of the computing spectrum from the SuperPod is the Jetson Nano 2GB, a $59 miniature AI device that Nvidia also announced today. It’s a small circuit board with four CPU cores based on Arm Ltd.’s Cortex-A57 architecture and 128 GPU cores based on Nvidia’s own Maxwell architecture. Also included are 2 gigabytes of memory.

The Jetson Nano 2GB’s low price point makes it useful as an AI education tool. Schools can employ it to teach students the basics of building and deploying machine learning models, while developers looking to learn the ropes of AI development could also put it to use. Additionally, the Jetson Nano 2GB lends itself to certain types of software testing, such as trialing a voice recognition model being developed to run on smart speakers with limited onboard computing power.

Nvidia capped off the AI hardware updates at its event today with a software announcement. The chipmaker introduced Maxine, a cloud-based AI service that promises to reduce the amount of bandwidth required for video calls by as much as 10 times.

Nvidia says that Maxine works by analyzing “key facial points” of every participant in a video call. Then, instead of sending the full live video feed of each participant, Maxine sends only data about those key facial points and uses the data to artificially animate their faces. This approach essentially trades computing power for bandwidth, reducing the net amount of data that has to travel across the network during a call.
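To see why sending facial keypoints instead of full video can save bandwidth, consider a rough estimate. The sketch below is not Nvidia’s method or API. The function names are hypothetical, and every input value (keypoint count, bytes per keypoint, frame rate and the conventional video bitrate) is a placeholder chosen for illustration, not a measurement of Maxine.

```python
# Rough bandwidth comparison: a stream of facial keypoints vs. a video stream.
# Every number below is a hypothetical placeholder, not a Maxine measurement.


def keypoint_stream_kbps(num_keypoints, bytes_per_keypoint, fps):
    """Bitrate in kilobits per second for sending keypoints on every frame."""
    bits_per_frame = num_keypoints * bytes_per_keypoint * 8
    return bits_per_frame * fps / 1000


def reduction_factor(video_kbps, keypoint_kbps):
    """How many times smaller the keypoint stream is than the video stream."""
    return video_kbps / keypoint_kbps


# Assumed inputs: 100 keypoints, 4 bytes each (two 16-bit coordinates),
# 30 frames per second, and a 1,000 kbps conventional video stream.
keypoints_kbps = keypoint_stream_kbps(num_keypoints=100, bytes_per_keypoint=4, fps=30)
factor = reduction_factor(video_kbps=1000, keypoint_kbps=keypoints_kbps)

print(f"keypoint stream: {keypoints_kbps:.1f} kbps")
print(f"reduction factor: {factor:.1f}x")
```

The estimate ignores anything else a real system would need to transmit, such as reference frames or audio, and it leaves out the computing cost of reconstructing each face at the receiving end, which is the other side of the trade.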

The benefit to users is higher call quality in scenarios where connectivity is limited or unreliable. For videoconferencing providers, in turn, compressing video data with Maxine could be a way to reduce their cloud infrastructure bills. That’s on top of the competitive advantage of being able to offer sharper video to users.

“These days lots of companies want to turn bandwidth problems into compute problems because it’s often hard to add more bandwidth and easier to add more compute,” explained Andrew Page, a director of advanced products in Nvidia’s media group.

Nvidia has also equipped Maxine with other AI features in a bid to win over videoconferencing providers. The service can adjust footage of call participants’ faces to make it appear as if they are looking at each other and includes a noise cancellation capability to reduce video distortions. It’s also capable of performing a number of additional similar optimizations, allowing customers to pick and choose which effects to use based on their specific needs.
