CLOUD

Amazon Web Services Inc. said today that its next generation of graphics processing unit-based compute instances for high-performance computing and machine learning workloads is now generally available to all customers.

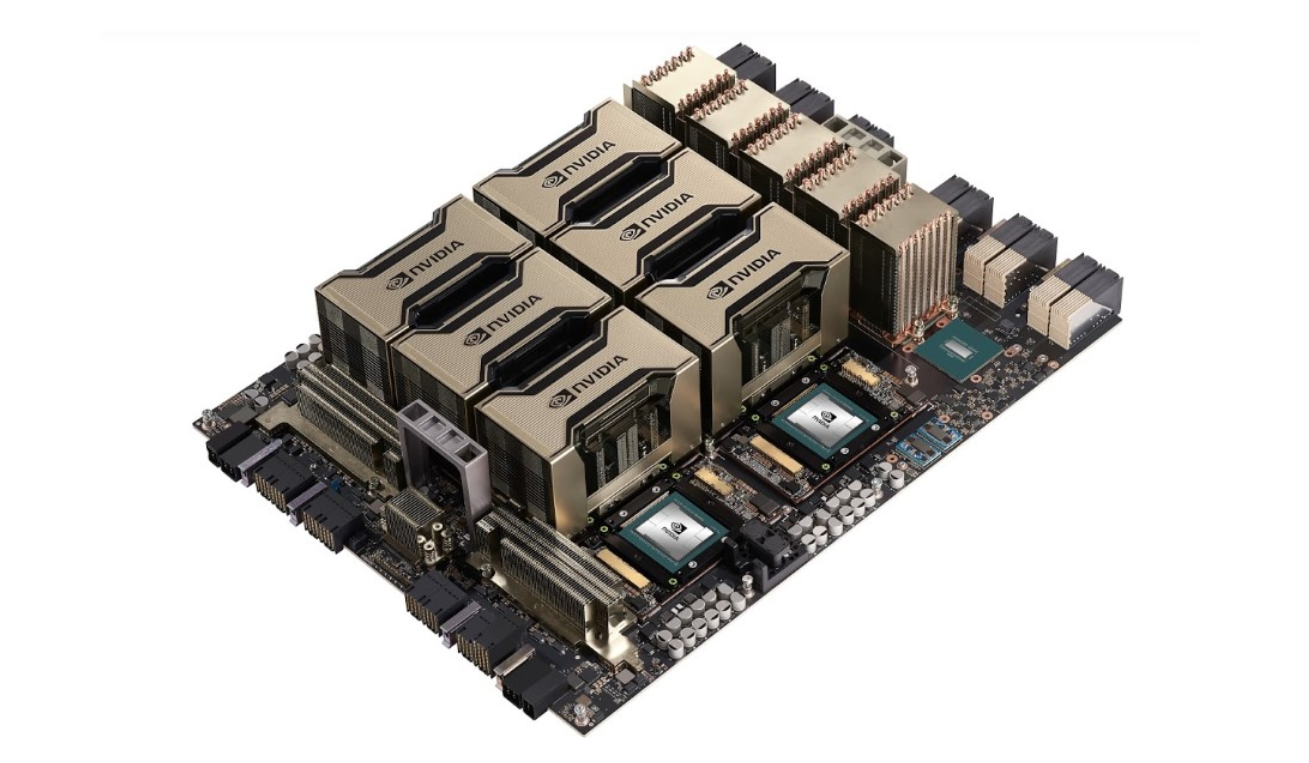

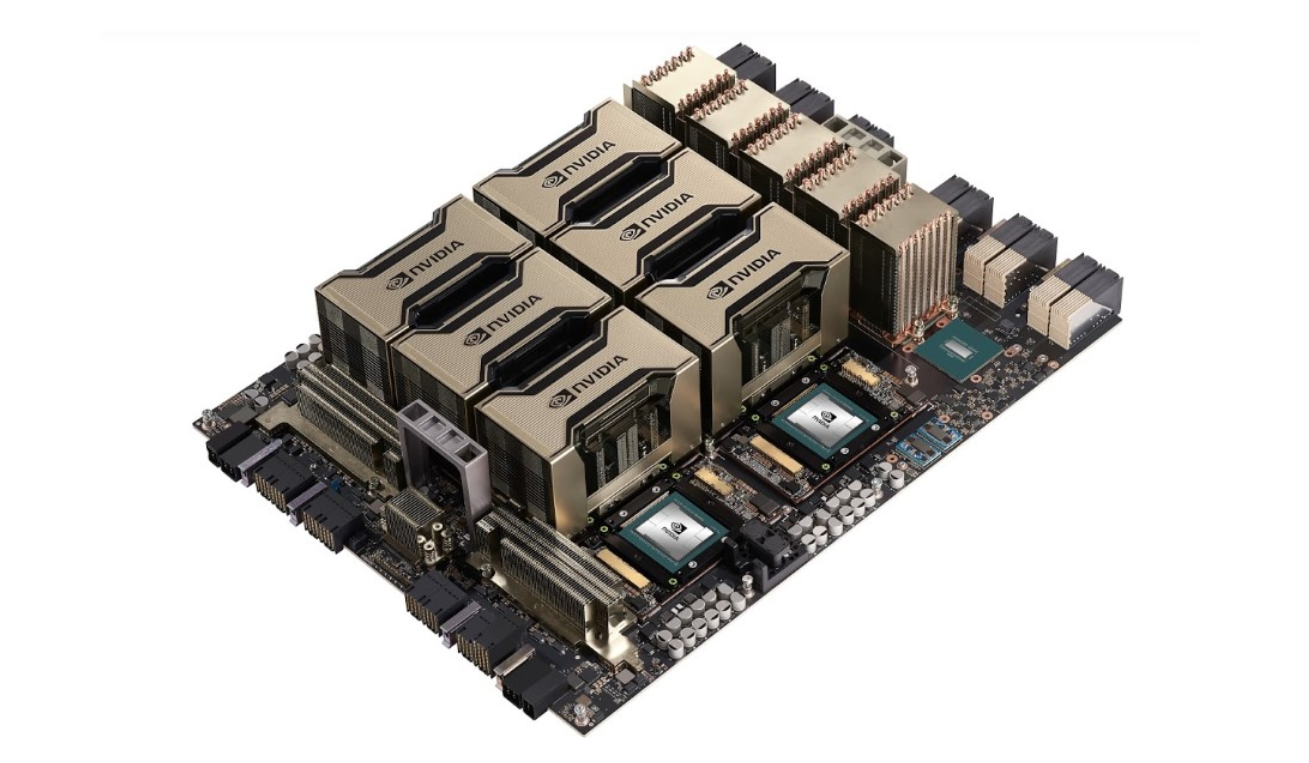

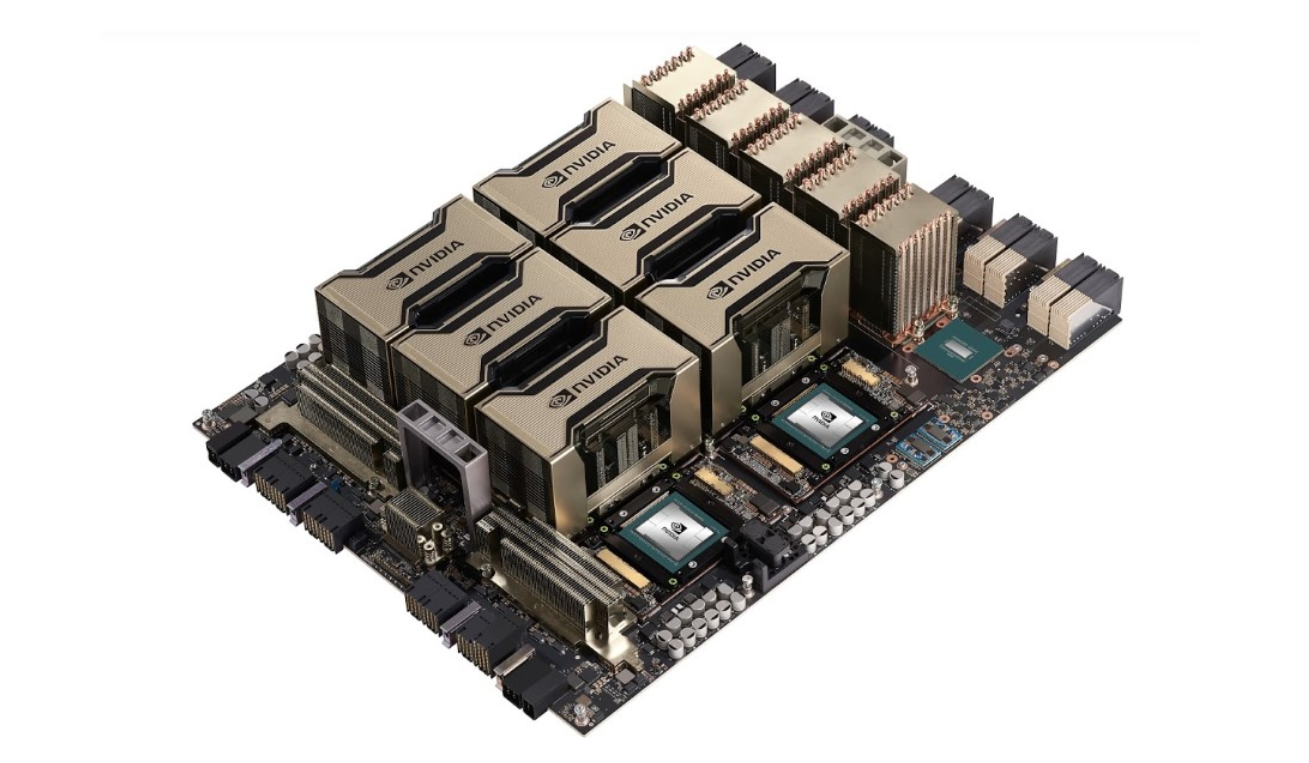

The Amazon EC2 P4d instances are powered by Nvidia Corp.’s newest and most powerful A100 Tensor Core GPU (pictured) and are designed for advanced cloud applications such as natural language processing, object detection and classification, seismic analysis and genomics research, which require massive amounts of computing power.

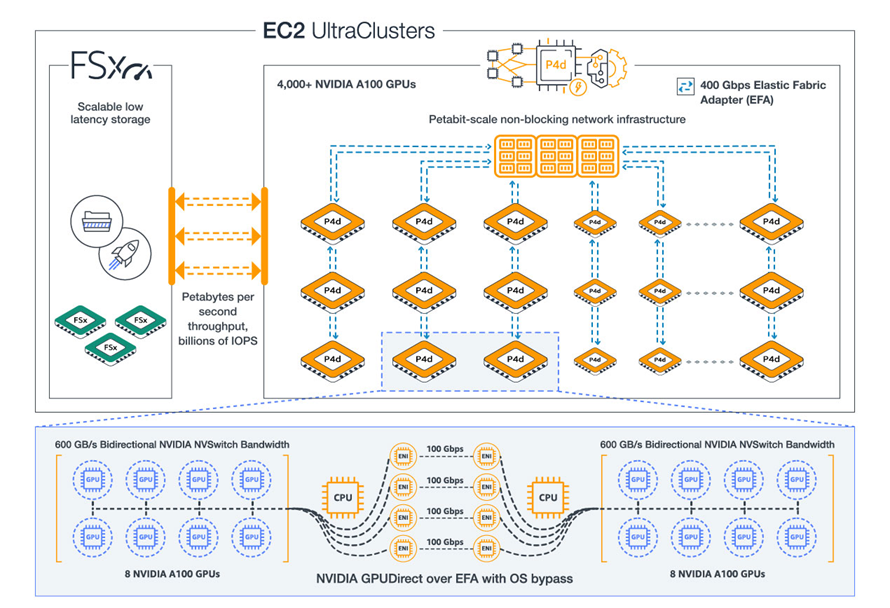

The instances are also built to scale out. Amazon Chief Evangelist Jeff Barr said in a blog post that the EC2 P4d instances provide up to 400 gigabits per second of instance networking and support both Elastic Fabric Adapter and Nvidia GPUDirect RDMA (remote direct memory access), enabling efficient scale-out of multinode machine learning training and HPC workloads.

The compute power of Nvidia’s A100 GPU means the instances can cut the cost of training machine learning models by up to 60% compared with the previous-generation P3 instances, and deliver 2.5 times the deep learning performance, with more than double the memory and twice the double-precision floating-point performance, Barr said. They also provide 16 times as much network bandwidth and four times as much local NVMe flash-based SSD storage as the P3 instances.
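To make two of the quoted ratios concrete, the following Python sketch works through them using only figures from the paragraphs above. The $10,000 P3 job cost is a hypothetical number chosen purely for illustration, and the 60% figure is treated as a best case because AWS says "up to."

```python
# Worked arithmetic on ratios quoted in the article.
# Assumption: the $10,000 P3 training cost used below is hypothetical.
P4D_NETWORK_GBPS = 400      # "up to 400 gigabits per second"
NETWORK_RATIO_VS_P3 = 16    # "16 times as much network bandwidth"
MAX_COST_REDUCTION = 0.60   # "up to 60%" lower training cost


def implied_p3_network_gbps() -> float:
    """P3 baseline bandwidth implied by the 400 Gbps and 16x figures."""
    return P4D_NETWORK_GBPS / NETWORK_RATIO_VS_P3


def best_case_p4d_cost(p3_cost: float) -> float:
    """Lowest P4d training cost consistent with an 'up to 60%' reduction."""
    return p3_cost * (1 - MAX_COST_REDUCTION)


if __name__ == "__main__":
    print(f"Implied P3 bandwidth: {implied_p3_network_gbps()} Gbps")
    print(f"Best-case P4d cost for a $10,000 P3 job: ${best_case_p4d_cost(10_000):,.0f}")
```

In other words, the bandwidth claim implies a 25 Gbps P3 baseline, and a job that costs $10,000 on P3 would cost no less than about $4,000 on P4d under AWS's best-case figure.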

Evidence of the A100’s inference performance came last week, when the GPU set new records in all six application areas for data center and edge computing systems in the second version of the MLPerf Inference benchmark. MLPerf is widely regarded as the industry standard for measuring how quickly AI systems can produce a result from the data they’re given.

To ensure that customers have enough compute power for the most demanding workloads, Barr said AWS is deploying the P4d instances in hyperscale EC2 UltraClusters (below). Each UltraCluster packs more than 4,000 A100 GPUs, supported by petabit-scale nonblocking networking infrastructure and high-throughput, low-latency storage. The EC2 UltraClusters are essentially supercomputers in the cloud that Amazon is making available to everyday developers, data scientists and researchers.

“Using these EC2 UltraClusters, developers can scale their multi-node ML training or HPC applications to thousands of GPUs to solve their most complex problems, or scale down to just a few instances, paying only for the instances they use,” Barr said.
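As a rough illustration of what scaling to thousands of GPUs means in instance terms, here is a small Python sizing sketch. It assumes the p4d.24xlarge size, which carries eight A100 GPUs and the 400 Gbps networking figure Barr cited; the article itself does not name the instance size, and the helper names are hypothetical. The aggregate bandwidth is a simple sum and ignores how the UltraCluster network is actually wired.

```python
import math

# Assumption: one p4d.24xlarge instance = 8 A100 GPUs and 400 Gbps networking.
# The article does not name the instance size; these constants are labeled inputs.
GPUS_PER_P4D = 8
P4D_NETWORK_GBPS = 400


def instances_for_gpus(gpus: int) -> int:
    """Smallest number of P4d instances that provides at least `gpus` GPUs."""
    if gpus <= 0:
        raise ValueError("gpus must be positive")
    return math.ceil(gpus / GPUS_PER_P4D)


def aggregate_network_gbps(instances: int) -> int:
    """Sum of per-instance bandwidth; ignores switch topology and contention."""
    return instances * P4D_NETWORK_GBPS


if __name__ == "__main__":
    # From "just a few instances" up to UltraCluster scale.
    for gpus in (8, 64, 4000):
        n = instances_for_gpus(gpus)
        print(f"{gpus} GPUs -> {n} instances, {aggregate_network_gbps(n)} Gbps combined")
```

By this arithmetic, an UltraCluster with more than 4,000 GPUs corresponds to more than 500 such instances.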

Amazon said the P4d instances are available now in the AWS US East (N. Virginia) and US West (Oregon) regions, and can be purchased as On-Demand Instances, through AWS Savings Plans, as Reserved Instances or as Spot Instances.

Machine learning and orchestration services such as Amazon SageMaker, Amazon Elastic Kubernetes Service, Amazon Elastic Container Service, AWS ParallelCluster and AWS Batch will all add support for the new instances in the coming weeks, Amazon said.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.