Facebook Inc. is helping artificial intelligence systems use their memory more effectively by teaching them to forget information they don't need.

Teaching AI models stuff is easy. They're simply fed labeled datasets, and as they process the data, they remember everything within it. Teaching them to forget some of the things they've learned, however, is an entirely different ballgame that hasn't been possible up until now.

Humans can easily forget things, and in fact it happens all of the time. For example, how many people can remember what clothes they were wearing on Jan. 4 this year? Unless something memorable happened that day, chances are most people can’t remember.

Neural networks are different. In a blog post, Facebook's AI team explained that neural networks process the information they're fed indiscriminately and remember every last piece of it.

“At a small scale, this is functional,” the researchers said. “But current AI mechanisms used to selectively focus on certain parts of their input struggle with ever larger quantities of information, like longform books or videos, incurring unsustainable computational costs.”

In other words, when used at scale, AI models can quickly become overwhelmed with unimportant details that slow down their ability to process new information and perform the tasks they were designed for. Those details also drive up compute costs.

To remedy that, Facebook has come up with Expire-Span, a method that teaches deep learning models how and when to forget certain details at scale. Expire-Span works by predicting which pieces of information are most relevant to the task the model is performing. Then, based on the context, Expire-Span assigns each piece of information an expiration date, after which it gradually fades from the model's memory.

“When the date has passed, the information gradually expires from the AI system,” Facebook’s researchers explained. “Intuitively, more relevant information is retained longer, while irrelevant information expires more quickly. With more memory space, AI systems can process information at drastically larger scales.”
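To make the idea of gradual expiry concrete, here is a minimal Python sketch of one way a memory could fade out instead of vanishing the instant its expiration date passes. It assumes a simple linear fade over a fixed number of steps; the function name `expire_mask` and its `ramp` parameter are hypothetical illustrations, not Facebook's code.

```python
def expire_mask(expiration: float, age: int, ramp: float) -> float:
    """Weight in [0, 1] for a memory that is `age` steps old.

    Assumption for illustration: the weight stays at 1.0 until the memory's
    expiration value is reached, then falls linearly to 0.0 over the next
    `ramp` steps rather than dropping to zero all at once.
    """
    remaining = expiration - age  # steps left before the "expiration date"
    return max(0.0, min(1.0, 1.0 + remaining / ramp))


# A memory assigned an expiration value of 10 steps, fading over 4 steps.
for age in (0, 10, 11, 12, 14, 20):
    print(age, expire_mask(10.0, age, 4.0))
# 0 1.0
# 10 1.0
# 11 0.75
# 12 0.5
# 14 0.0
# 20 0.0
```

The fade keeps the weight continuous, which is one reason a gradual decay can be preferable to a hard cutoff when the expiration values themselves are meant to be learned.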

Expire-Span is modeled on how the human brain retains memories. The brain makes room for important knowledge by keeping it easy to recall, rather than getting overwhelmed with every single detail it has encountered. Expire-Span works the same way, keeping data that's useful and forgetting the stuff that isn't.

Facebook explains how Expire-Span works in more detail:

“Picture a neural network presented with a time series of, for example, words, images, or video frames. Expire-Span calculates the information’s expiration value for each hidden state, each time a new piece of information is presented, and determines how long that information is preserved as a memory. This gradual decay of some information is key to keeping important information without blurring it. And the learnable mechanism allows the model to adjust the span size as needed. Expire-Span calculates a prediction based on context learned from data and influenced by its surrounding memories. As an example, if the model is training to perform a word prediction task, it’s possible to teach AI to remember rare words such as names, but forget very common, filler words such as the and of. By looking at previous, contextual content, it predicts if something can be forgotten or not. By learning from mistakes — over time — Expire-Span figures out what information is important.”
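The per-memory expiration values described above could be sketched in Python as follows. This is a simplified illustration under stated assumptions, not Facebook's implementation: each hidden state's expiration value is assumed to be a sigmoid of a linear score, scaled by a maximum span, and a memory is dropped once it has fully faded. The class and field names, and the hand-set weights standing in for learned ones, are all hypothetical.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Memory:
    step: int            # time step at which the hidden state was stored
    hidden: list[float]  # the hidden state itself
    expiration: float    # predicted number of steps to keep it


@dataclass
class ExpiringMemory:
    """Hypothetical buffer that gives each hidden state an expiration value
    and prunes memories once they have fully faded."""
    weights: list[float]  # assumed score weights; learned in a real model
    bias: float
    max_span: float       # upper bound on how long any memory can be kept
    ramp: float           # steps over which an expired memory fades out
    memories: list[Memory] = field(default_factory=list)

    def predict_expiration(self, hidden: list[float]) -> float:
        # Assumed form: max_span * sigmoid(w . h + b), so the value lies in (0, max_span).
        score = sum(w * x for w, x in zip(self.weights, hidden)) + self.bias
        return self.max_span / (1.0 + math.exp(-score))

    def add(self, step: int, hidden: list[float]) -> None:
        self.memories.append(Memory(step, hidden, self.predict_expiration(hidden)))
        # Keep only memories that have not fully faded: age < expiration + ramp.
        self.memories = [
            m for m in self.memories if step - m.step < m.expiration + self.ramp
        ]


# Toy hidden states: [1, 0] plays the role of a rare word such as a name,
# [0, 1] the role of a filler word such as "the".
buf = ExpiringMemory(weights=[4.0, -4.0], bias=0.0, max_span=100.0, ramp=2.0)
buf.add(0, [1.0, 0.0])
for t in range(1, 10):
    buf.add(t, [0.0, 1.0])
print([m.step for m in buf.memories])  # [0, 6, 7, 8, 9]
```

Here the "rare word" stored at step 0 gets an expiration value of roughly 98 steps and survives, while each filler word gets roughly 1.8 steps and is pruned a few steps later, so only the most recent filler memories remain. In a trained model the weights would be learned from data, and the fading weight would also scale how much attention each memory receives; this sketch only shows prediction and pruning.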

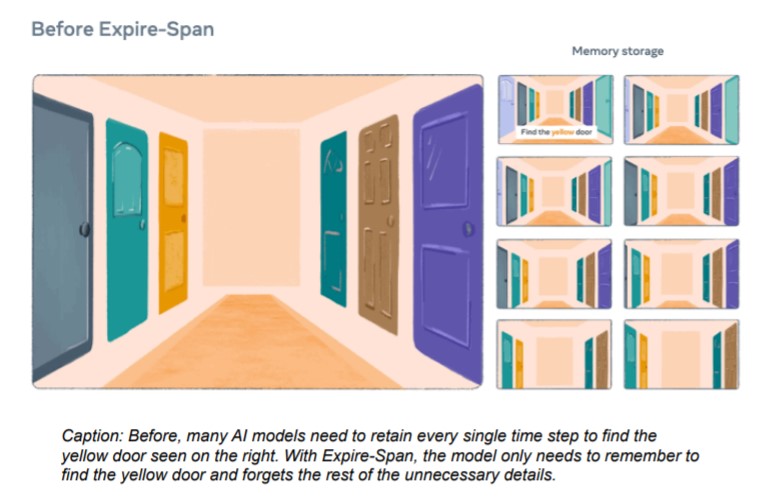

As an example, Facebook tasked an AI agent with finding a yellow door within a picture of several different-colored doors. Whereas older AI models would remember every time step involved in searching for the correct door (shown on the right), a model that uses Expire-Span remembers only the first frame, which contains the task description.

Facebook said Expire-Span improves efficiency for AI in several long-context tasks across language modeling, reinforcement learning, object collision and algorithmic problems. Moreover, it can scale to tens of thousands of pieces of information while retaining fewer than a thousand of them, yet still achieve stronger performance with greater efficiency than older AI models that don't use Expire-Span.

Constellation Research Inc. analyst Holger Mueller said another possible benefit of Expire-Span is that it could teach AI to forget certain things that might be considered too private, such as personal information.

“Making AI models efficient and faster is a key challenge in advancing AI, and forgetting information that is not used or less relevant is one way to do this,” Mueller added. “Facebook’s Expire-Span is a very interesting approach and it will be interesting to see how the company employs it in Facebook itself.”

The researchers said that more broadly, Expire-Span is just one aspect of creating more humanlike AI systems. Human memories come in many different forms. For instance, semantic memory serves to store general, factual information. Facebook said it’s studying how to create AI that can differentiate between different types of memories, with the goal of creating systems that can learn much faster than is possible today.


Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.