One of the world’s fastest supercomputers designed specifically to handle artificial intelligence workloads came online today at the National Energy Research Scientific Computing Center based at the Lawrence Berkeley National Lab in California.

The Perlmutter system, according to Nvidia Corp., whose graphics chips it uses in large numbers, is the “fastest on the planet” when it comes to the 16- and 32-bit mixed-precision math used by AI applications. It will be tasked with tackling some of the most difficult science challenges in astrophysics and climate science, such as creating a 3D map of the universe and probing subatomic interactions for green energy sources, Nvidia said.

The system is a Hewlett Packard Enterprise Co.-built Cray supercomputer that boasts some serious processing power. It’s powered by a whopping 6,159 Nvidia A100 Tensor Core graphics processing units, the most advanced GPUs Nvidia has built to date.

That, Nvidia said, makes Perlmutter the largest A100-powered system in the world, capable of delivering almost 4 exaflops of AI performance (an exaflop is one quintillion floating-point operations per second). “We’re in the exascale era of AI,” Dion Harris, a senior product marketing manager at Nvidia focused on accelerated computing for HPC and AI, said in a press briefing.
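As a rough check, the “almost 4 exaflops” figure can be reproduced from per-GPU peak rates. This back-of-envelope sketch assumes Nvidia multiplied the GPU count by the A100’s published peak FP16 Tensor Core rate with structured sparsity, 624 teraflops per GPU; the company did not say which per-GPU rate it used.

$$
6{,}159 \text{ GPUs} \times 624 \times 10^{12}\ \frac{\text{FLOP}}{\text{s}} \approx 3.84 \times 10^{18}\ \frac{\text{FLOP}}{\text{s}} \approx 3.8 \text{ exaflops}
$$

Using the dense (non-sparse) FP16 peak of 312 teraflops per GPU instead would halve the total to roughly 1.9 exaflops, so the headline number depends on that assumption.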

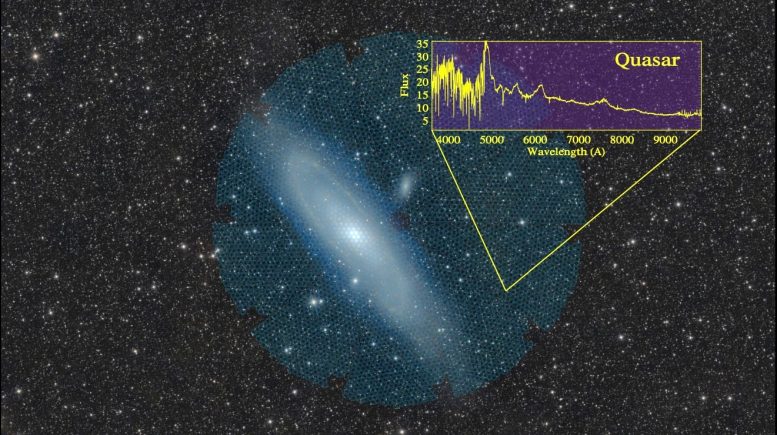

In a blog post, Harris said researchers will use Perlmutter to assemble the largest 3D map of the universe ever made by processing data from the Dark Energy Spectroscopic Instrument. DESI, as it’s known, can capture light from up to 5,000 galaxies in a single exposure.

The idea is that by building a 3D map of the universe, scientists will be able to learn more about “dark energy,” the mysterious force thought to be responsible for the accelerating expansion of the universe. The supercomputer is fittingly named after astrophysicist Saul Perlmutter, who shared the 2011 Nobel Prize in Physics for work that led to the discovery of dark energy.

Harris said Perlmutter’s processing power will be used to analyze dozens of exposures from DESI each night, helping astronomers decide which part of the sky to point the instrument at the next night.

“Preparing a year’s worth of the data for publication would take weeks or months on prior systems, but Perlmutter should help them accomplish the task in as little as a few days,” Harris said.

That’s just one task Perlmutter will be used for. NERSC is planning to make its new supercomputer available to more than 7,000 researchers around the world to advance projects in dozens of other scientific fields. One interesting area is materials science, where researchers want to discover and understand the atomic interactions that can help to create better batteries and new biofuels.

Simulating atomic interactions is an incredibly tough challenge even for conventional supercomputers, Harris said.

“Traditional supercomputers can barely handle the math required to generate simulations of a few atoms over a few nanoseconds with programs such as Quantum Espresso,” he explained. “But by combining their highly accurate simulations with machine learning, scientists can study more atoms over longer stretches of time.”

“Perlmutter’s A100 Tensor Cores are uniquely suited to help with this, as they’re able to accelerate both the double-precision floating-point math for simulations and the mixed-precision calculations required for deep learning,” Harris said.

Wahid Bhimji, acting lead for data and analytics services at NERSC, said AI for science is a huge growth area and that proofs of concept are moving rapidly into production use cases in fields like particle physics and bioenergy.

“People are exploring larger and larger neural-network models and there’s a demand for access to more powerful resources, so Perlmutter with its A100 GPUs, all-flash file system and streaming data capabilities is well timed to meet this need for AI,” he added.

NERSC said researchers who believe they have an interesting challenge that Perlmutter can help to crack can submit a request to use the supercomputer.

Perlmutter is up and running now and will get even more processing power with a “second phase,” scheduled for later this year, that will add more compute nodes.

Nvidia has played a big part in the creation of many of the world’s most powerful supercomputers. Other systems that use Nvidia’s A100 GPUs include the new Hawk system at the High-Performance Computing Center Stuttgart in Germany, the Selene supercomputer that’s used by the Argonne National Laboratory to research ways to combat COVID-19, and another AI-focused supercomputing machine called Leonardo that’s installed at the Italian inter-university consortium CINECA’s research center.

With reporting from Robert Hof

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.