INFRA

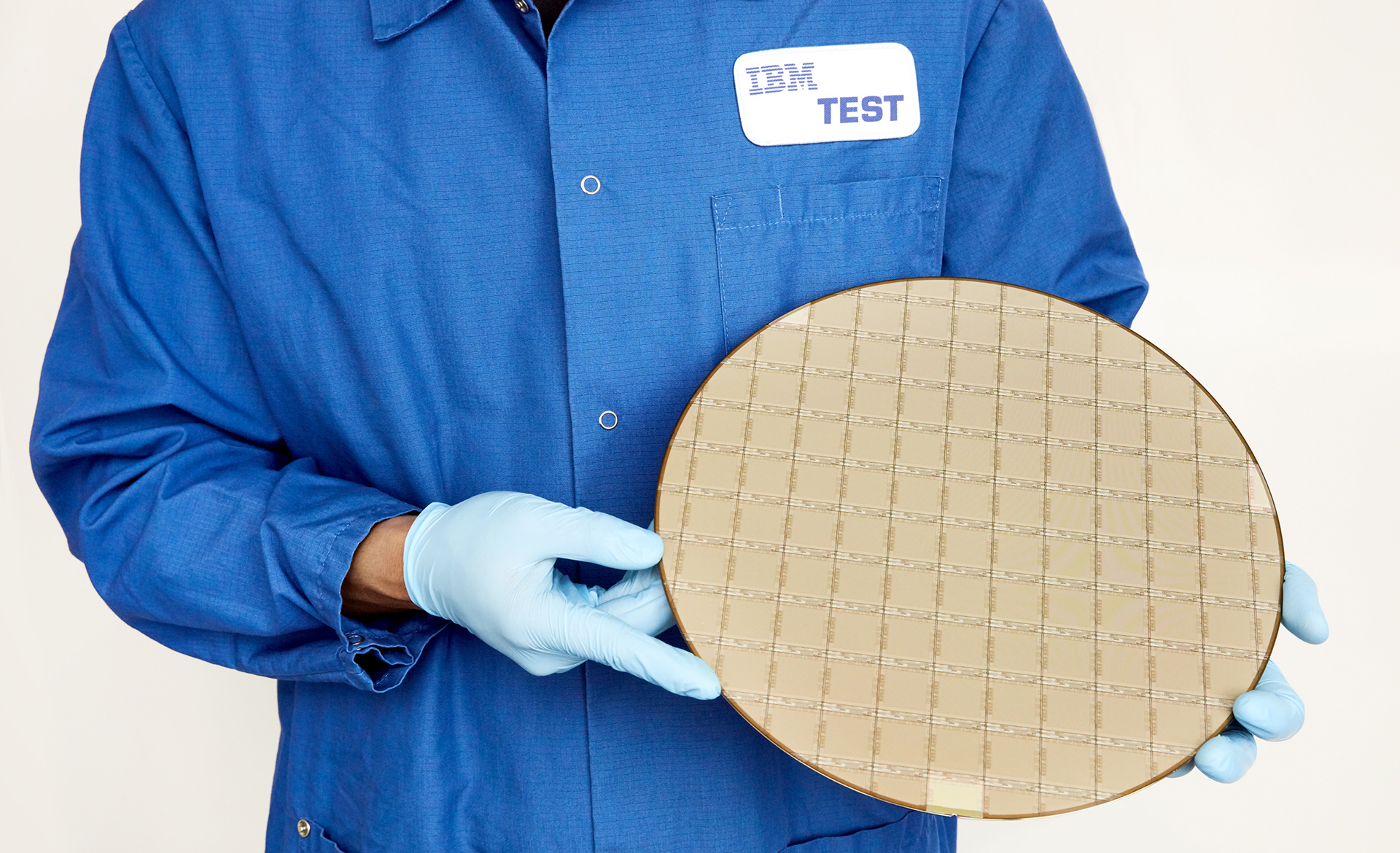

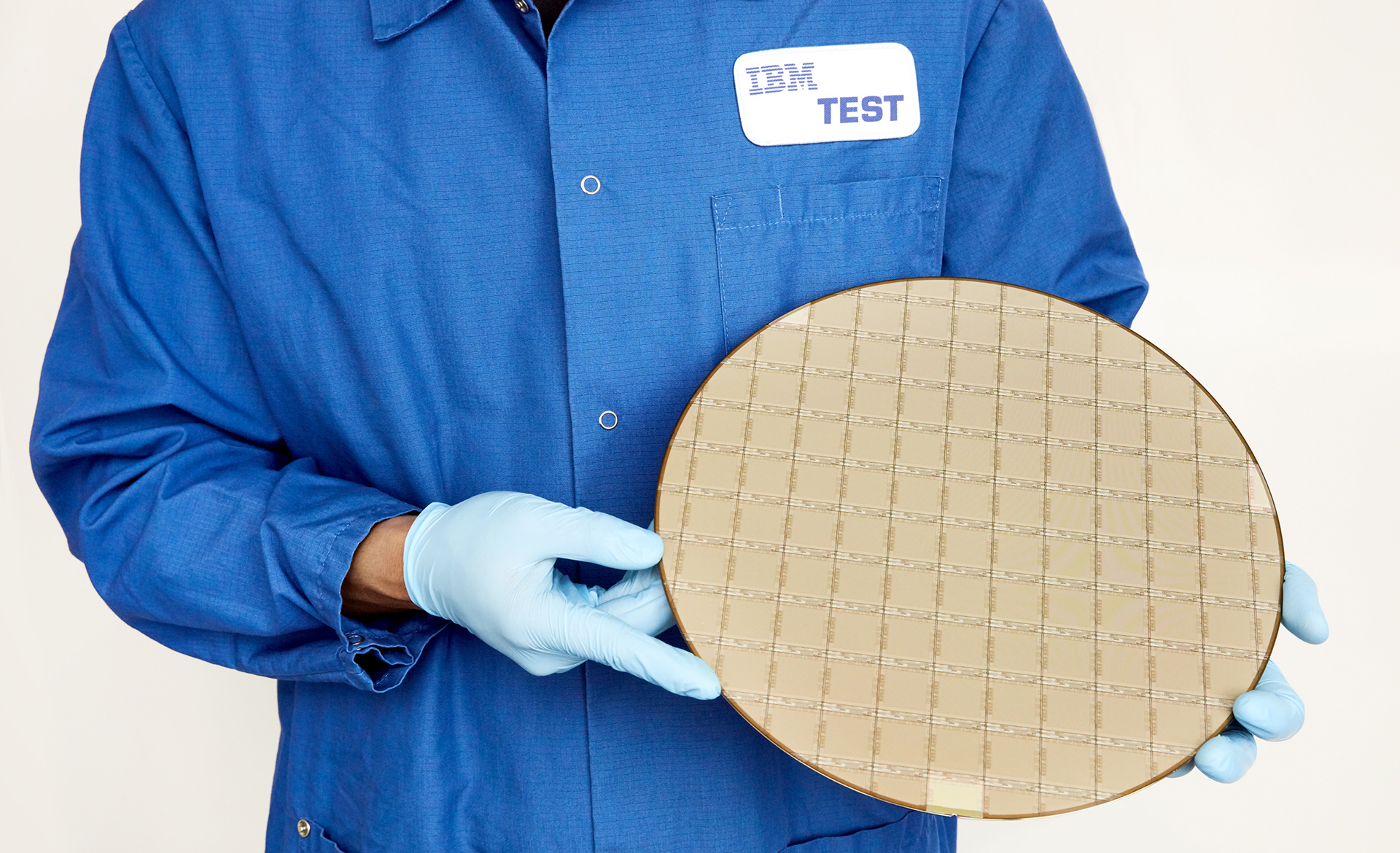

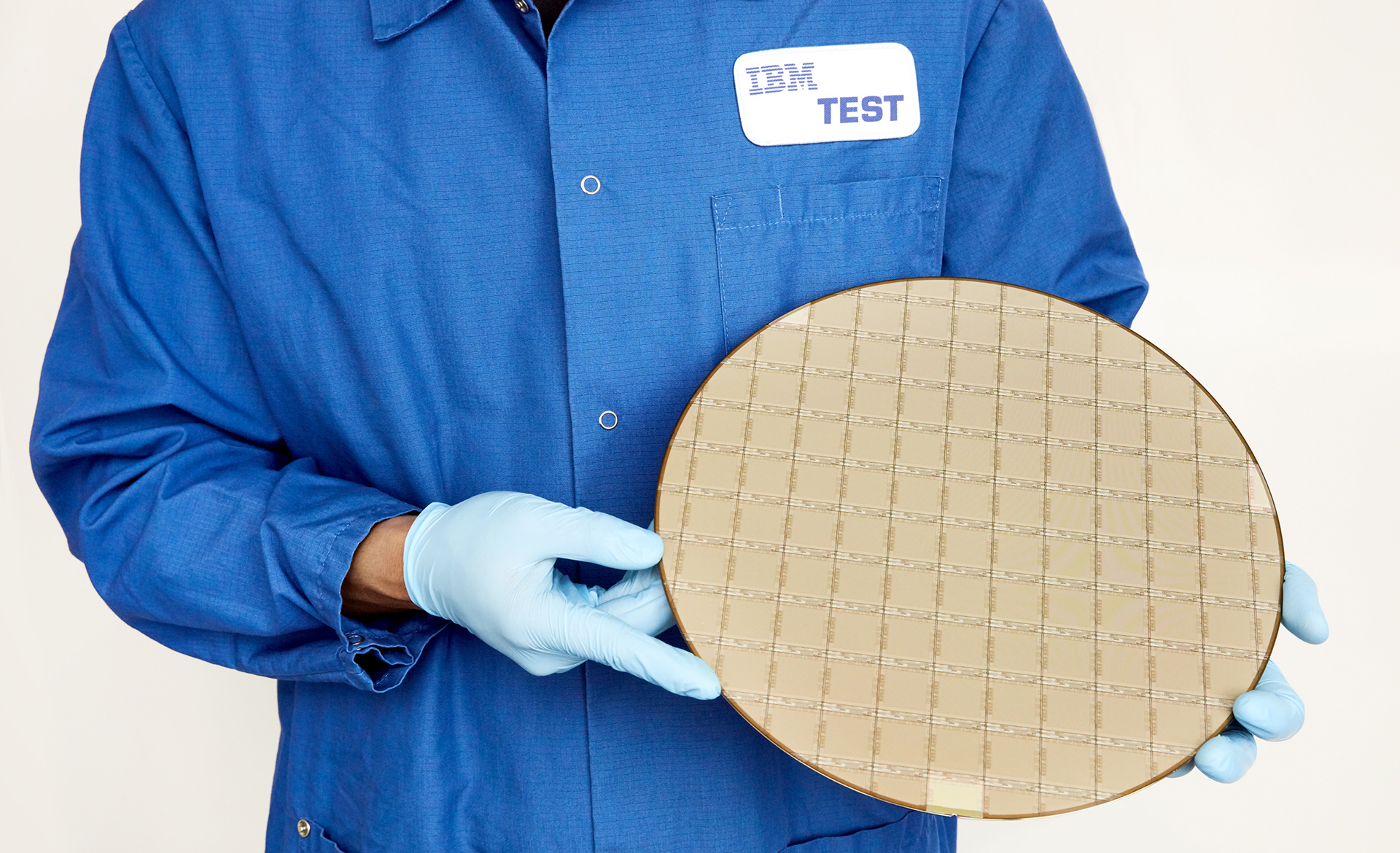

IBM Corp. showed off a new chip at the Hot Chips event today that can perform deep learning predictions on enterprise workloads in real time to address fraud.

The new Telum chip is IBM’s first processor that features on-chip acceleration for artificial intelligence inferencing, or the process of making predictions on data, while transactions are taking place. It’s designed to power a new generation of mainframes used to run banking, finance, trading and insurance workloads, plus customer interactions, the company said.

IBM added that Telum has been under development for three years and that its first Telum-based systems will launch in 2022.

Telum’s blueprint enables applications to run efficiently in close proximity to where the data they use resides. That eliminates the need to devote significant amounts of resources to memory and data movement to handle inferencing in real time.

IBM said that by putting AI acceleration closer to the data, enterprises will be able to conduct high-volume inferencing in real time on sensitive transactions without resorting to off-platform AI tools that can hurt performance. Customers will still be able to build and train AI models off-platform, then deploy the finished articles on IBM Telum systems for real-time workload analysis.

IBM said the key advantage is that customers will be able to shift from their current focus on fraud detection toward fraud prevention without affecting service-level agreements.

Analyst Patrick Moorhead of Moor Insights & Strategy wrote in Forbes that there are two scenarios where having inference embedded directly into the workload can have a transformative effect.

“The first is where AI is used on business data to derive insights such as fraud detection on credit card transactions, customer behavior prediction, or supply chain optimization,” Moorhead explained.

That will enable fraudulent transactions to be detected before they complete, he wrote. That isn’t possible with off-platform AI systems, he said, because shifting data outside of the system inevitably means coming up against network delays that would lead to unacceptably slow transaction times.

“Off platform, you literally need to move the data from Z to another platform,” he said. “Low latency is needed to score every transaction consistently. With spikes in latency, some transactions will go unchecked, and some customers only achieve 70% of the transactions leaving 30% of transactions unprotected.”
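
To make Moorhead’s point concrete, the sketch below models fraud scoring as a race against an authorization deadline: any transaction whose score arrives after the deadline is assumed to go through unscored. The latency samples, the 5 ms deadline and the `scoring_coverage` function are hypothetical illustrations, not IBM or Moor Insights figures; the off-platform samples were simply chosen so that occasional latency spikes reproduce the 70% coverage mentioned in the quote.

```python
from typing import Sequence


def scoring_coverage(latencies_ms: Sequence[float], deadline_ms: float) -> float:
    """Return the fraction of transactions whose fraud score arrived in time.

    Assumption: a transaction whose scoring latency exceeds the deadline
    proceeds without a score, i.e. it is left unprotected.
    """
    if not latencies_ms:
        raise ValueError("need at least one latency sample")
    on_time = sum(1 for latency in latencies_ms if latency <= deadline_ms)
    return on_time / len(latencies_ms)


# Hypothetical latency samples in milliseconds: a steady on-chip path
# versus an off-platform path with occasional network-induced spikes.
on_chip = [0.6, 0.7, 0.6, 0.8, 0.7, 0.6, 0.7, 0.9, 0.6, 0.7]
off_platform = [3.0, 4.0, 25.0, 3.5, 30.0, 4.5, 3.0, 40.0, 3.8, 4.2]

DEADLINE_MS = 5.0  # hypothetical scoring budget within authorization

print(f"on-chip coverage:      {scoring_coverage(on_chip, DEADLINE_MS):.0%}")
print(f"off-platform coverage: {scoring_coverage(off_platform, DEADLINE_MS):.0%}")
```

Note that the average off-platform latency matters less than its tail: three slow outliers out of ten are enough to leave 30% of transactions unchecked.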

IBM said it has already partnered with an unnamed international bank on exactly this scenario, enabling it to detect fraud as credit card transaction authorization is being processed. It said the client is aiming to be able to attain submillisecond response times while maintaining the scale it needs to process up to 100,000 transactions per second, which would be nearly 10 times what it can achieve using its existing transactional infrastructure.
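
The scale IBM describes can be sanity-checked with Little’s law, which says the average number of requests in flight equals throughput multiplied by the time each request spends in the system. This rough sketch assumes 1 ms as the upper bound implied by “submillisecond” and reads “nearly 10 times” as a 10x ratio; `in_flight` is a hypothetical helper, and the result is queueing arithmetic, not a description of how Telum actually schedules inference.

```python
def in_flight(throughput_tps: float, latency_s: float) -> float:
    """Little's law: average concurrency L = arrival rate (lambda) * time in system (W)."""
    return throughput_tps * latency_s


TARGET_TPS = 100_000  # target throughput cited by IBM
LATENCY_S = 0.001     # assumption: "submillisecond" means under 1 ms
SPEEDUP = 10          # assumption: "nearly 10 times" read as 10x

# Upper bound on transactions being scored at any moment.
print(f"max in flight: about {in_flight(TARGET_TPS, LATENCY_S):.0f}")
# Implied throughput of the existing infrastructure.
print(f"existing infrastructure: about {TARGET_TPS / SPEEDUP:,.0f} TPS")
```

In other words, the target works out to roughly 100 transactions being scored concurrently at most, against an existing baseline on the order of 10,000 transactions per second.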

“The client wants consistent and reliable inference response times, with low millisecond latency to examine every transaction for fraud,” IBM engineers Christian Jacobi and Elpida Tzortzatos explained in a blog post. “Telum is designed to help meet such challenging requirements, specifically of running combined transactional and AI workloads at scale.”

The other scenario where inference on chip will have benefits is when using AI to make infrastructure more intelligent, for example, workload placement, anomaly detection for security, or database query plans based on AI models, Moorhead said.

IBM said the Telum chip is built on Samsung Electronics Ltd.’s seven-nanometer process and contains eight processor cores with a deep, superscalar, out-of-order instruction pipeline running at a clock frequency of more than 5 gigahertz. The chip is optimized for the demands of heterogeneous enterprise-class workloads.

Telum also boasts a completely redesigned cache and chip-interconnection infrastructure that provides 32 megabytes of cache per core and can scale up to 32 Telum chips, IBM said.
