AI

Cerebras Systems Inc. today announced that its CS-2 hardware system is now capable of training neural networks with up to 20 billion parameters, which the startup says represents an industry record.

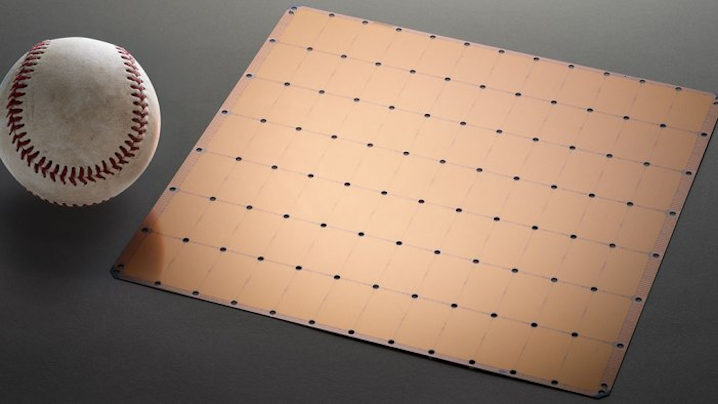

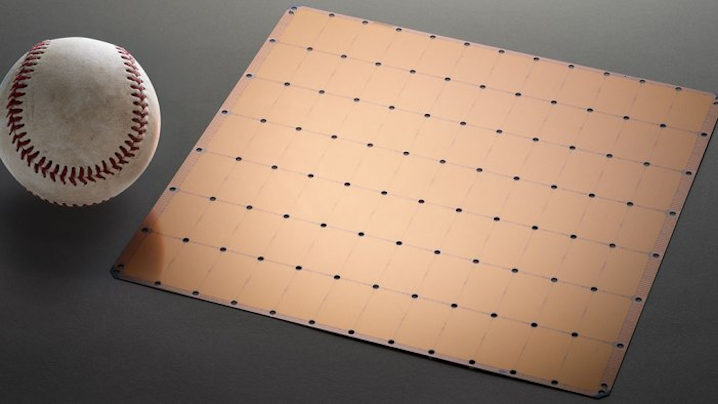

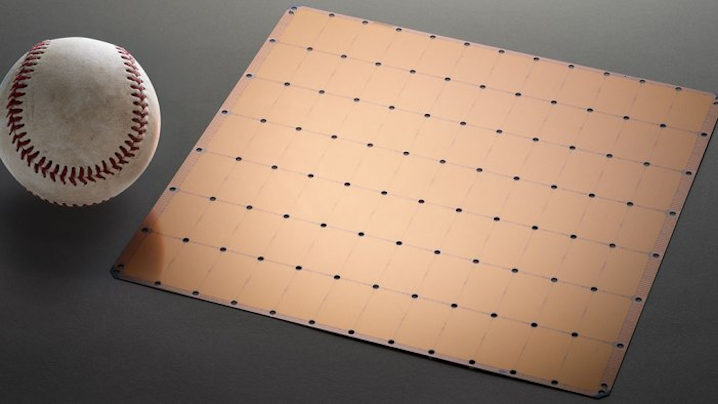

The CS-2 is a data center appliance powered by Cerebras’ WSE-2 chip. The WSE-2 (pictured) features a so-called wafer-scale architecture optimized for AI workloads and includes 2.6 trillion transistors, or 2.55 trillion more than the most advanced graphics processing unit on the market. Cerebras says the chip is the world’s fastest AI processor.

The startup’s CS-2 system combines a single WSE-2 chip with cooling equipment, networking hardware and other supporting components. The system makes it easier to deploy the WSE-2 by removing the need for customers to install the necessary supporting components on their own.

One of the most advanced neural networks in the open-source ecosystem is a natural language processing model called GPT-NeoX. It features 20 billion parameters, the settings that determine how an AI model processes data. Training such a complex neural network involves so many computations that the task can usually only be performed using a large number of GPUs.

Cerebras said today that its customers can train GPT-NeoX using just a single CS-2 appliance. According to the startup, it’s now the only market player that offers the ability to train a neural network with up to 20 billion parameters using one device. The CS-2 can also be used to train other advanced natural language processing models such as GPT-3XL and GPT-J, which feature 1.3 billion and 6 billion parameters, respectively.

The CS-2’s ability to perform a task that usually requires multiple GPUs is facilitated by its onboard WSE-2 chip. According to Cerebras, the WSE-2’s 2.6 trillion transistors are organized into 850,000 cores, which is 100 times the number of cores found in the fastest GPU on the market. There are also 40 gigabytes of onboard memory for storing the neural network that the chip is running and the data being processed.

The chip’s memory pool can store many types of neural networks. However, some AI models with upwards of a billion parameters require more than the 40 gigabytes of onboard capacity that the WSE-2 includes.
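A rough back-of-the-envelope calculation shows why models in this size range can outgrow 40 gigabytes. The sketch below is illustrative only: the bytes-per-parameter figures are assumptions not stated by Cerebras (2 bytes for 16-bit weights alone, and 16 bytes for a commonly cited mixed-precision Adam training setup that also holds gradients and optimizer state), and the function name `footprint_gb` is hypothetical.

```python
GB = 10**9  # decimal gigabytes; an assumption, the article does not specify the unit

ONBOARD_GB = 40  # WSE-2 onboard memory, per the article


def footprint_gb(num_params, bytes_per_param):
    """Memory in GB needed to hold num_params values of bytes_per_param each."""
    return num_params * bytes_per_param / GB


# Parameter counts as given in the article.
MODELS = {
    "GPT-3XL": 1_300_000_000,
    "GPT-J": 6_000_000_000,
    "GPT-NeoX": 20_000_000_000,
}

# Assumed storage costs per parameter (not from the article).
SCENARIOS = {
    "16-bit weights only": 2,
    "weights + gradients + Adam state": 16,
}

if __name__ == "__main__":
    for name, params in MODELS.items():
        for label, nbytes in SCENARIOS.items():
            gb = footprint_gb(params, nbytes)
            verdict = "within" if gb <= ONBOARD_GB else "exceeds"
            print(f"{name:9s} {label:34s} {gb:7.1f} GB ({verdict} {ONBOARD_GB} GB)")
```

Under these assumptions, the 16-bit weights of a 20-billion-parameter model alone would fill the 40 gigabytes, before counting gradients, optimizer state or the data being processed.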

To accommodate such advanced neural networks, Cerebras has developed a technology called Weight Streaming. The technology makes it possible to attach up to 2.4 petabytes of external memory to the WSE-2, which enables the chip to run more complex AI models than could be supported otherwise. According to the startup, Weight Streaming in theory makes it possible to run AI models with upwards of one trillion parameters.

“In NLP, bigger models are shown to be more accurate,” said Cerebras co-founder and Chief Executive Officer Andrew Feldman. “But traditionally, only a very select few companies had the resources and expertise necessary to do the painstaking work of breaking up these large models and spreading them across hundreds or thousands of graphics processing units.”

Cerebras says its CS-2 system’s newly detailed ability to train AI models with up to 20 billion parameters will ease customers’ machine learning projects in several ways.

Training a neural network with 20 billion parameters typically requires using a large number of servers equipped with GPUs. If the servers are being deployed on-premises, setting them up can take a significant amount of work. Provisioning GPU-equipped compute instances in the public cloud is faster, but the training process can still take months, according to Cerebras.

Training an AI model with multiple GPUs is time-consuming because the task often requires a significant amount of custom code. Developers must implement software that distributes the computations involved in the AI training process across the GPUs. Moreover, the software must be extensively optimized to ensure that computations are carried out efficiently.
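To give a sense of the kind of bookkeeping involved, the following is a minimal sketch of one piece of that work: deciding which layers of a model go on which device. It is a simplified illustration, not how any particular framework or vendor does it. The function name `assign_layers`, the layer sizes and the per-device capacity are all hypothetical, and real systems must also handle communication between devices, scheduling and load balancing.

```python
def assign_layers(layer_gb, device_gb):
    """Greedily pack consecutive layers onto devices.

    layer_gb  -- memory needed by each layer, in GB (hypothetical values)
    device_gb -- memory available on each device, in GB
    Returns a list of devices, each a list of layer indices.
    """
    devices = [[]]
    used = 0
    for idx, size in enumerate(layer_gb):
        if size > device_gb:
            raise ValueError(f"layer {idx} needs {size} GB, more than one device holds")
        # Start a new device when the current one would overflow.
        if used + size > device_gb:
            devices.append([])
            used = 0
        devices[-1].append(idx)
        used += size
    return devices


if __name__ == "__main__":
    # Eight hypothetical layers of 10 GB each, on devices with 40 GB apiece.
    placement = assign_layers([10] * 8, 40)
    for device_id, layers in enumerate(placement):
        print(f"device {device_id}: layers {layers}")
```

Even this toy version has to handle layers that do not fit on any device; production code additionally has to move activations and gradients between devices at every training step, which is where much of the optimization effort goes.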

According to Cerebras, its CS-2 system eases developers’ work because it contains a single WSE-2 chip instead of multiple processors. Developers don’t have to write complex software to distribute computing tasks across multiple chips. The result, according to the startup, is that AI models can be trained more efficiently.

The startup has managed to incorporate 2.6 trillion transistors into a single chip by developing an interconnect technology that makes it possible to combine 84 smaller chips into a single system. It says its chips have been adopted by more than a dozen customers including enterprises and research institutions.

Cerebras has raised $720 million from investors and received a $4 billion valuation after its most recent funding round last November.
