Meta Platforms Inc. today unveiled an advanced “generative artificial intelligence system” that’s designed to help artists better showcase their creativity.

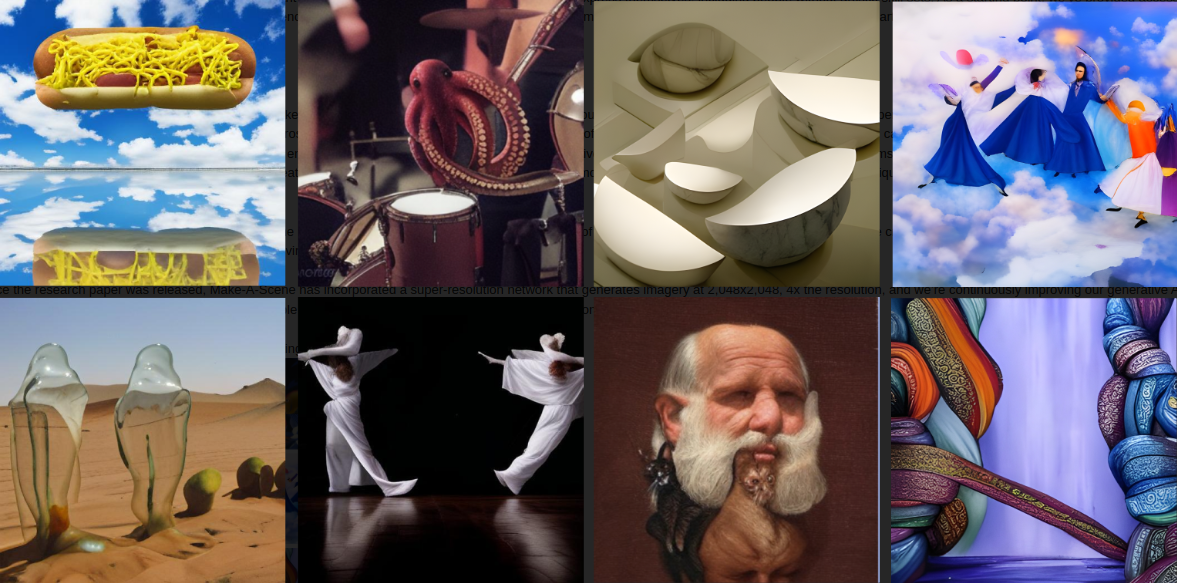

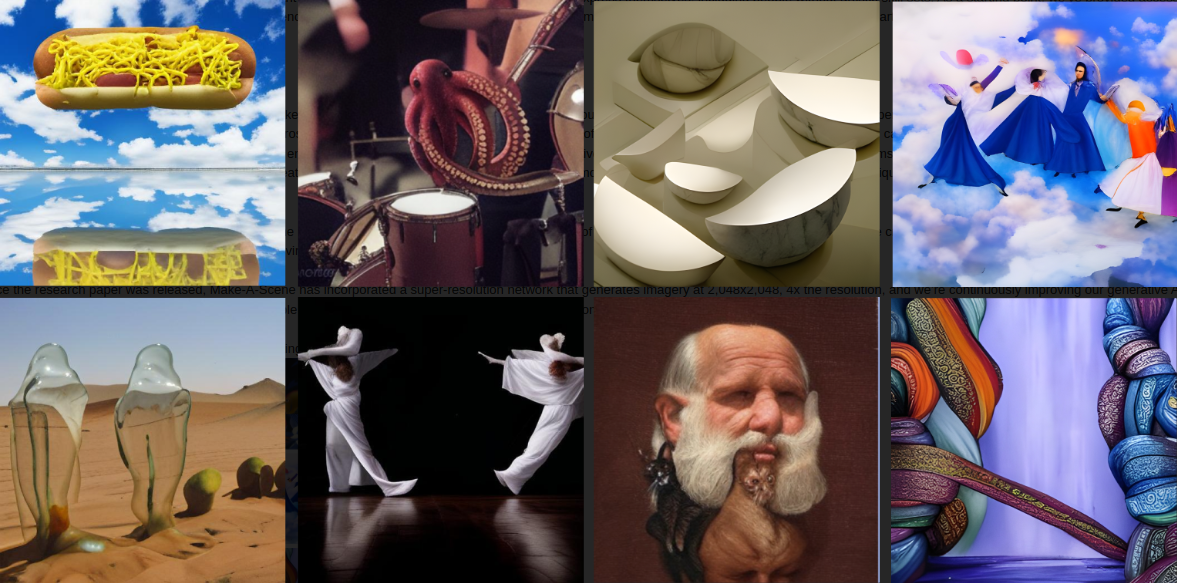

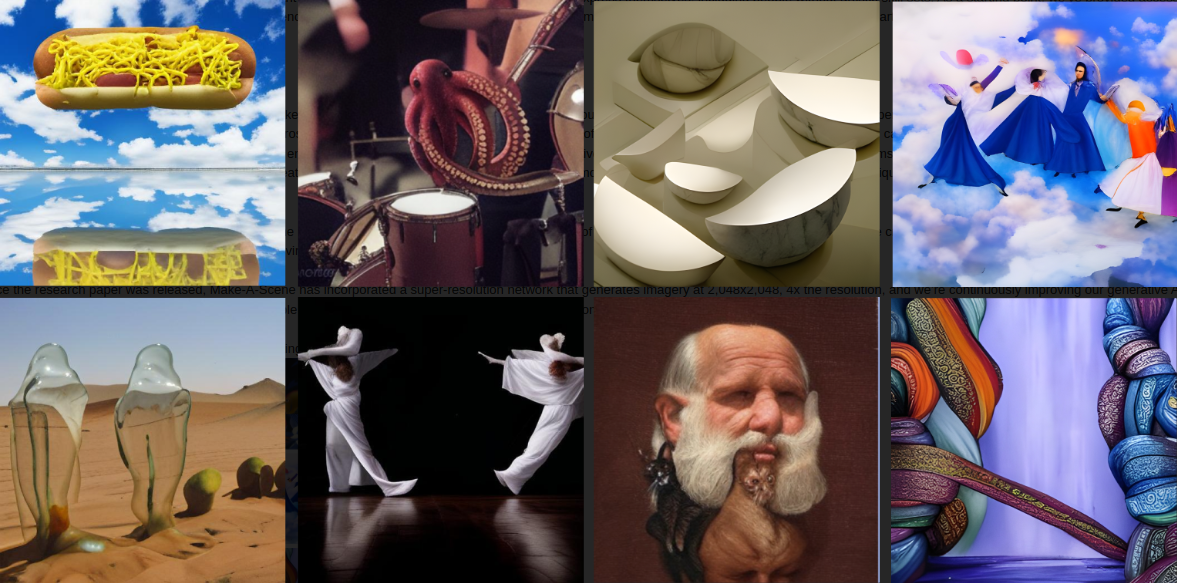

The system, called “Make-A-Scene,” is meant to demonstrate how AI has the potential to empower anyone to bring their imagination to life. The user can simply describe and illustrate their vision through a combination of text descriptions and freeform sketches, and the AI will come up with a stunning representation of it.

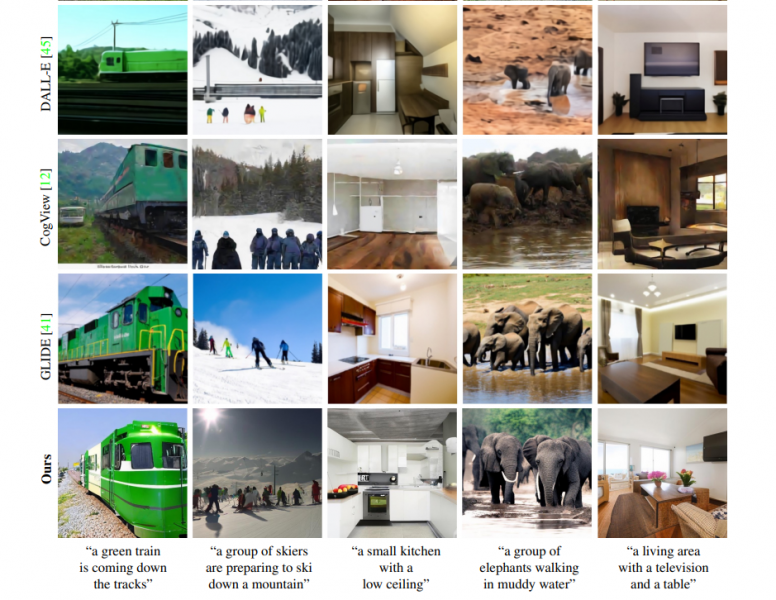

As the company explains in a blog post, a number of artists already use generative AI to augment their creativity. Examples include creating expressive avatars, animating children’s drawings, building virtual worlds in the metaverse and producing digital artworks from text descriptions alone. However, existing systems are still fairly basic and don’t give artists much control over their final output.

The problem is that they’re primarily text-based, so a prompt such as “a picture of a zebra riding a bike” can be interpreted in many ways. The AI might put the zebra on the left or right side of the image, or make it much bigger or smaller than the bicycle. The zebra could face the camera or be shown sideways. Much of the generative process is random.

“It’s not enough for an AI system to just generate content, though,” Meta AI’s research team explained. “To realize AI’s potential to push creative expression forward, people should be able to shape and control the content a system generates. It should be intuitive and easy to use so people can leverage whatever modes of expression work best for them, whether speech, text, gestures, eye movements, or even sketches, to bring their vision to life in whatever mediums work best for them, including audio, images, animations, video, and 3D.”

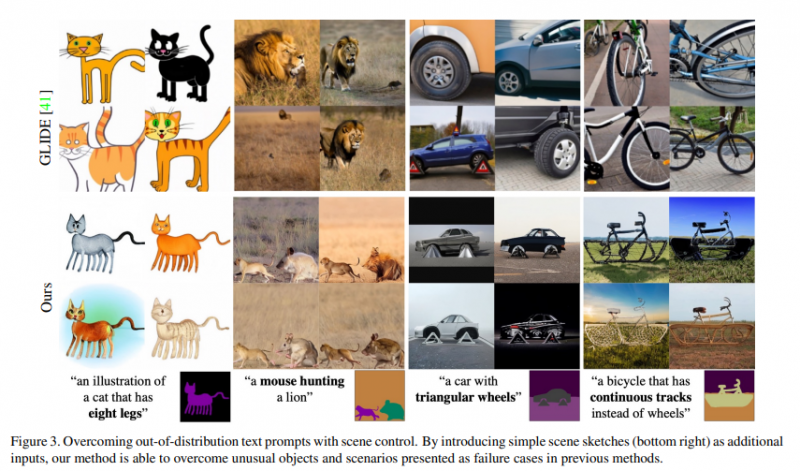

This is where Make-A-Scene comes in, allowing artists to use both text prompts and simple sketches to more accurately convey their vision. The system works like other generative AI models, learning the relationship between visuals and text by training on millions of example images.

Once trained, Make-A-Scene uses a novel intermediate representation to capture the scene layout depicted in a rough sketch, then focuses on filling in the details based on the text prompt. It has also been trained to focus on aspects of the imagery that are likely to matter most to the creator, such as animals, people or objects. The result is that users have far greater control over the image they ask the system to generate.

Make-A-Scene was put to the test by a number of well-known AI artists who have worked with generative AI before, including Sofia Crespo, Scott Eaton and Alexander Reben.

Crespo is a generative artist who focuses on the intersection of nature and technology. She used Make-A-Scene to render various artificial lifeforms that have never existed anywhere except in her own imagination. Her sketches and text prompts were fed into Make-A-Scene, which came up with various hybrid creatures, including a jellyfish shaped like a flower.

Crespo said the advantage of using freeform drawing is that she can iterate new ideas far faster than before. “It’s going to help move creativity a lot faster and help artists work with interfaces that are more intuitive,” she explained.

Meanwhile, Eaton, a “creative technologist” and contemporary artist, used Make-A-Scene to compose scenes deliberately while exploring variations using different text prompts, such as “skyscrapers sunken and decaying in the desert,” as a way of highlighting climate change.

“Most text-to-image models are very good at giving you some aspect of what you asked for, but without spatial control,” Eaton said. “If you want to create an AI-generated image with deliberate composition, this is a very powerful way to interact with the model.”

Meta said Make-A-Scene is part of a wider research project aimed at exploring multiple ways in which AI can empower creativity, such as bringing 2D sketches to life or using natural language to create 3D objects or even an entire virtual space.

“It could one day enable entirely new forms of AI-powered expression while putting creators and their vision at the center of the process — whether that’s an art director ideating on their next creative campaign, a social media influencer creating more personalized content, an author developing unique illustrations for their books and stories, or someone sharing a fun, unique greeting for a friend’s birthday,” Meta AI’s researchers said.

Meta Chief Executive Mark Zuckerberg said he’s jazzed about AI-generated art, particularly when it comes to his overriding focus, the metaverse. “Our research team built a prototype that lets you use a simple sketch alongside text to generate images,” he said. “Tools like this will be great for creators, especially as they build out immersive 3D worlds.”

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.