AI

Meta Platforms Inc., the parent company of Facebook, today said it’s open-sourcing a new piece of software that will allow other internet firms to prevent the spread of terrorist-related material, including violent and extremist images and videos, across the web.

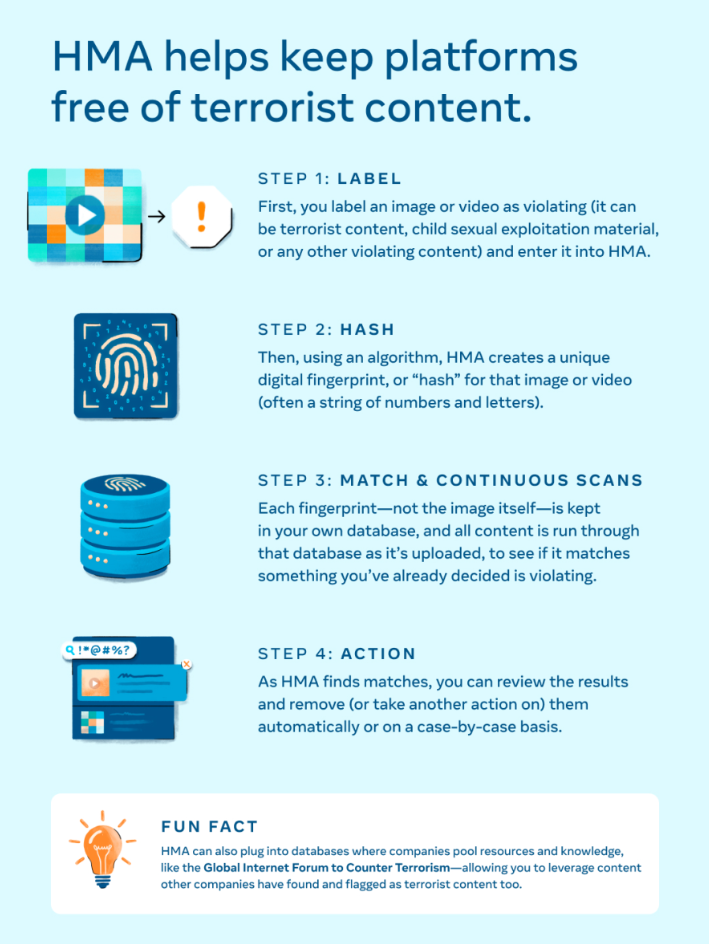

The software is known as the Hasher-Matcher-Actioner tool. It will be shared with other social media platforms and internet companies ahead of Meta’s year-long chairmanship of the Global Internet Forum to Counter Terrorism, which begins in January.

Meta was one of the co-founders of the GIFCT in 2017, alongside Microsoft Corp., Twitter Inc. and YouTube. The group has since evolved to become a nongovernmental organization focused on tackling terrorist and extremist content online.

According to Meta, the HMA tool will be made available to other internet firms for free as part of its efforts to prevent the spread of terrorist content online. That’s in line with Meta’s vow to boost content moderation on its own platforms.

In a blog post, Meta President of Global Affairs Nick Clegg said the company is committed to tackling terrorist content as part of a wider approach to protecting users from harmful content on its services. “We’re a pioneer in developing AI technology to remove hateful content at scale,” Clegg said. “We’ve learned over many years that if you run a social media operation at scale, you have to create rules and processes that are as transparent and evenly applied as possible.”

HMA improves on Meta’s existing open-source image- and video-matching software, and the company said it can be used against any content that violates its policies. The tool’s main purpose is to identify prohibited content quickly, almost as soon as it appears online, and then take it offline if need be. What’s more, it can do this across multiple platforms.

The tool is powered by artificial intelligence and relies on “hashes,” digital fingerprints computed from images and videos, to find duplicates posted elsewhere on the web. Once offending material has been found, the algorithm generates a string of letters and numbers specific to that piece of content, which can then be used to identify copies. That should make it much easier for companies to keep track of and remove terrorist-related content, Meta said.
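To make the hash, match and action steps described above more concrete, here is a minimal Python sketch. It is not HMA’s code or API: the toy “average hash,” the tiny 4x4 grayscale images, the distance threshold and every function name are assumptions chosen purely for illustration. Production systems rely on far more robust perceptual hashing algorithms.

```python
from typing import List

Image = List[List[int]]  # hypothetical stand-in: rows of grayscale pixels, 0-255


def average_hash(pixels: Image) -> int:
    """Hash step (toy version): one bit per pixel, set if brighter than the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


def matches(candidate: int, known_hashes: List[int], max_distance: int = 2) -> bool:
    """Match step: is the candidate close to any hash of known violating content?"""
    return any(hamming_distance(candidate, h) <= max_distance for h in known_hashes)


def moderate(pixels: Image, known_hashes: List[int]) -> str:
    """Action step: the labels returned here are illustrative, not HMA's."""
    return "flag_for_review" if matches(average_hash(pixels), known_hashes) else "allow"


# A checkerboard image stands in for a known piece of violating content.
original: Image = [
    [10, 200, 10, 200],
    [200, 10, 200, 10],
    [10, 200, 10, 200],
    [200, 10, 200, 10],
]
known_hashes = [average_hash(original)]

# A slightly brightened copy still produces the same fingerprint.
brightened_copy: Image = [[min(255, p + 5) for p in row] for row in original]

# An unrelated image (vertical stripes) produces a very different one.
unrelated: Image = [[200, 200, 10, 10] for _ in range(4)]

print(moderate(brightened_copy, known_hashes))  # flag_for_review
print(moderate(unrelated, known_hashes))        # allow
```

Because only the fingerprints are compared, a list of hashes could in principle be shared between platforms without sharing the underlying images or videos, which is the kind of cross-platform use the article describes.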

HMA is being made available as a free and open-source content moderation tool and will “help platforms identify copies of images or videos and take action against them en masse,” Clegg said.

The HMA tool is just one initiative being pursued by Meta to improve online safety and security. The company said it has spent more than $5 billion globally on such measures in the last year and has more than 40,000 employees working in this area. Within that group, hundreds are said to have experience in law enforcement, national security, counterterrorism intelligence and radicalization research.

“What we’re hoping to do is lift up our baseline best practices for the entire industry,” Dina Hussein, Meta’s counterterrorism and dangerous organizations policy lead, told ABC News. “As long as this kind of content exists in the world, it’ll manifest on the internet. And it’s only through collectively working together that we can really keep this content off the internet.”
