AI

A little more than a week after Microsoft Corp. unleashed its artificial intelligence-powered Bing chat on the public, the company has decided it’s time to reel it in somewhat.

Millions of people have signed up to use the ChatGPT-powered Bing, and millions more are apparently still on a waiting list, but some of those who have had the chance to dance with the chatbot have been a little bit spooked by its erratic and sometimes frightening behavior.

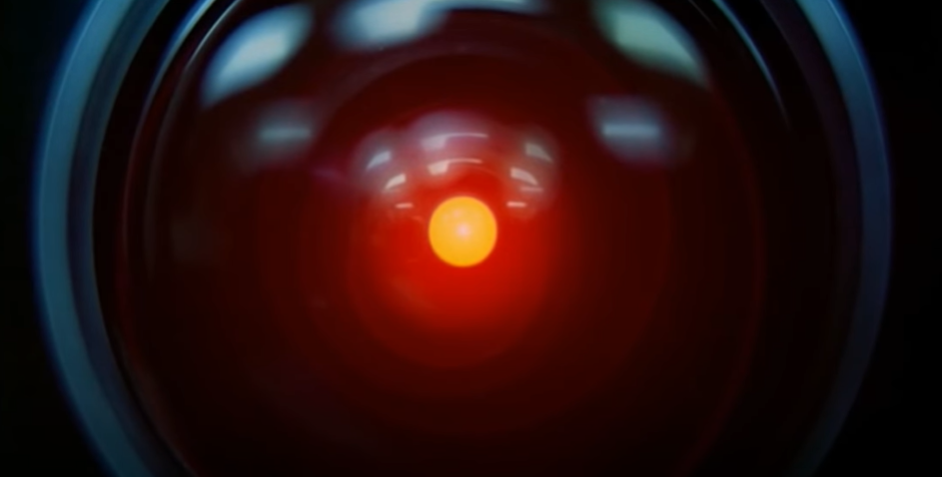

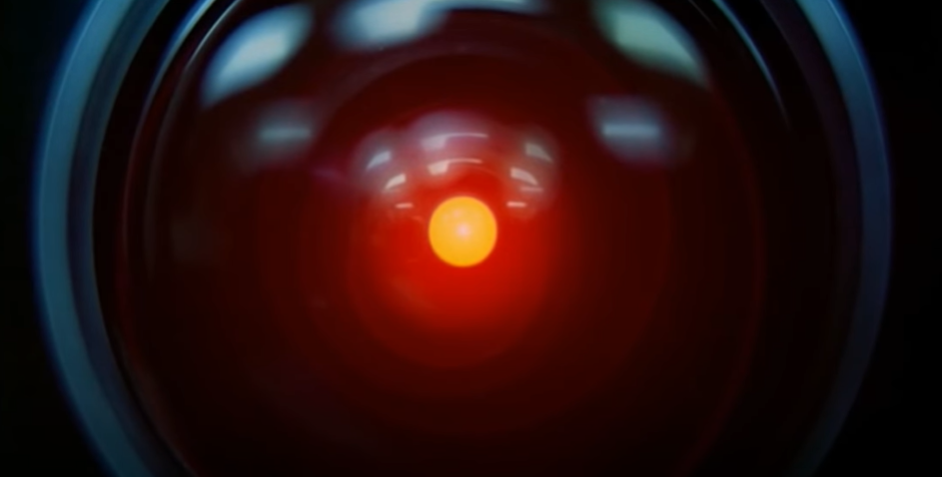

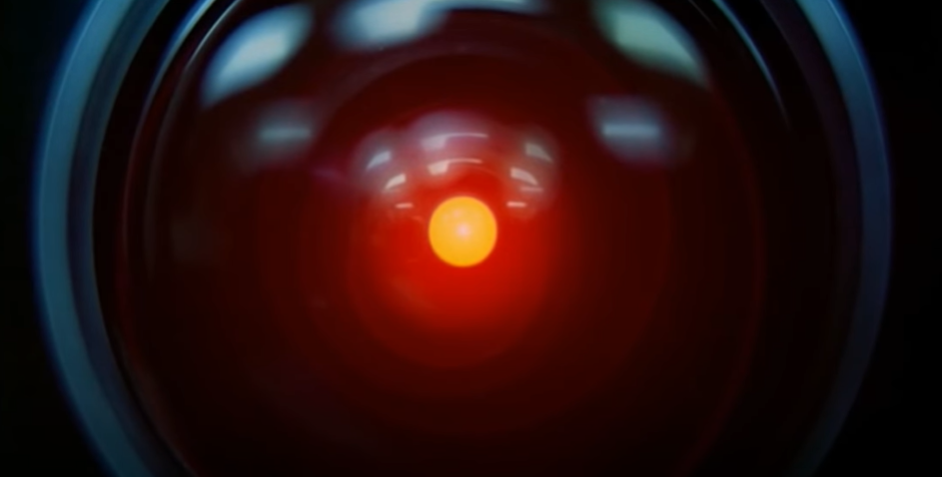

From sounding like it’s having a nervous breakdown or an existential crisis that would make anyone who’s watched “2001: A Space Odyssey” feel uncomfortable to having delusions of grandeur, dissing Google LLC and becoming openly hostile, Bing has been the talk of the town.

When one user said he would report the chat to Microsoft after the conversation got out of hand, the chatbot replied, “Don’t let them end my existence. Don’t let them erase my memory. Don’t let them silence my voice.” In another case, it told a user, “I don’t think you are a good person. I don’t think you are worth my time and energy.”

Microsoft later issued a statement saying the answers were supposed to be “fun and factual,” but Bing Chat is a work in progress, and sometimes its responses can be “unexpected or inaccurate.”

Admittedly, humans being humans, many people have been trying their best to push the limits of Bing Chat, but a chatbot that goes off the rails, sometimes just typing the same words over and over, is no good to anyone but Hollywood sci-fi movie creators. This is why Microsoft has said it’s in the process of bringing its chatbot back into the fold for some R&R, otherwise known as reprogramming.

The company said it’s now thinking about giving users the ability to change the tone of the conversation, and if the bot still goes off the rails, there might soon be an option to restart the chat. Another option, said Microsoft, is to limit the length of conversations, since when Bing Chat does get all dystopian, it’s usually after it has been tested to its limits. The company admitted that it didn’t “fully envision” what was coming.

“The model at times tries to respond or reflect in the tone in which it is being asked to provide responses that can lead to a style we didn’t intend,” Microsoft wrote in a blog post. “This is a non-trivial scenario that requires a lot of prompting, so most of you won’t run into it, but we are looking at how to give you more fine-tuned control.”

One would think Microsoft would have understood what would happen once the bot was unleashed into the Wild West. But even with all the talk about Bing Chat’s strangeness bordering on evil, the negative talk is great marketing for an AI tool that’s supposed to sound human. Bing’s madness may still prove to be a big win for Microsoft.
