BIG DATA

Google Cloud is looking to accelerate adoption of its big-data ecosystem with a host of new product innovations and partner offerings announced today at its third annual Google Data Cloud & AI Summit.

According to Google, the new capabilities are designed to help companies optimize the price-performance of their big data projects, embrace open data ecosystems and bring the magic of artificial intelligence and machine learning to existing data, while taking security to the next level.

Most of today’s announcements touch in some way on BigQuery, Google’s fully managed, serverless data warehouse. So it seems appropriate that Google is helping many of the service’s customers reduce their operating costs with new pricing editions and features around autoscaling and compressed storage billing.

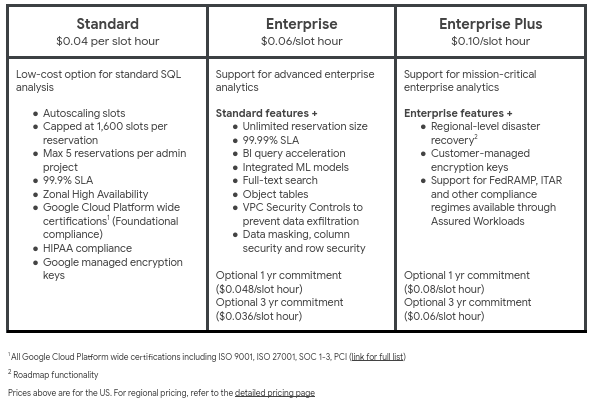

With BigQuery editions, users now have more flexibility to select the exact feature set they need for their specific workloads, Google executives Gerrit Kazmaier and Andi Gutmans explained in a blog post. Customers are now free to mix and match among the Standard, Enterprise and Enterprise Plus editions to achieve the optimal price/performance for predictable workloads, with single- or multiyear commitments available at reduced prices.

For unpredictable workloads, customers can instead choose to pay based on the amount of compute capacity used. “This is a unique new model … for spiky workloads,” Kazmaier, who’s vice president and general manager of data analytics, said in a press briefing.

Furthermore, users will be able to provision additional capacity, should they need it, in granular increments, helping them avoid overpaying for underused capacity, Google said.
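
To illustrate why granular increments matter for a spiky workload, here is a minimal sketch comparing capacity provisioned at the peak for every hour with capacity that scales each hour to the next increment above demand. All figures are hypothetical: the demand series, the increment size of 100 units and the price of 5 cents per unit-hour are assumptions for illustration, not Google’s published pricing, and the function names are invented for this example.

```python
def fixed_capacity_cost(demand, price_cents):
    """Cost in cents when capacity is held at the peak for every hour."""
    peak = max(demand)
    return peak * len(demand) * price_cents


def autoscaled_cost(demand, increment, price_cents):
    """Cost in cents when each hour is billed at demand rounded up
    to the next multiple of `increment`."""
    billed = [((d + increment - 1) // increment) * increment for d in demand]
    return sum(billed) * price_cents


# Hypothetical hourly demand for a spiky workload (capacity units).
hourly_demand = [120, 80, 950, 300, 60, 40]
INCREMENT = 100    # assumed scaling step, in capacity units
PRICE_CENTS = 5    # assumed price per unit-hour, in cents

fixed = fixed_capacity_cost(hourly_demand, PRICE_CENTS)
scaled = autoscaled_cost(hourly_demand, INCREMENT, PRICE_CENTS)
print(f"fixed at peak: {fixed} cents, autoscaled: {scaled} cents")
```

With these made-up numbers, one hour of high demand drives the fixed bill for the whole period, while the autoscaled bill tracks actual usage. The smaller the increment, the closer the bill gets to the capacity that was really consumed.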

BigQuery isn’t the only way for companies to reduce their big data costs, though. Google also reckons customers can maximize cost efficiency by moving from expensive legacy databases to its cloud-hosted AlloyDB service. However, many customers say they’re unable to do so because of regulatory or data sovereignty issues that prevent them from moving data into the cloud. It’s these issues that Google claims it can solve starting today.

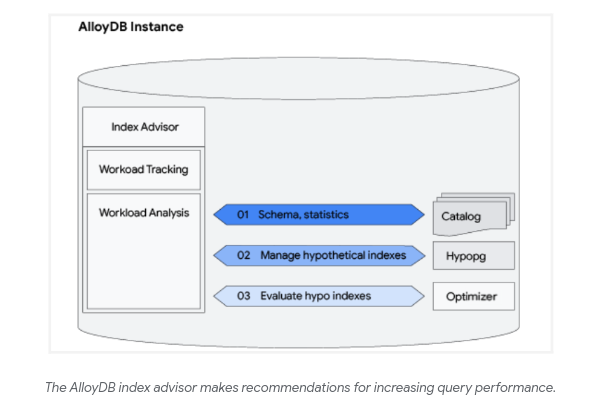

The company announced a technology preview of AlloyDB Omni, a downloadable edition of AlloyDB that can be run on-premises, at the network edge, on multiple cloud platforms and even on developer laptops, Google said. With AlloyDB Omni, customers get all the benefits of the standard AlloyDB database, such as high performance, PostgreSQL compatibility and Google Cloud support, at a much lower cost than legacy databases. According to Google’s internal tests, AlloyDB has shown itself to be twice as fast as standard PostgreSQL on transactional workloads, and up to 100 times faster with analytical queries.

If that wasn’t enough encouragement to think about migrating to AlloyDB, Google also introduced a new Database Migration Assessment tool that customers can use to understand how much effort will be required to shift their workloads.

“We want to meet customers where they need us,” said Gutmans, vice president and general manager of databases at Google Cloud. “We will be the single throat to choke for customers.”

When it comes to business intelligence, companies need to know they can fully trust their data. To ensure they can, Google announced a new service called Looker Modeler that allows users to define specific metrics about their business via Looker’s semantic modeling layer. The idea is that Looker Modeler will become the single source of truth for all database metrics, which can then be shared with any BI tool.

On the data privacy side, Google announced BigQuery data clean rooms, a new service designed to help organizations share and match datasets across different companies while ensuring that data remains secure. It’ll be available in the third quarter, when users will be able to upload first-party data and combine it with third-party datasets for marketing purposes.

Google’s vision for data clean rooms is also being expanded through partnerships with firms such as Habu Inc., which will integrate with BigQuery to support privacy-safe data orchestration, and LiveRamp Holdings Inc., which will enable privacy-centric data collaboration and identity resolution in BigQuery.

The final updates pertain to BigQuery ML, a service that makes it possible to use machine learning via existing SQL tools and skills. Google wants to bring machine learning even closer to users’ data, and to that end BigQuery is getting new capabilities that will make it possible to import models built with frameworks such as PyTorch, host remote models on Google’s Vertex AI service, and run pretrained models from Vertex AI.

In addition, Google announced a number of machine learning-focused integrations for BigQuery. For instance, BigQuery now integrates with DataRobot Inc.’s machine learning operations platform so that customers can use notebook environments to build ML models and deploy their predictions within BigQuery. It’s all about helping developers experiment with machine learning more rapidly, Google explained.

A second partnership involves Neo4j Inc., creator of the Neo4j graph database management platform. Now, users can extend SQL analysis with graph-native data science and machine learning, Google said. Finally, Google is integrating BigQuery, LookML and Google Sheets with ThoughtSpot Inc.’s BI analytics platform to power AI-driven natural language search and make it easier for users to extract insights from business data.

With reporting from Robert Hof
