Researchers from Meta Platforms Inc.’s artificial intelligence research unit today announced two key developments, in adaptive skill coordination and visual cortex replication, that they say will allow AI-powered robots to operate in the real world without being trained on any real-world data.

The developments are said to be major advances toward the creation of general-purpose “embodied AI agents” capable of interacting with the real world without human intervention. The first announcement concerns the creation of an artificial visual cortex, called VC-1, trained on the Ego4D dataset, which consists of thousands of videos of people performing everyday tasks.

As the researchers explained in a blog post, the visual cortex is the region of the brain that enables organisms to convert vision into movement. An artificial visual cortex is therefore a key requirement for any robot that needs to perform tasks based on what it sees in front of it. Because VC-1 needs to work well across a diverse set of sensorimotor tasks in a wide range of environments, the Ego4D dataset proved especially useful: it contains thousands of hours of wearable camera video of research participants around the world performing daily activities such as cooking, cleaning, sports and crafts.

“Biological organisms have one general-purpose visual cortex, and that is what we seek for embodied agents,” the researchers said. So they set out to create one that performs well on multiple tasks, starting with Ego4D as the core dataset and experimenting with additional datasets to improve VC-1. “Since Ego4D is heavily focused on everyday activities like cooking, gardening, and crafting, we also consider egocentric video datasets that explore houses and apartments,” the researchers wrote.

The visual cortex is just one element of embodied AI, however. For a robot to work completely autonomously in the real world, it must also be capable of manipulating real-world objects. Robots need to be able to navigate to an object, pick it up, carry it to another location and put it down, and they must do all of this based on what they see and hear.

To address this, Meta’s AI experts collaborated with researchers at the Georgia Institute of Technology to develop a new technique known as “adaptive skill coordination,” or ASC, in which robots are trained entirely in simulation and the learned skills are then transferred to a real-world robot.

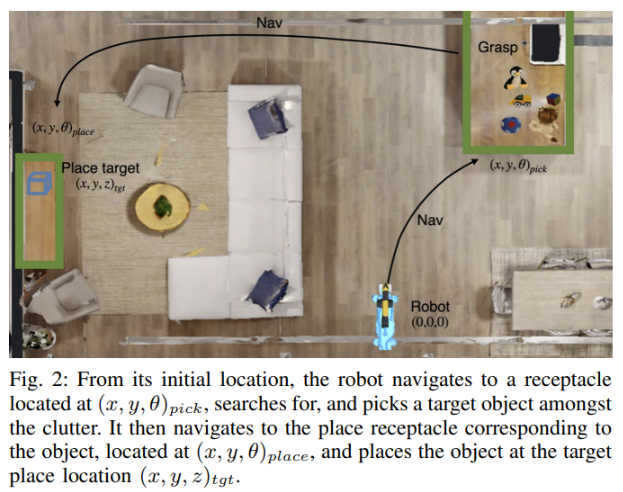

Meta demonstrated the effectiveness of its ASC technique in collaboration with Boston Dynamics Inc. The ASC model was integrated with Boston Dynamics’ Spot robot, which has robust sensing, navigation and manipulation capabilities, albeit ones that require a great deal of human intervention.

“For example, picking an object requires a person to click on the object on the robot’s tablet,” the researchers wrote. “Our aim is to build AI models that can sense the world from onboard sensing and motor commands through Boston Dynamics APIs.”

ASC was developed for Spot using the Habitat simulator, in environments built from the HM3D and ReplicaCAD datasets, which contain indoor 3D scans of more than 1,000 homes. The simulated Spot robot was taught to move around a previously unseen home, pick up out-of-place objects, carry them to the right location and put them down there. This knowledge was then transferred to real-world Spot robots, which carried out the same tasks autonomously, based on their learned notion of what houses look like.

“When we put our work to the test, we used two significantly different real-world environments where Spot was asked to rearrange a variety of objects — a fully furnished 185-square-meter apartment and a 65-square-meter university lab,” the researchers wrote. “Overall, ASC achieved near-perfect performance, succeeding on 59 of 60 episodes, overcoming hardware instabilities, picking failures, and adversarial disturbances like moving obstacles or blocked paths.”

Meta’s researchers say they’re open-sourcing the VC-1 model today and are sharing detailed findings on scaling model size, dataset size and more in an accompanying paper. The team’s next focus will be to integrate VC-1 with ASC to create a single system that gets closer to true embodied AI.
