INFRA

Nvidia Corp. announced early Monday that its most powerful artificial intelligence chip yet, the GH200 Grace Hopper Superchip, is now in full production.

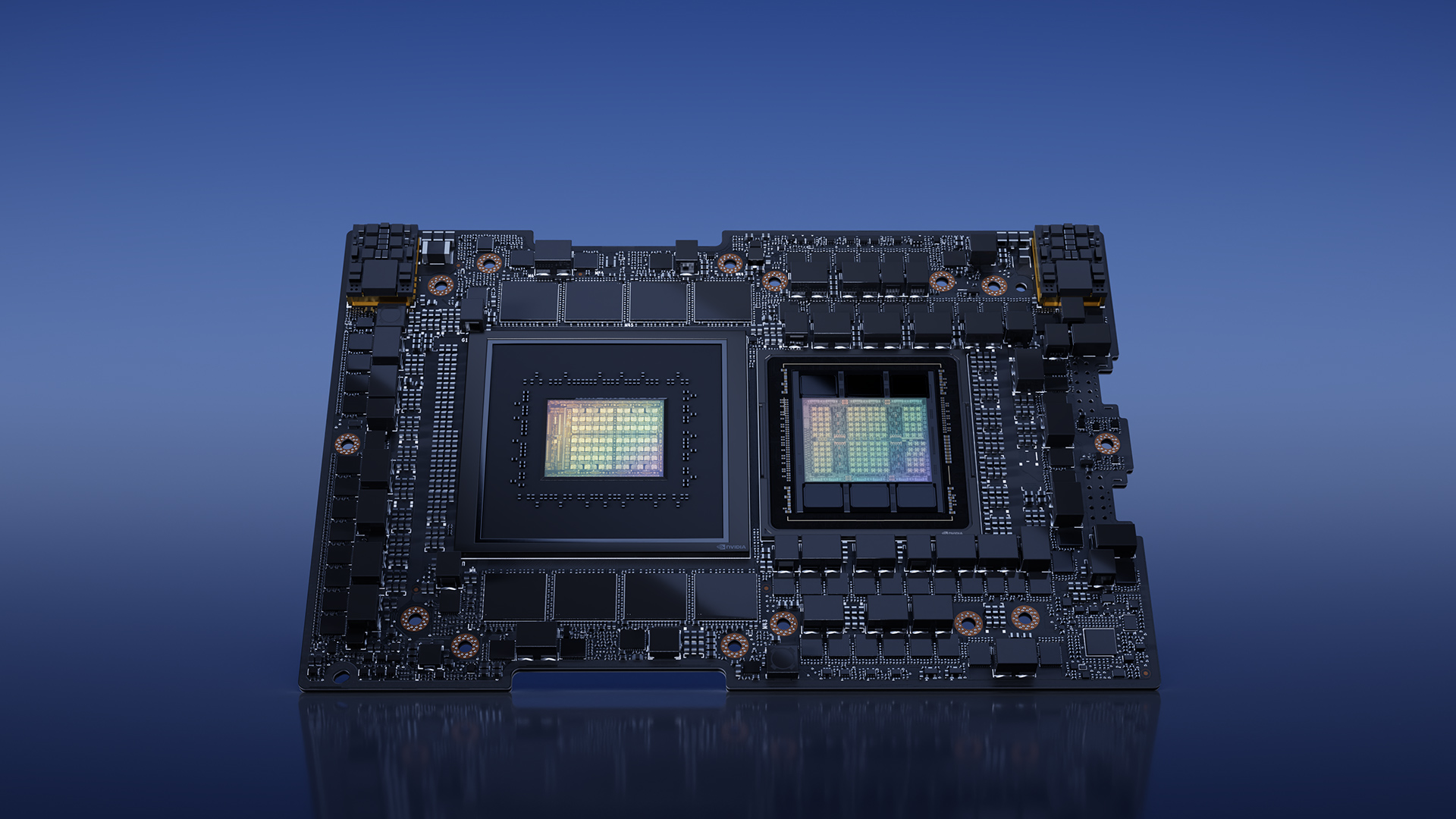

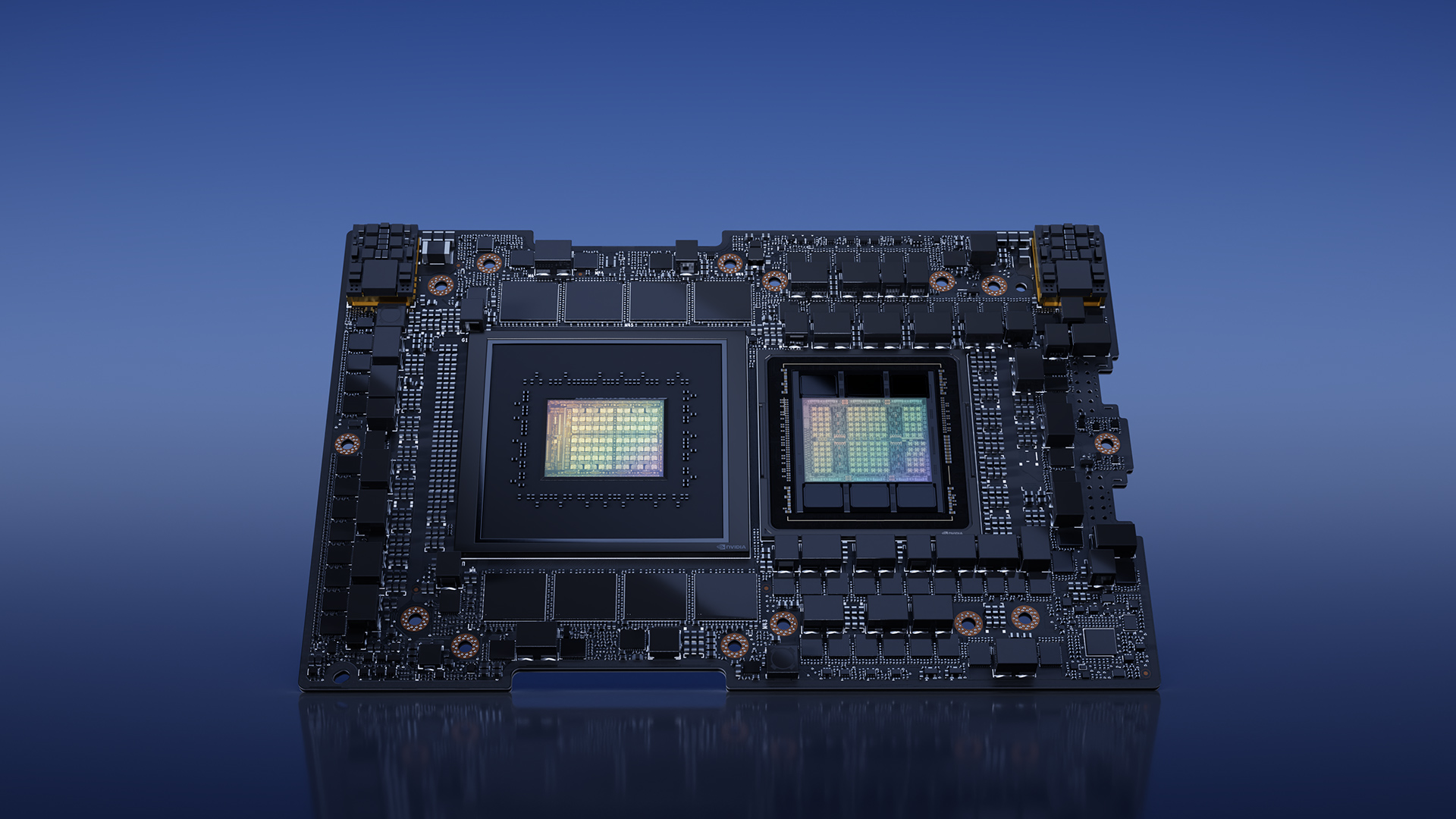

The Nvidia GH200 Superchip (pictured) has been designed to power systems that will run some of the most sophisticated and complex AI workloads yet, including training the next generation of generative AI models.

It was announced at the COMPUTEX 2023 event in Taiwan by Nvidia Chief Executive Jensen Huang, who also revealed the first computer systems that will be powered by the Superchip. Huang explained that the GH200 combines Nvidia’s Arm-based Grace central processing unit and Hopper graphics processing unit architectures into a single chip, using Nvidia’s NVLink-C2C interconnect technology.

The new chip boasts total bandwidth of 900 gigabytes per second, which is seven times higher than the standard PCIe Gen5 lanes found in today’s most advanced accelerated computing systems. The Superchip also uses five times less power, Nvidia said, enabling it to address demanding AI and high-performance computing applications more efficiently.
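As a rough sanity check on the "seven times" figure, the short sketch below compares the quoted 900 GB/s with a PCIe Gen5 x16 link. The x16 figure of roughly 128 GB/s (about 64 GB/s in each direction) is an assumption that does not come from Nvidia's announcement, and the names are purely illustrative.

```python
# Rough comparison of NVLink-C2C bandwidth with a PCIe Gen5 x16 link.
NVLINK_C2C_GBPS = 900      # total bandwidth quoted in the article (GB/s)
PCIE_GEN5_X16_GBPS = 128   # assumption: ~64 GB/s per direction, both directions


def bandwidth_ratio(fast_gbps: float, slow_gbps: float) -> float:
    """Return how many times faster the first link is than the second."""
    return fast_gbps / slow_gbps


ratio = bandwidth_ratio(NVLINK_C2C_GBPS, PCIE_GEN5_X16_GBPS)
print(f"NVLink-C2C is about {ratio:.1f}x a PCIe Gen5 x16 link")
```

Under that assumption the ratio comes out at roughly seven, in line with the quoted comparison.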

The Nvidia GH200 Superchip is especially promising for generative AI workloads epitomized by OpenAI LP’s ChatGPT, which has taken the technology industry by storm thanks to its almost humanlike ability to generate new content from prompts.

“Generative AI is rapidly transforming businesses, unlocking new opportunities and accelerating discovery in healthcare, finance, business services and many more industries,” said Ian Buck, Nvidia’s vice president of Accelerated Computing. “With Grace Hopper Superchips in full production, manufacturers worldwide will soon provide the accelerated infrastructure enterprises need to build and deploy generative AI applications that leverage their unique proprietary data.”

One of the first systems to integrate the GH200 Superchip will be Nvidia’s own next-generation, large-memory AI supercomputer, the Nvidia DGX GH200 (below). According to Nvidia, the new system uses the NVLink Switch System to combine 256 GH200 superchips, allowing them to function as a single GPU that provides 1 exaflops of performance, or 1 quintillion floating-point operations per second, and 144 terabytes of shared memory.

That means it has almost 500 times more memory than Nvidia’s previous generation DGX A100 supercomputer, which launched in 2020. It’s vastly more powerful too, with the DGX A100 combining just eight GPUs into a single system.
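The memory figures can be roughly reproduced with simple arithmetic. The sketch below is a back-of-the-envelope check, not Nvidia's own calculation: the per-chip memory (480 GB of CPU memory plus 96 GB of GPU memory) and the 320 GB total for the original DGX A100 are assumptions not stated in the announcement, and terabytes are counted in binary units (1 TB = 1,024 GB).

```python
# Back-of-the-envelope check of the DGX GH200 shared-memory figures.
GH200_COUNT = 256                 # superchips per DGX GH200, per the article
MEMORY_PER_GH200_GB = 480 + 96    # assumption: CPU memory + GPU memory per chip
DGX_A100_MEMORY_GB = 320          # assumption: 8 GPUs x 40 GB in the 2020 system

total_gb = GH200_COUNT * MEMORY_PER_GH200_GB
total_tb = total_gb / 1024        # binary terabytes
ratio_vs_a100 = total_gb / DGX_A100_MEMORY_GB

print(f"Shared memory: {total_tb:.0f} TB")
print(f"Ratio to DGX A100: about {ratio_vs_a100:.0f}x")
```

Under these assumptions the total works out to 144 TB, and the ratio to the DGX A100 lands somewhat below 500, consistent with the "almost 500 times" claim.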

Nvidia’s DGX GH200 Supercomputer

“DGX GH200 AI supercomputers integrate Nvidia’s most advanced accelerated computing and networking technologies to expand the frontier of AI,” Huang said.

The DGX GH200 AI supercomputers will also come with a complete software stack for running AI and data analytics workloads, Nvidia said. For instance, they’re powered by Nvidia’s Base Command software, which provides AI workflow management, cluster management, libraries to accelerate compute and storage, plus networking infrastructure and system software. Meanwhile, Nvidia AI Enterprise is a software layer that contains more than 100 AI frameworks, pretrained models and development tools to streamline the production of generative AI, computer vision, speech AI and other kinds of models.

Holger Mueller of Constellation Research Inc. said Nvidia is effectively combining two really solid products into one by merging its Grace and Hopper architectures with NVLink. “The result is higher performance and capacity and a simplified infrastructure for building AI-powered applications,” he said. “Users will gain immensely from the ability to treat so many GPUs and their power as one logical GPU.”

Nvidia said some of the first customers to get their hands on the new DGX GH200 AI supercomputers will be Google Cloud, Meta Platforms Inc. and Microsoft Corp. It will also provide the DGX GH200 design as a blueprint for cloud service providers that wish to customize it for their own infrastructure.

“Training large AI models is traditionally a resource- and time-intensive task,” said Girish Bablani, corporate vice president of Azure Infrastructure at Microsoft. “The potential for DGX GH200 to work with terabyte-sized datasets would allow developers to conduct advanced research at a larger scale and accelerated speeds.”

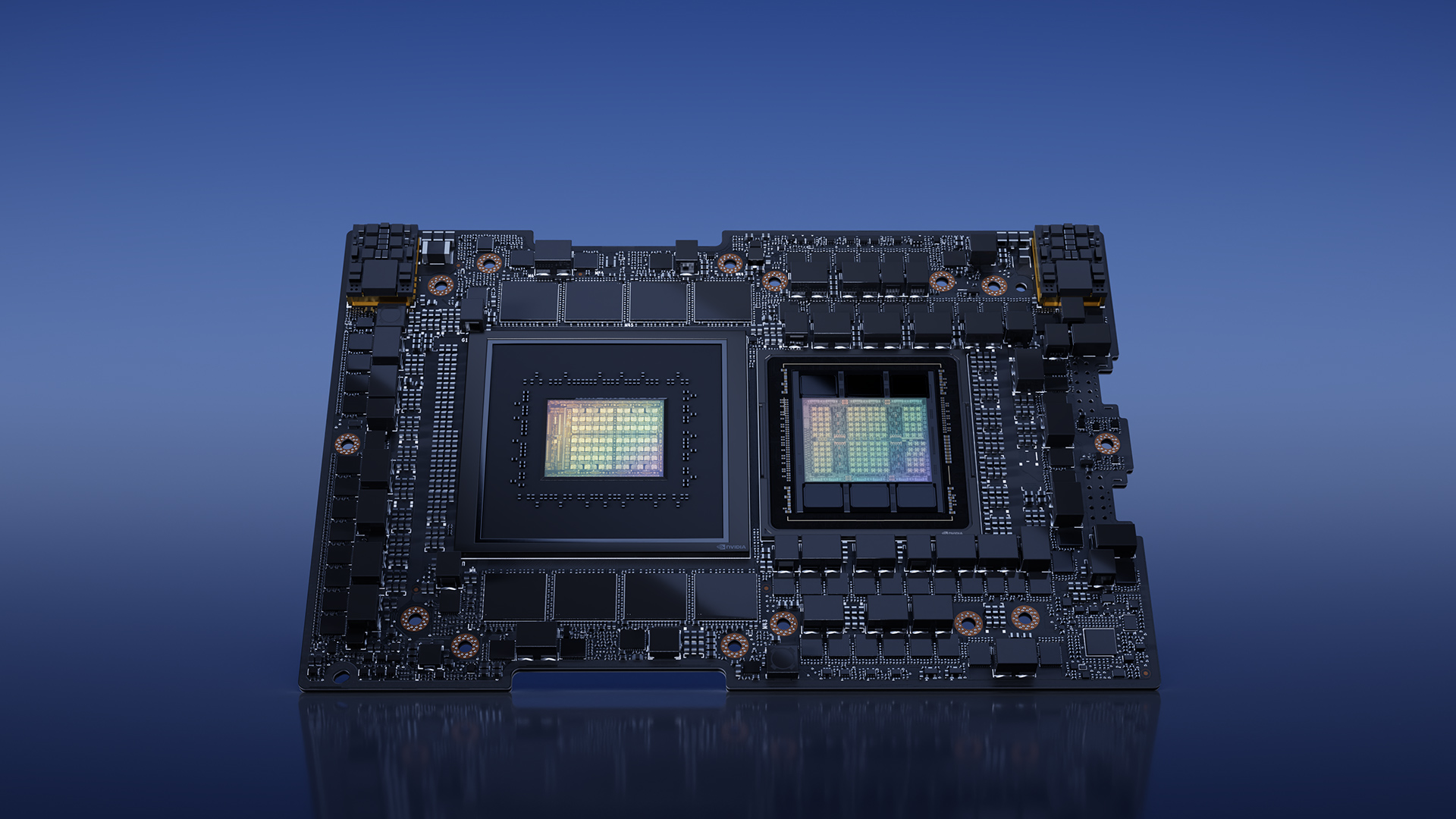

The GH200 Superchip will be used to advance generative AI applications

Nvidia said it will also build its own DGX GH200-based AI supercomputer for its research and development teams. That machine will be called Nvidia Helios, and will combine the power of four DGX GH200 systems, interconnected using Nvidia’s Quantum-2 InfiniBand networking technology. Helios will encompass a total of 1,024 GH200 superchips when it comes online by the end of the year.
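The Helios totals follow directly from the per-system figures quoted for the DGX GH200. The sketch below simply multiplies them out; treating memory and peak performance as scaling linearly across the four systems is an assumption made here for illustration, not a figure Nvidia has published.

```python
# Scale the quoted DGX GH200 figures up to the four-system Helios cluster.
SYSTEMS_IN_HELIOS = 4
CHIPS_PER_SYSTEM = 256         # GH200 superchips per DGX GH200
MEMORY_PER_SYSTEM_TB = 144     # shared memory per DGX GH200
EXAFLOPS_PER_SYSTEM = 1        # quoted performance per DGX GH200

helios_chips = SYSTEMS_IN_HELIOS * CHIPS_PER_SYSTEM
# Assumption: naive linear scaling, ignoring any interconnect overhead.
helios_memory_tb = SYSTEMS_IN_HELIOS * MEMORY_PER_SYSTEM_TB
helios_exaflops = SYSTEMS_IN_HELIOS * EXAFLOPS_PER_SYSTEM

print(f"Helios: {helios_chips} superchips, "
      f"{helios_memory_tb} TB memory, ~{helios_exaflops} exaflops peak")
```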

Last but not least, Nvidia’s server partners are planning to create their own systems based on the new GH200 Superchip. One of the first such systems to launch will be Quanta Computer Inc.’s S74G-2U system, which was announced today and will be made available later this year.

Other partners, including ASUSTek Computer Inc., Aetina Corp., AAEON Technology Inc., Cisco Systems Inc., Dell Technologies Inc., GIGA-BYTE Technology Co., Hewlett Packard Enterprise Co., Inventec Corp. and Pegatron Corp., will also launch systems powered by the GH200.

Nvidia said its server partners have adopted the new Nvidia MGX server specification that was also announced Monday. According to Nvidia, MGX is a modular reference architecture that enables partners to quickly and easily build more than 100 server variations based on its latest chip architecture to suit a wide range of AI, high-performance computing and other types of workloads. By using MGX, server makers can expect to reduce development costs by up to three-quarters, with development time reduced by two-thirds, to about six months.
