CLOUD

Google Cloud is beefing up its cloud-based artificial intelligence computing infrastructure, adding new tensor processing unit and graphics processing unit-based virtual machines it says are optimized to power the most demanding AI workloads.

The new AI-optimized VMs were announced at Google Cloud Next 2023, alongside the launch of an updated Google Distributed Cloud offering that makes it possible to run AI and data analytics workloads in any location, including at the network edge. In addition, Google debuted a new, enterprise-grade edition of Google Kubernetes Engine for container-based applications.

In a blog post, Amin Vahdat, Google’s general manager of machine learning, systems and cloud AI, said customers are demanding more powerful VMs to handle the exponentially growing requirements of new workloads such as generative AI and large language models. “The number of parameters in LLMs has increased by 10x per year over the past five years,” he said. “As a result, customers need AI-optimized infrastructure that is both cost-effective and scalable.”

To meet this need, Google has introduced the Cloud TPU v5e, available now in preview. The company says it is the most cost-efficient, versatile and scalable cloud TPU it has built so far, with integration into GKE, Google’s Vertex AI machine learning platform and leading AI frameworks such as PyTorch, TensorFlow and JAX. It’s designed for medium- and large-scale AI training and inference, and Google says it delivers up to two times higher training performance per dollar and up to 2.5 times higher inference performance per dollar for LLMs and generative AI models than the previous-generation Cloud TPU v4.
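
Because the TPU v5e claims are stated per dollar, one way to read them is as a lower bill for the same amount of work. The Python sketch below is illustrative only: it assumes cost scales linearly with work and that the “up to” ratios apply in full, and the $10,000 TPU v4 baseline is a made-up figure, not Google pricing.

```python
# Hypothetical illustration: convert "performance per dollar" gains into the
# cost of a fixed job. Assumes cost scales linearly with work done and that
# the "up to" ratios quoted for TPU v5e vs. TPU v4 apply in full.

TRAINING_PERF_PER_DOLLAR_GAIN = 2.0   # "up to two times" (training)
INFERENCE_PERF_PER_DOLLAR_GAIN = 2.5  # "up to 2.5 times" (inference)


def cost_on_new_hardware(baseline_cost: float, perf_per_dollar_gain: float) -> float:
    """Cost of the same workload if each dollar buys `gain` times more work."""
    if perf_per_dollar_gain <= 0:
        raise ValueError("perf_per_dollar_gain must be positive")
    return baseline_cost / perf_per_dollar_gain


if __name__ == "__main__":
    v4_job_cost = 10_000.0  # hypothetical baseline cost on TPU v4, in dollars
    train = cost_on_new_hardware(v4_job_cost, TRAINING_PERF_PER_DOLLAR_GAIN)
    serve = cost_on_new_hardware(v4_job_cost, INFERENCE_PERF_PER_DOLLAR_GAIN)
    print(f"training:  ${train:,.0f}")
    print(f"inference: ${serve:,.0f}")
```

Under those assumptions, a job costing $10,000 on TPU v4 would cost about $5,000 to train or $4,000 to serve on TPU v5e; real savings will depend on the model and workload.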

The good news is that customers don’t have to sacrifice performance or flexibility for these cost-efficiency gains, Vahdat said. According to him, TPU v5e pods balance performance, flexibility and efficiency, interconnecting up to 256 chips with an aggregate bandwidth of more than 400 terabits per second and 100 petaOps of compute performance. Customers can choose from eight different VM configurations, ranging from a single chip to 256 chips in a single slice, which Vahdat said gives them the flexibility to train and run a wide variety of LLMs and other AI models.
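
Dividing the pod-level figures by the chip count gives a rough per-chip picture. This back-of-the-envelope sketch assumes the quoted numbers are aggregate totals spread evenly over all 256 chips, which is a simplification; Google’s own per-chip specifications may be stated differently.

```python
# Back-of-the-envelope split of the quoted TPU v5e pod figures across chips.
# Assumes both figures are aggregate totals divided evenly over every chip.

POD_CHIPS = 256
POD_PETAOPS = 100.0    # quoted pod-level compute, in petaOps
POD_BANDWIDTH = 400.0  # quoted lower bound on pod bandwidth, unit as quoted


def per_chip(total: float, chips: int = POD_CHIPS) -> float:
    """Share of an aggregate pod-level figure attributable to one chip."""
    if chips <= 0:
        raise ValueError("chips must be positive")
    return total / chips


if __name__ == "__main__":
    # 1 petaOp = 1,000 teraOps
    print(f"~{per_chip(POD_PETAOPS) * 1000:.1f} teraOps per chip")
    print(f"~{per_chip(POD_BANDWIDTH):.3f} (same bandwidth unit) per chip")
```

Roughly 390 teraOps and about 1.56 units of bandwidth per chip, if the even-split assumption holds.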

With the launch of the Cloud TPU v5e VMs, Google is also making a new Multislice technology available in preview, which makes it possible to combine tens of thousands of TPU v5e chips, or of Google’s older TPU v4 chips, in a single workload. Previously, customers were limited to a single slice of TPU chips, which capped them at a maximum of 3,072 chips with TPU v4. With Multislice, chips within each slice communicate over Google’s high-speed interchip interconnect, while separate slices are linked over the data center network.
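
As a rough sizing exercise, the number of slices a Multislice job would need is a ceiling division of the target chip count by the slice size. The sketch below assumes every slice is full size, using the figures quoted in this article (256 chips per TPU v5e slice, 3,072 per TPU v4 slice); the 20,000-chip target is hypothetical, and `slices_needed` is an illustrative helper, not part of any Google API.

```python
# Hypothetical sizing helper: how many full-size slices would a Multislice
# job need to reach a target chip count? Slice sizes are the maximums quoted
# in the article; real deployments may mix or choose smaller slice shapes.

MAX_SLICE_CHIPS = {"v5e": 256, "v4": 3072}


def slices_needed(target_chips: int, generation: str) -> int:
    """Smallest number of full-size slices whose chips cover the target."""
    if target_chips <= 0:
        raise ValueError("target_chips must be positive")
    size = MAX_SLICE_CHIPS[generation]
    # Integer ceiling division avoids floating-point rounding.
    return (target_chips + size - 1) // size


if __name__ == "__main__":
    target = 20_000  # hypothetical "tens of thousands" target
    for gen in ("v5e", "v4"):
        print(gen, slices_needed(target, gen))
```

For 20,000 chips that works out to 79 TPU v5e slices or 7 TPU v4 slices, which is why cross-slice networking matters once jobs outgrow a single slice.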

Alongside the TPU v5e VMs, Google announced its new A3 VMs based on Nvidia Corp.’s latest H100 GPU, saying they are purpose-built for the most demanding generative AI workloads. According to Google, they offer a huge leap forward in terms of performance versus the prior-generation A100 GPUs, with three times faster training and 10 times more networking bandwidth. Because of the greater bandwidth, customers can scale up their models to tens of thousands of H100 GPUs, Vahdat said.

Now in preview, a single A3 VM packs eight H100 GPUs alongside 2 terabytes of host memory, and also contains Intel’s latest 4th Gen Xeon Scalable central processing units for offloading other application tasks.

Google said the generative AI startup Anthropic AI, a rival to OpenAI LP, is one of the earliest adopters of its new TPU v5e and A3 VMs. It has been using them together with Google Kubernetes Engine to train, deploy and share its most advanced models.

“GKE allows us to run and optimize our GPU and TPU infrastructure at scale, while Vertex AI will enable us to distribute our models to customers via the Vertex AI Model Garden,” said Anthropic co-founder Tom Brown. “Google’s next-generation AI infrastructure will bring price-performance benefits for our workloads as we continue to build the next wave of AI.”

Google Distributed Cloud gives customers a way to bring Google Cloud’s software stack into their own data centers, similar to Microsoft Corp.’s Azure Stack. That way, they can run on-premises applications with the same Google Cloud application programming interfaces, control planes, hardware and tooling they use with their cloud-hosted apps. “A lot of customers like that full management,” Sachin Gupta, vice president and general manager of Google Cloud’s Infrastructure and Solutions Group, told SiliconANGLE in an exclusive interview.

With today’s updates, Google Distributed Cloud is being adapted to run data and AI workloads in any location, including at the network edge, Google said. These capabilities are enabled by an integration with Google’s Vertex AI platform, which brings multiple machine learning services into customers’ own data centers for the first time.

Vertex AI on GDC Hosted offers pretrained models for speech, translation, optical character recognition and more, and customers can use the platform to optimize them for more specific workloads using their own data. Other capabilities on Vertex AI on GDC Hosted include Vertex Prediction, which helps automate serving custom models, and Vertex Pipelines, to manage machine learning operations at scale, Google said.

Google Distributed Cloud also gains support for AlloyDB Omni, a new managed database service now in preview that brings the benefits of the cloud-based AlloyDB offering to customers’ on-premises data centers. As a PostgreSQL-compatible database, AlloyDB Omni suits transactional workloads, and its support for vector embeddings, which are numerical representations of data generated by LLMs, also makes it a fit for AI training data.
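
To make “vector embeddings” concrete: an embedding is a list of numbers, and a common query is to find stored items whose vectors are most similar to a query vector. The pure-Python sketch below ranks rows by cosine similarity over tiny, made-up three-dimensional vectors (real LLM embeddings have hundreds or thousands of dimensions). It illustrates the general technique only and is not AlloyDB Omni’s API; in PostgreSQL-compatible databases this kind of search is commonly done with an extension such as pgvector.

```python
import math

# Toy illustration of similarity search over vector embeddings.
# The vectors are invented; this is not any database's actual API.


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("vectors must be non-zero")
    return dot / (norm_a * norm_b)


def nearest(query: list[float], rows: dict[str, list[float]], k: int = 1) -> list[str]:
    """Return the k row keys whose embeddings are most similar to the query."""
    ranked = sorted(rows, key=lambda key: cosine_similarity(query, rows[key]), reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    documents = {
        "invoice": [0.9, 0.1, 0.0],
        "receipt": [0.8, 0.2, 0.1],
        "recipe": [0.0, 0.3, 0.9],
    }
    print(nearest([1.0, 0.1, 0.0], documents, k=2))
```

Here the query vector points in nearly the same direction as “invoice” and “receipt,” so those two rank ahead of “recipe.”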

Finally, Google is launching new hardware for customers to run Google Distributed Cloud onsite. That includes a new hardware stack that is said to be its most powerful yet, featuring Intel’s 4th Gen Xeon CPUs combined with Nvidia’s A100 GPUs and a high-performance networking fabric with up to 400 gigabits per second of throughput. It offers improved virtual CPU performance, more memory, PCIe Gen5 input/output performance and 400G/100G network links.

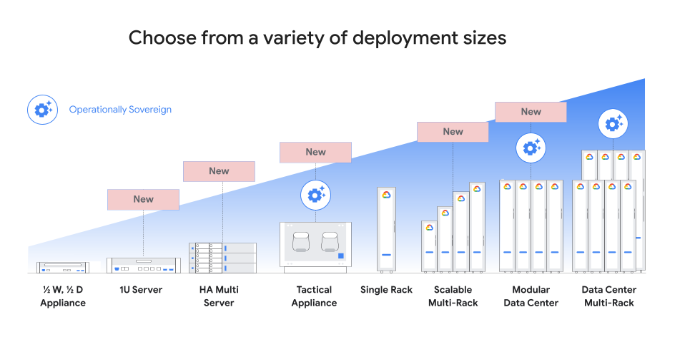

Meanwhile, Google announced three new GDC Edge platforms, based on small form-factor servers in small, medium and large configurations, with different vCPU, RAM and storage capacities and a rugged design that makes them suitable for locations such as retail stores and restaurants. “We need a variety of form factors for all these options,” Gupta said.

Google Kubernetes Engine is Google’s platform for managing containers, which host the components of modern applications, and starting today customers can get new capabilities with GKE Enterprise.

In a blog post, Google explained that the GKE Enterprise edition supports multicluster fleets, making it possible to group similar workloads into dedicated clusters for the first time, apply custom configurations and policy guardrails to those fleets, and isolate sensitive workloads. It also adds new managed security features such as advanced workload vulnerability insights and governance and policy controls, plus a managed service mesh.

Google said GKE Enterprise is fully managed and integrated, meaning customers will spend less time and effort on managing the platform and more time on building applications and experiences for their users. Notably, GKE Enterprise also supports hybrid and multicloud platforms, making it possible to run container workloads in any location, including other public clouds or on-premises.

“In short, GKE Enterprise makes it faster and safer for distributed teams to run even their most business-critical workloads at scale, without growing costs or headcount,” said Chen Goldberg, general manager and vice president of Google Cloud Runtimes. She claimed that customers have seen “amazing results” in early deployments, improving productivity by 45% and speeding up software deployment times by more than 70%.

With reporting from Robert Hof
