Low-code machine learning development startup Predibase Inc. has announced the availability of a new software development kit that it promises will make it easy for developers to train smaller, task-specific large language models.

What’s more, they’ll be able to do so using the cheapest and most readily available cloud-based hardware. Once the models have been fine-tuned, Predibase says, they can then be served using its lightweight, modular LLM architecture that can dynamically load and unload artificial intelligence models in seconds.

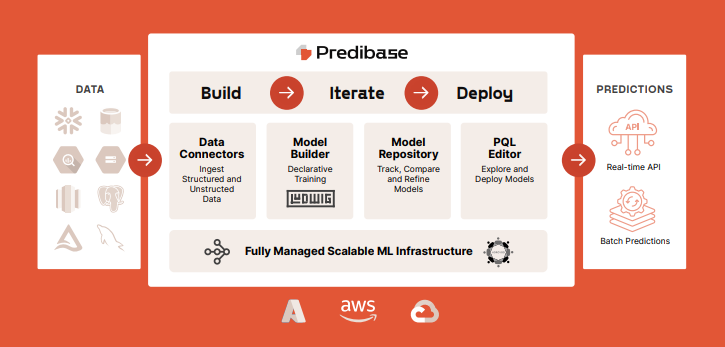

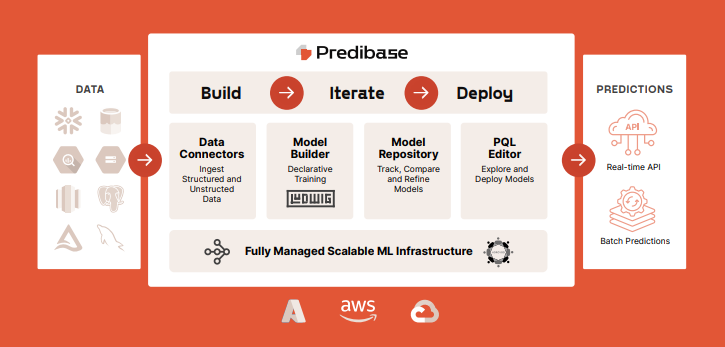

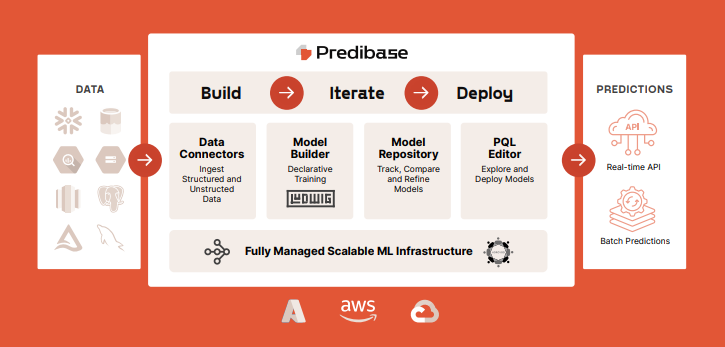

Predibase, which recently closed on a $12.2 million expansion to its Series A funding round, offers a machine learning platform that makes it simpler for developers to build, iterate and deploy highly capable AI models and applications. It says its aim is to help smaller companies compete with the big boys, such as OpenAI LP and Google LLC, by removing the need for them to use complex machine learning tools and low-level machine learning frameworks.

With Predibase’s platform, teams define what they want to predict using a selection of prebuilt large AI models, and let the platform do the rest. It says novice users can leverage recommended model architectures to get started, while more experienced practitioners can use the platform to fine-tune any model parameter. In this way, Predibase claims, it can reduce the time it takes to deploy machine learning models from months to just a few days.

Predibase said its new SDK simplifies AI model deployment and provides optimizations that enable training jobs to run efficiently on any kind of hardware. As a result, it claims to democratize access to LLMs at a time when high-end graphics processing units are in high demand.

Co-founder and Chief Executive Dev Rishi said the launch of the SDK means his company can now tackle two key problems that prevent wider adoption of AI. According to Rishi, studies show that more than 75% of enterprises refuse to use commercial LLMs in production due to concerns around ownership, privacy, cost and security. So the answer, he said, is open-source LLMs, but they carry their own problems.

“Even with access to high-performance GPUs in the cloud, training costs can reach thousands of dollars per job due to a lack of automated, reliable, cost-effective fine-tuning infrastructure,” Rishi explained. “Debugging and setting up environments require countless engineering hours. As a result, businesses can spend a fortune even before getting to the cost of serving in production.”

With the Predibase SDK, companies can take any open-source LLM and make it trainable on commodity GPUs such as the Nvidia T4. Because its SDK is built atop the open-source Ludwig framework for declarative model building, users only need to specify the base model, the dataset they want to use and a prompt template. Predibase then applies 4-bit quantization, low-rank adaptation, memory paging/offloading and other optimizations to ensure the model can be trained on whatever hardware is available, at the fastest speeds possible.
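To see why quantization and low-rank adaptation matter on a commodity GPU, here is a rough back-of-the-envelope sketch in Python. It is not Predibase's code: it assumes a 13-billion-parameter model, the T4's 16 GB of memory, a hidden size of 5,120 and a hypothetical adapter rank of 8, and it counts weights only, ignoring activations, optimizer state and quantization overhead.

```python
# Rough estimate only: weights-only memory, ignoring activations,
# optimizer state and per-block quantization overhead.

def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory needed for model weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Parameters added by one low-rank adapter: A (rank x d_in) plus B (d_out x rank)."""
    return rank * (d_in + d_out)


NUM_PARAMS = 13e9    # assumed: a 13-billion-parameter model
T4_MEMORY_GB = 16    # Nvidia T4 memory capacity
HIDDEN_SIZE = 5120   # assumed hidden dimension of the model
LORA_RANK = 8        # hypothetical adapter rank, chosen for illustration

for bits in (16, 8, 4):
    gb = weight_memory_gb(NUM_PARAMS, bits)
    verdict = "fits" if gb < T4_MEMORY_GB else "does not fit"
    print(f"{bits:>2}-bit weights: {gb:5.1f} GB ({verdict} in {T4_MEMORY_GB} GB)")

full = HIDDEN_SIZE * HIDDEN_SIZE
adapter = lora_trainable_params(HIDDEN_SIZE, HIDDEN_SIZE, LORA_RANK)
print(f"One {HIDDEN_SIZE}x{HIDDEN_SIZE} projection: {full:,} weights, "
      f"adapter trains {adapter:,} ({adapter / full:.2%})")
```

Under these assumptions, the weights alone exceed the T4's memory at 16 bits but not at 4 bits, and the adapter trains well under 1% of the parameters in each projection it is attached to.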

Predibase is so confident in the efficiency of its approach that it’s offering customers unlimited fine-tuning and serving based on the open-source Llama-2-13B model for free in a two-week trial.

As an added benefit, Predibase’s SDK can actually find the most cost-effective hardware available in the cloud for each training job, using its purpose-built orchestration logic. This is one of the main features of Predibase AI Cloud, a brand new service that supports multiple cloud environments and regions. It can automatically identify a wide range of cloud resources for each training job, enabling users to choose the best combination of training hardware based on their cost and performance requirements.
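The article does not describe how that orchestration logic works, but the general idea of cost-based hardware selection can be sketched in a few lines of Python. Everything here is hypothetical: the `GpuOffer` type, the offer names, prices and speed factors are placeholders for illustration, not real cloud pricing or Predibase's implementation.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class GpuOffer:
    """A hypothetical cloud GPU offer (all values are illustrative)."""
    name: str
    memory_gb: float
    hourly_usd: float
    relative_speed: float  # throughput relative to the baseline GPU (1.0)


def job_cost(offer: GpuOffer, baseline_hours: float) -> float:
    """Estimated job cost: faster hardware needs proportionally fewer hours."""
    return offer.hourly_usd * baseline_hours / offer.relative_speed


def cheapest_offer(
    offers: Sequence[GpuOffer],
    required_memory_gb: float,
    baseline_hours: float,
) -> Optional[GpuOffer]:
    """Return the lowest-cost offer with enough memory, or None if none qualifies."""
    feasible = [o for o in offers if o.memory_gb >= required_memory_gb]
    if not feasible:
        return None
    return min(feasible, key=lambda o: job_cost(o, baseline_hours))


# Placeholder catalog; names and prices are invented for this sketch.
CATALOG = [
    GpuOffer("gpu-small", memory_gb=16, hourly_usd=0.50, relative_speed=1.0),
    GpuOffer("gpu-medium", memory_gb=24, hourly_usd=1.20, relative_speed=2.0),
    GpuOffer("gpu-large", memory_gb=80, hourly_usd=4.00, relative_speed=5.0),
]

choice = cheapest_offer(CATALOG, required_memory_gb=12, baseline_hours=10)
print(choice.name if choice else "no suitable hardware")
```

A real scheduler would also have to weigh availability, region and deadlines, which is where the user's cost and performance requirements come in.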

Omdia Chief Analyst Bradley Shimmin said Predibase is one of the first platforms to implement recent innovations such as parameter efficient fine-tuning and model quantization. “Companies are achieving great results on more commodity hardware by fine-tuning smaller, often open source LLMs using a limited amount of highly curated data,” he said. “The challenge that remains, however, is how to operationalize these methods during development and then bring the final results forward into production in a cost-effective yet performant manner.”
