INFRA

Microsoft Corp. is looking to fuel the artificial intelligence and cloud computing ambitions of its customers with the launch of new, dedicated silicon for AI and other workloads.

The new chips announced today at Ignite 2023 include Azure Maia, Microsoft’s first custom AI accelerator available on Azure, which is designed to support workloads such as large language models and GitHub Copilot. They also include Azure Cobalt, the company’s first custom, in-house series of central processing units, which is built on an Arm architecture to balance performance and energy efficiency for common workloads.

In a blog post, Omar Khan, general manager of Azure infrastructure marketing at Microsoft, said AI transformation is one of the central themes of this year’s edition of Ignite. He said AI has advanced rapidly this year and is driving a wave of innovation that’s quickly changing what applications look like, and how they’re built and delivered. However, companies are struggling to build upon this innovation while juggling priorities such as rising costs and sustainability challenges.

“Today’s customers are looking for AI solutions that will meet all their needs,” Khan said. “In this new era of AI, we are redefining cloud infrastructure, from silicon to systems, to prepare for AI in every business, in every app, for everyone.”

Khan explained that the Azure Maia accelerator chip is customized for AI workloads such as LLMs, Bing and ChatGPT. It’s the first generation of a planned series of accelerators, boasting 105 billion transistors, making it one of the most powerful chips ever built on a five-nanometer process. The chip was designed in collaboration with OpenAI, which provided its deep insights into how its LLMs run on Azure’s cloud infrastructure to ensure it provides optimal performance.

According to OpenAI Chief Executive Sam Altman, his company’s experts have collaborated with Microsoft on every aspect of Azure’s AI infrastructure layer. “We’ve worked together to refine and test it with our models,” he said. “Azure’s end-to-end AI architecture, now optimized down to the silicon with Maia, paves the way for training more capable models and making those models cheaper for our customers.”

The Maia 100 AI Accelerator has also been built specifically to fit in with the rest of the Azure hardware stack, said Microsoft technical fellow Brian Harry. The alignment of the chip’s design with Azure’s larger AI infrastructure will yield huge gains in performance and efficiency, he promised.

The Cobalt CPU is a 64-bit, 128-core chip designed to power Microsoft services such as Teams and Azure SQL, and it’s said to deliver a 40% performance improvement over current-generation Azure Arm chips. Arm-based chips, known for their energy-efficient design, can help customers meet their sustainability goals while ensuring their cloud-native applications perform as expected. Much of the design focus was on performance per watt, that is, on getting the most computing work out of the chip for every unit of energy it consumes.

“The architecture and implementation is designed with power efficiency in mind,” Harry said. “We’re making the most efficient use of the transistors on the silicon. Multiply those efficiency gains in servers across all our data centers, it adds up to a pretty big number.”

According to Rani Borkar, corporate vice president for Azure hardware systems and infrastructure, Microsoft opted to design its own, in-house chips to ensure that the silicon is tailored perfectly for its cloud infrastructure and the AI workloads it runs. The chips will be integrated with customized server boards and placed within tailor-made server racks that easily fit inside the company’s data centers. They will then work hand-in-hand with Microsoft’s specially designed data center software. The result, Borkar said, is a hardware system that provides maximum flexibility and can be optimized for power, performance, sustainability or cost.

“At Microsoft we are co-designing and optimizing hardware and software together so that one plus one is greater than two,” Borkar said. “We have visibility into the entire stack, and silicon is just one of the ingredients.”

Khan said the new chips represent the last piece of the puzzle for Microsoft’s next-generation cloud infrastructure and will start rolling out to customers early next year. They’ll initially power services including Microsoft Copilot and Azure OpenAI Service, before being expanded to other kinds of workloads.

Holger Mueller of Constellation Research Inc. said it makes sense for Microsoft to develop its own, customized hardware for AI as it already offers some of the most comprehensive software for developing and running these workloads. “The combination of performance and power, coupled with the networking capabilities of Azure makes these custom chips very interesting offerings for enterprises,” he said. “Enterprises will be very excited to see what Azure Maia and Azure Cobalt can deliver.”

Alongside the new hardware, Microsoft has worked to improve the networking infrastructure that links it together. With its Hollow Core Fiber technology and a new service called Azure Boost, which is generally available today, the company said it will be able to massively accelerate networking and storage. The combination of the two will enable customers to achieve up to 12.5 gigabytes per second of throughput and 650,000 input/output operations per second in remote storage performance, and up to 200 gigabits per second in networking bandwidth to support the most data-intensive workloads.
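The storage figure above is quoted in gigabytes and the networking figure in gigabits, so the two are not directly comparable as written. The following is a minimal Python sketch that puts them in the same units, using only the numbers quoted in the article. The 1,000-gigabyte dataset is a hypothetical size chosen for illustration, and the transfer times assume the quoted peak rates are sustained, which real workloads may not achieve.

```python
# Peak figures quoted in the article for Azure Boost.
STORAGE_THROUGHPUT_GBYTES_PER_S = 12.5  # remote storage, gigabytes per second
NETWORK_BANDWIDTH_GBITS_PER_S = 200     # networking, gigabits per second

BITS_PER_BYTE = 8


def gbytes_to_gbits(gbytes_per_s: float) -> float:
    """Convert gigabytes per second to gigabits per second."""
    return gbytes_per_s * BITS_PER_BYTE


def gbits_to_gbytes(gbits_per_s: float) -> float:
    """Convert gigabits per second to gigabytes per second."""
    return gbits_per_s / BITS_PER_BYTE


def transfer_seconds(size_gbytes: float, rate_gbytes_per_s: float) -> float:
    """Idealized transfer time, assuming the peak rate is sustained throughout."""
    return size_gbytes / rate_gbytes_per_s


if __name__ == "__main__":
    # Hypothetical dataset size, not a figure from the article.
    dataset_gbytes = 1000.0

    storage_gbits = gbytes_to_gbits(STORAGE_THROUGHPUT_GBYTES_PER_S)
    network_gbytes = gbits_to_gbytes(NETWORK_BANDWIDTH_GBITS_PER_S)

    print(f"Storage throughput: {storage_gbits} Gbit/s")
    print(f"Network bandwidth:  {network_gbytes} GB/s")
    print(f"Storage time:  {transfer_seconds(dataset_gbytes, STORAGE_THROUGHPUT_GBYTES_PER_S)} s")
    print(f"Network time:  {transfer_seconds(dataset_gbytes, network_gbytes)} s")
```

Expressed in the same units, the quoted peak networking bandwidth is twice the quoted peak remote storage throughput.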

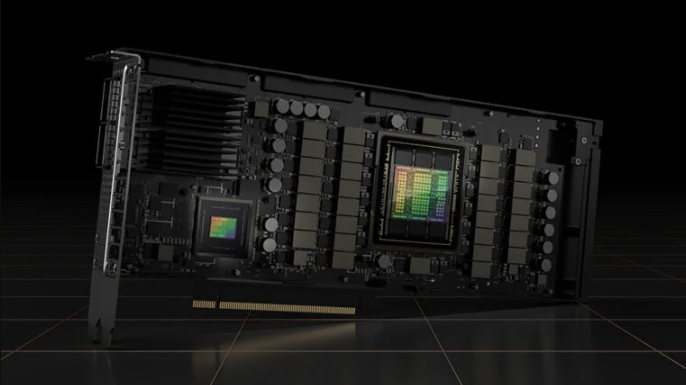

Customers will have new hardware options besides Microsoft’s custom chips. In a separate announcement, the company said it’s partnering with Nvidia Corp. to provide access to its newest H100 Tensor Core GPU-based virtual machines. These are targeted at mid- to large-scale AI workloads, such as Azure Confidential virtual machines. It’s also going to provide access to Nvidia’s H200 Tensor Core GPU next year to support inference for larger AI models with the lowest possible latency.

A third option for AI workloads will be the new MI300 accelerator built by Advanced Micro Devices Inc. The MI300 was announced earlier this year and will become available on Azure early next year. Microsoft said the addition of this high-performance chip gives customers more options for running their AI applications.

“Customer obsession means we provide whatever is best for our customers, and that means taking what is available in the ecosystem as well as what we have developed,” Borkar said. “We will continue to work with all of our partners to deliver to the customer what they want.”

Microsoft had more to share on the Azure infrastructure side, announcing that the Oracle Database@Azure service will become generally available in its US East Azure region in December. It will give Azure customers in that region direct access to Oracle Corp.’s database services running on Oracle Cloud Infrastructure deployed in Microsoft’s data centers. The company promised it will match the performance, scale and workload availability of the Oracle Exadata Database Service on OCI, while adding the benefits Azure has to offer, including its security, flexibility and services.

Meanwhile, VMware Inc. customers can access VMware vSphere on Azure Arc. The service, which is generally available now, melds Azure’s and VMware’s infrastructure so that developers can build more sophisticated applications using Azure’s services. In addition, Azure IoT Operations enabled by Azure Arc is now available in preview. With this, customers can build internet of things applications and services with less complexity, enabling remote devices to make real-time decisions driven by AI-powered insights.

Finally, Microsoft shared some brief details of Microsoft Copilot for Azure, which is launching in preview today. It’s based on generative AI technology and is intended to simplify how users design, operate and troubleshoot applications and infrastructure in any environment. Customers can apply now for access to the service ahead of its general availability.
