AI

Sourcegraph Inc., a startup best known for developing a universal code search tool, today announced it’s releasing the company’s generative artificial intelligence-powered chatbot coding assistant Cody into general availability.

With Cody, developers get an AI assistant that uses intelligent code context to provide answers to technical questions, provide code completion in editors, generate code blocks, provide test cases and more.

What separates Cody from other AI assistants in the market is that the chatbot is aware of more than just the code in the file the developer is looking at or a single repository. It can search and navigate a company’s entire codebase using Sourcegraph’s specialized technology.

In an interview with SiliconANGLE, co-founder and Chief Executive Quinn Slack said the company’s start in code search is the foundation that made Cody as useful and context-aware as it is.

“To build code search, we had to build a deep understanding of code,” he said. “And with advances in AI, we can take that deep understanding and feed it to the AI to help it understand questions, autocomplete better or write tests better. Overall, that’s what’s making Cody the most powerful and accurate AI available and we’re the first to connect an AI to this code context.”

The company’s name itself, Sourcegraph, refers to a knowledge graph of the code, which provides a crucial context-aware source of information for both the AI and developers when it comes to the code.

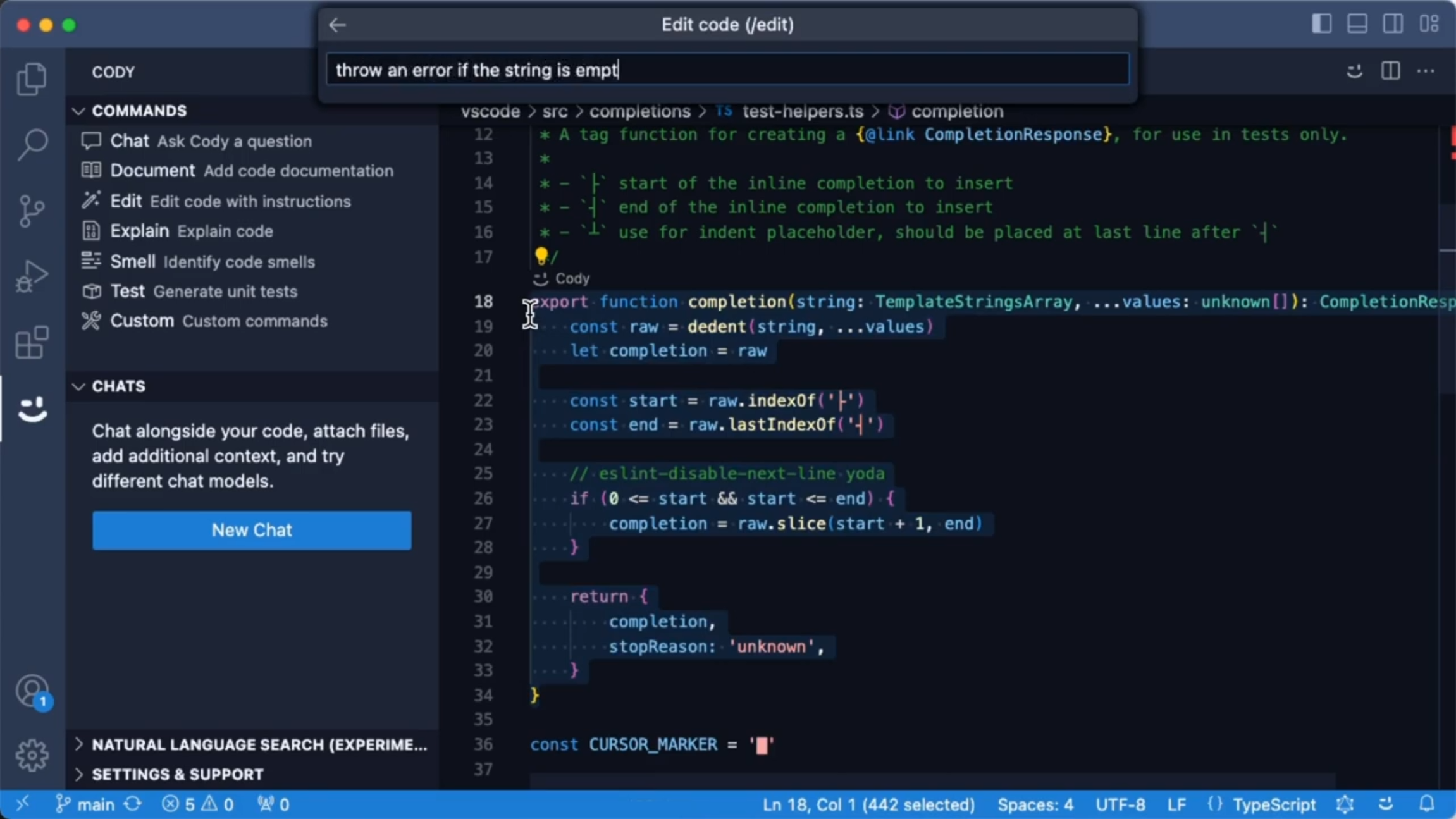

Cody is now generally available as an editor extension for Visual Studio Code. The AI assistant is also available in beta for JetBrains editors and Neovim, and can be enabled in Sourcegraph’s Code Search engine. It provides both code completion within the editor and a chatbot experience on the side, where users can ask questions and it will generate code snippets or complete functions that take into account the entire context of their codebase.

When a user asks Cody a technical question about their codebase, it uses Sourcegraph’s knowledge of the code to search the company’s repositories, interpret the relevant code and provide a conversational response. That means users no longer need to write out technical search strings to find functions or source references.

Every reply from Cody about code search also comes with a series of references from the codebase that provide deeper context for the technical question and let the developer dive directly into the code. So if developers ask how a function operates or about an app in their codebase, Cody can give them an easy-to-read explanation of what it is, alongside references they can use to find the relevant dependencies and elements in the code itself.

“Sometimes these context links are just as helpful as the answer it gives because I can actually go and dig into the source of truth, the primary source material,” said Slack.

Developers can also extend Cody with custom commands, built on custom LLM prompts that fit their workflows. For example, they can have the AI assistant write commit messages that summarize the code changes between two open tabs, or ask Cody to compare the open tabs. That lets developers create commands for tasks they perform often, reducing toil in their everyday work.

To make sure customers aren’t beholden to one generative AI provider, Sourcegraph lets them choose the large language model that powers Cody, with options including Anthropic’s Claude 2.1 and OpenAI’s GPT-4 Turbo. Developers can also choose a different LLM provider through Microsoft Corp.’s Azure and Amazon Web Services Inc.’s Bedrock.

According to Slack, deep code context is only the beginning. In the future, Cody should be able to see everything related to the developer stack and assist across the entire workflow.

“We don’t want to stop there,” Slack said. “And the really exciting thing is how can we get all the information that a human developer knows and bring that into the AI? For example, bringing in information from Confluence, Splunk, Datadog and Jira so that Cody can answer a question about how to fix an error. All of this data is critical. There’s way more of that data than there is in code and no human could do their job without it.”

For now, Cody is free for individual developers, with local context only and monthly limits on autocomplete and chat. A Cody Pro tier, priced at $9 per user per month, removes the monthly limits and lets users choose their LLM provider; it is free to use until February. A Cody Enterprise tier will be available in early 2024.
