INFRA

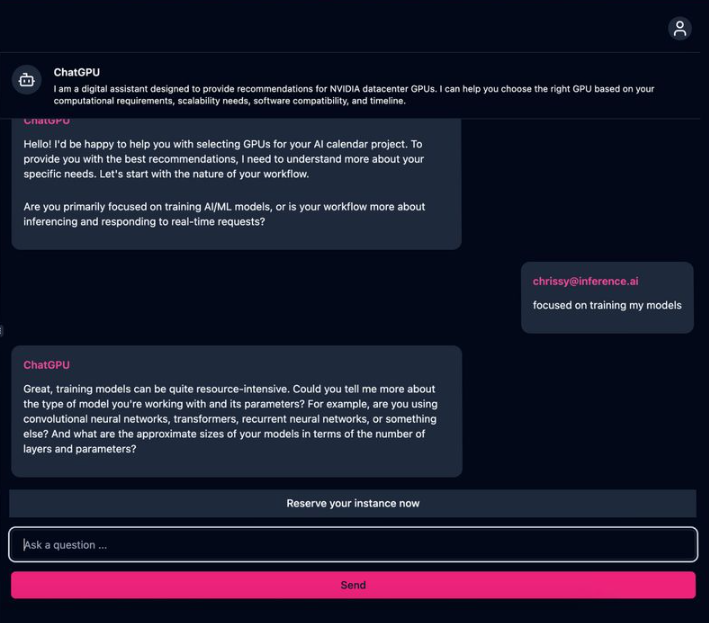

GPU cloud operator Inference.ai said today its customers can now take advantage of a free generative artificial intelligence-powered assistant to help them select the most appropriate graphics processing unit for their training and inference workloads.

The company is one of a growing number of startups operating GPU clouds for companies that want to access the critical infrastructure for AI models without making significant investments in the necessary hardware. GPUs are not only expensive, but they’re also in short supply, so the idea of renting them only when they’re needed has a lot of appeal.

Inference.ai offers an extensive selection of GPUs, with a fleet of 18 different processors from Nvidia Corp., including its most advanced H100 model. It also offers four models from Nvidia’s rival Advanced Micro Devices Inc., including the all-new MI300X that was specifically designed to handle generative AI workloads.

The company has also built a globally distributed network of data centers, enabling its customers to access its GPUs with low latency from anywhere in the world, which can make a real difference for real-time applications. Moreover, it claims to offer some of the lowest prices in the industry, with rates that are up to 82% cheaper than those offered by cloud infrastructure giants such as Amazon Web Services Inc., Microsoft Azure and Google Cloud.

Now, Inference.ai intends to stand out from the competition even more, providing access to a new generative AI tool that’s designed to bring greater clarity to customers when it comes to choosing the most appropriate GPU based on their performance requirements and budgets.

According to the startup, its generative AI chatbot asks users simple natural-language questions about their product concept to identify their specific compute requirements. It then suggests the GPU setup that will bring their AI projects to life the fastest. The company said it’s all about adding transparency to the process and reassuring customers that they’re investing in the right GPU hardware.

Inference.ai claims that there is a lot of uncertainty at present because most customers are embarking on AI projects for the first time and lack the experience to know what kind of infrastructure they need. Many customers have no idea which GPU model is most suitable, how many of the chips they’ll actually need or how long it will take to train their AI models.
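To make the sizing question concrete, here is a back-of-the-envelope sketch of how chip count and training time relate. It is not Inference.ai's method, which the company has not described. It assumes the widely used rule of thumb that training a dense transformer costs roughly 6 × parameters × tokens floating-point operations, and every name and input value below is a hypothetical placeholder rather than a measured figure.

```python
import math

SECONDS_PER_DAY = 86_400


def training_flops(params: float, tokens: float) -> float:
    """Approximate total training compute via the ~6 * N * D rule of thumb."""
    return 6.0 * params * tokens


def estimate_training_days(params: float, tokens: float, num_gpus: int,
                           peak_flops_per_gpu: float,
                           utilization: float = 0.4) -> float:
    """Rough wall-clock days for a run.

    `utilization` is the assumed fraction of peak throughput actually
    achieved; real values vary widely by model and software stack.
    """
    if num_gpus <= 0 or peak_flops_per_gpu <= 0 or not 0 < utilization <= 1:
        raise ValueError("num_gpus, peak_flops_per_gpu and utilization must be positive")
    effective_flops_per_second = num_gpus * peak_flops_per_gpu * utilization
    return training_flops(params, tokens) / effective_flops_per_second / SECONDS_PER_DAY


def gpus_needed(params: float, tokens: float, deadline_days: float,
                peak_flops_per_gpu: float, utilization: float = 0.4) -> int:
    """Smallest GPU count that would finish within the deadline under the same assumptions."""
    if deadline_days <= 0 or peak_flops_per_gpu <= 0 or not 0 < utilization <= 1:
        raise ValueError("deadline_days, peak_flops_per_gpu and utilization must be positive")
    per_gpu_flops = peak_flops_per_gpu * utilization * deadline_days * SECONDS_PER_DAY
    return math.ceil(training_flops(params, tokens) / per_gpu_flops)


if __name__ == "__main__":
    # Placeholder inputs: a 1B-parameter model, 20B tokens, 8 GPUs at 1e14 FLOP/s peak.
    days = estimate_training_days(1e9, 2e10, num_gpus=8,
                                  peak_flops_per_gpu=1e14, utilization=0.5)
    print(f"Estimated training time: {days:.1f} days")
```

Even a crude estimate like this shows why the answer is hard to guess: halving the assumed utilization doubles either the time or the number of chips required.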

Inference.ai Chief Executive John Yue likens the process of selecting GPU resources to buying a car, saying that no one would open their wallet without first taking the car for a test drive. “People are buying two-year GPU commitments based on blind guesswork for how much processing power they need, and GPUs are much more expensive than a car,” he pointed out. “Our solution makes it clear exactly what GPU is needed before they spend anything.”

The generative AI bot, available in beta testing now, is designed to guide users through the entire process of GPU sourcing. In the first step, the customer describes, in plain English, the AI product they aim to create, the company said. The bot then responds with questions about the project to clarify the customer’s exact computing needs. For instance: “Is the AI model being trained from scratch, or does it leverage an existing model?”

Once the bot has identified the customer’s computational requirements, budget, scalability needs, software compatibility and timeline, it offers a refined list of the most appropriate GPUs. That includes a specific recommendation and a couple of alternatives in case the customer wants to prioritize cost, speed or some other factor. Finally, once the customer makes a choice, the bot connects them to Inference.ai’s sales team to take care of payment and deployment.
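The "one recommendation plus a couple of alternatives" step can be pictured as a simple filter-and-rank pass over a catalog. The sketch below is purely illustrative and is not Inference.ai's implementation. The `GpuOption` fields, the `recommend` function and every catalog entry are hypothetical, and the prices and speeds are made-up placeholders rather than real rates or benchmarks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuOption:
    name: str              # hypothetical product label
    memory_gb: int         # on-board memory
    hourly_usd: float      # placeholder rental price
    relative_speed: float  # placeholder throughput score (higher is faster)


# Made-up catalog for illustration only; not real hardware or pricing.
CATALOG = [
    GpuOption("gpu-small", 24, 0.50, 1.0),
    GpuOption("gpu-medium", 48, 1.20, 2.0),
    GpuOption("gpu-large", 80, 2.50, 4.0),
    GpuOption("gpu-xl", 80, 4.00, 5.0),
]


def recommend(catalog, min_memory_gb, max_hourly_usd, priority="cost", alternatives=2):
    """Return (best, alternatives) among options that meet the hard constraints.

    `priority` selects the ranking: "cost" prefers the cheapest eligible
    option, "speed" prefers the fastest. Ties fall back to the other criterion.
    """
    if priority == "cost":
        sort_key = lambda g: (g.hourly_usd, -g.relative_speed)
    elif priority == "speed":
        sort_key = lambda g: (-g.relative_speed, g.hourly_usd)
    else:
        raise ValueError(f"unknown priority: {priority!r}")

    eligible = [g for g in catalog
                if g.memory_gb >= min_memory_gb and g.hourly_usd <= max_hourly_usd]
    ranked = sorted(eligible, key=sort_key)
    if not ranked:
        return None, []
    return ranked[0], ranked[1:1 + alternatives]


if __name__ == "__main__":
    best, others = recommend(CATALOG, min_memory_gb=40, max_hourly_usd=3.00, priority="speed")
    print("Recommended:", best.name)
    print("Alternatives:", [g.name for g in others])
```

Switching `priority` from "speed" to "cost" reorders the same eligible set, which mirrors the article's point that the alternatives exist for customers who weigh the trade-offs differently.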

As an example, Inference.ai said a customer might instruct the bot that it’s aiming to create an AI application that uses large language models to illustrate pictures for comic book artists. The bot will ask pointed questions about the training requirements for the model, including the number of pages it will be asked to illustrate, the resolution of the artwork and the total file size of the training data to be used. These insights will then help it to select the best GPU based on the customer’s responses.

In addition, the bot can cater to more knowledgeable buyers who already have a good idea of their hardware needs, the company said.

“Our goal is to make it easy for anyone to create AI products, without having to worry about making mistakes with their infrastructure investments,” Yue said. “Guesswork should never be the foundation of your company’s future.”

Holger Mueller of Constellation Research Inc. said AI is increasingly being used in almost every industry, so it’s no surprise to see that the technology is now being applied to further the adoption of AI itself. “In this instance the innovation is coming from the startup field, with Inference.ai using AI to help companies calculate their GPU capacity needs to optimize costs and ensure their projects are completed on schedule,” the analyst said. “It’s addressing what is undoubtedly a significant challenge for many enterprises. How well it actually works remains to be seen, but it’s an interesting differentiator and it’s always good to see new innovations.”
