AI

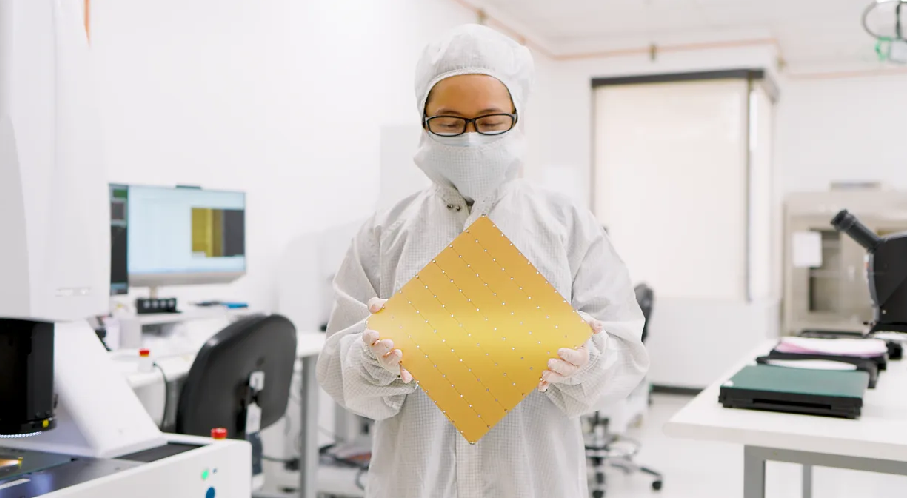

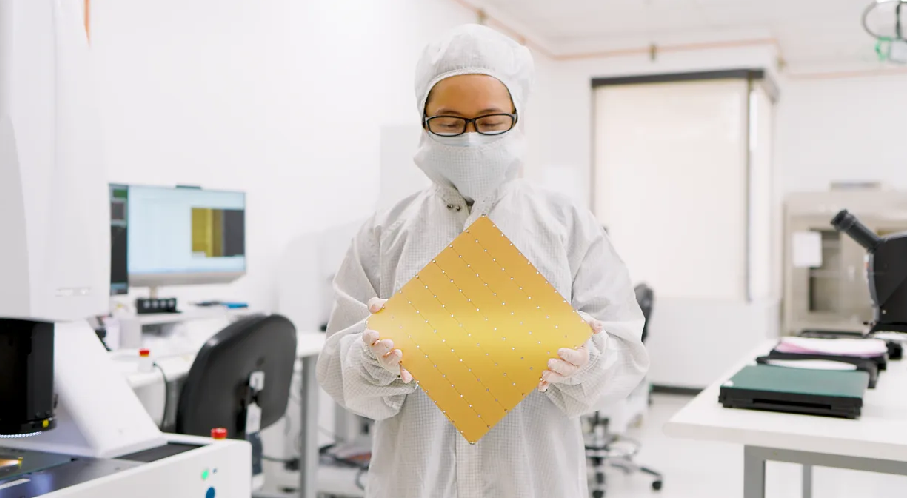

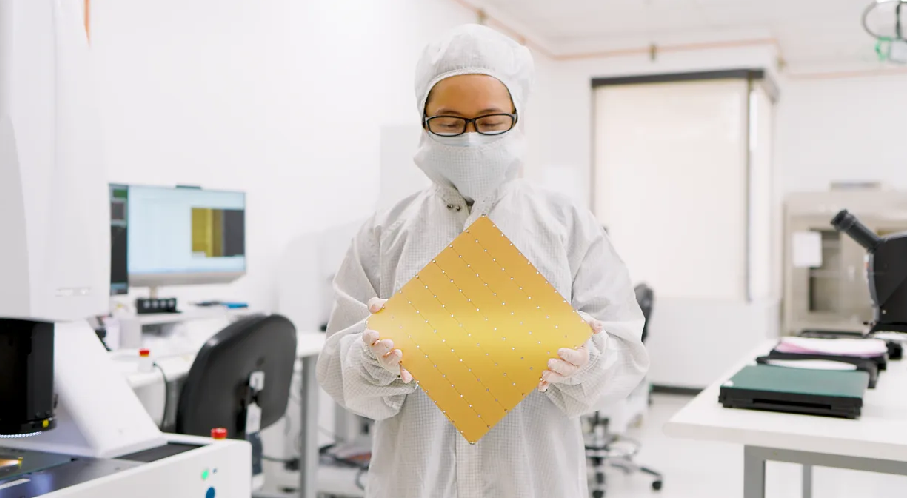

Semiconductor startup Cerebras Systems Inc. today debuted a new wafer-sized chip, the WSE-3, that features 4 trillion transistors organized into nearly 1 million cores.

The processor is optimized for artificial intelligence training workloads. Cerebras claims a server cluster equipped with 2,048 WSE-3 chips can train Llama 2 70B, one of the most advanced open-source language models on the market, in under one day. By comparison, the model originally took 1.7 million graphics card hours to train.
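For a rough sense of scale, the figures above can be turned into device-hours. The sketch below takes the article's numbers at face value and treats "under one day" as a 24-hour upper bound, which is an assumption. A WSE-3 chip-hour and a GPU-hour are not equivalent units, so the ratio only indicates how many fewer device-hours the claim implies, not a per-chip speedup.

```python
# Figures quoted in the article.
WSE3_CHIPS = 2048
ORIGINAL_GPU_HOURS = 1_700_000

# Assumption: "under one day" is treated as an upper bound of 24 hours.
MAX_TRAINING_HOURS = 24


def chip_hours(chips: int, hours: int) -> int:
    """Total device-hours consumed by `chips` devices running for `hours`."""
    return chips * hours


def device_hour_ratio(gpu_hours: int, wse_chip_hours: int) -> float:
    """How many GPU-hours correspond to one WSE-3 chip-hour in this comparison."""
    return gpu_hours / wse_chip_hours


upper_bound = chip_hours(WSE3_CHIPS, MAX_TRAINING_HOURS)
print(upper_bound)                                                   # 49152
print(round(device_hour_ratio(ORIGINAL_GPU_HOURS, upper_bound), 1))  # 34.6
```

In other words, the claimed run would use at most about 49,000 chip-hours, roughly 35 times fewer device-hours than the original training.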

The WSE-3 (pictured) is an improved version of a chip called the WSE-2 that Cerebras debuted in 2021. It features 1.4 trillion more transistors and 50,000 more compute cores, for a total of 900,000, along with 44 gigabytes of onboard SRAM. The enhanced specifications stem partly from Cerebras' switch from a seven-nanometer manufacturing process to a newer five-nanometer node.

According to the company, the WSE-3 provides up to twice the performance of its predecessor for AI workloads. It can achieve peak speeds of 125 petaflops, with 1 petaflop equal to 1,000 trillion computations per second. The WSE-3 promises to provide that performance at the same price as its predecessor and without drawing more power.
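The unit conversion is easy to check by hand. The sketch below converts the quoted peak figure into raw computations per second and, as a purely theoretical ceiling, multiplies it across the 2,048-chip cluster mentioned earlier. It assumes every chip sustains its peak rate with perfect scaling, which real workloads do not achieve.

```python
# 1 petaflop = 1,000 trillion = 10**15 computations per second.
OPS_PER_PETAFLOP = 10**15
PETAFLOPS_PER_EXAFLOP = 1000

# Figures quoted in the article.
WSE3_PEAK_PETAFLOPS = 125
CLUSTER_CHIPS = 2048


def petaflops_to_ops(petaflops: int) -> int:
    """Convert petaflops to computations per second."""
    return petaflops * OPS_PER_PETAFLOP


def cluster_peak_exaflops(chips: int, petaflops_per_chip: int) -> float:
    """Theoretical aggregate peak, assuming perfect scaling (an idealization)."""
    return chips * petaflops_per_chip / PETAFLOPS_PER_EXAFLOP


print(petaflops_to_ops(WSE3_PEAK_PETAFLOPS))                      # 125000000000000000
print(cluster_peak_exaflops(CLUSTER_CHIPS, WSE3_PEAK_PETAFLOPS))  # 256.0
```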

Cerebras will sell its chip as part of a data center appliance called the CS-3 that is about the size of a mini fridge. Each system includes a single WSE-3, as well as built-in liquid cooling and power delivery modules. The latter components are redundant: a standby unit is ready to take over in the event of an outage, and individual modules can be taken offline for maintenance without shutting down the entire system.

Cerebras positions the WSE-3 as a more efficient alternative to Nvidia Corp.’s market-leading graphics cards. The chip features about 52 times more cores than a single Nvidia H100 GPU. According to Cerebras, using one large processor instead of multiple graphics cards makes the AI training workflow more efficient.

An AI model is a collection of relatively simple code snippets known as artificial neurons. Those neurons are organized into collections called layers. When an AI model receives a new task, each of its layers performs a portion of the task and then combines its results with the data generated by the others.
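The idea of neurons grouped into layers can be illustrated with a toy feed-forward network. This is a minimal sketch, not how any production model or Cerebras' software is implemented: the weights, the ReLU activation and the two-layer shape are all made up for illustration.

```python
def relu(x: float) -> float:
    """A common activation function: pass positives through, clamp negatives to 0."""
    return max(0.0, x)


def neuron(inputs, weights, bias):
    """One artificial neuron: a weighted sum of its inputs plus a bias, then an activation."""
    return relu(sum(i * w for i, w in zip(inputs, weights)) + bias)


def layer(inputs, weight_rows, biases):
    """A layer is a collection of neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


def forward(inputs, layers):
    """Run the input through each layer in turn; each layer consumes the previous one's output."""
    activations = inputs
    for weight_rows, biases in layers:
        activations = layer(activations, weight_rows, biases)
    return activations


# Hypothetical weights: layer 1 has two neurons, layer 2 has one.
toy_model = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 1.0]], [0.0]),
]

print(forward([2.0, 1.0], toy_model))  # [2.5]
```

Real language models follow the same layer-by-layer pattern, only with billions of weights, which is why the hand-off between layers matters so much for performance.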

The most advanced neural networks are too large to run on a single graphics card. As a result, their layers are distributed across hundreds of GPUs or more. The different AI fragments coordinate their work by frequently exchanging data with one another.

A given AI model layer can only begin analyzing a piece of data once that data arrives from the layer that processed it previously. If the two layers run on separate chips, information can take a significant amount of time to travel between them. The larger the physical distance between the chips, the more time it takes data to move from one GPU to the other, which slows down processing.

Cerebras’ WSE-3 promises to shorten that distance. If all of an AI model’s layers run on a single processor, data only has to travel from one corner of the chip to another rather than between two graphics cards that may be located several feet apart. Reducing the distance that data must cover reduces travel times, which in turn speeds up processing.
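The effect of transfer time on a chain of dependent layers can be sketched with a simple model: total latency is the compute time of every layer plus one transfer for each hand-off between layers. All of the timing values below are hypothetical placeholders chosen only to show the shape of the trade-off. They are not measurements of the WSE-3, of Nvidia GPUs or of any real interconnect.

```python
def pipeline_latency_ns(num_layers: int, compute_ns_per_layer: int,
                        transfer_ns_per_hop: int) -> int:
    """Latency for one input passing through layers that must run in sequence.

    Each layer waits for the previous layer's output, so there is one
    transfer ("hop") between every pair of adjacent layers.
    """
    if num_layers < 1:
        raise ValueError("num_layers must be at least 1")
    hops = num_layers - 1
    return num_layers * compute_ns_per_layer + hops * transfer_ns_per_hop


# Hypothetical values for illustration only.
LAYERS = 80
COMPUTE_NS = 1000          # time each layer spends computing
ON_CHIP_HOP_NS = 10        # hand-off between layers on the same chip
CROSS_CHIP_HOP_NS = 2000   # hand-off between layers on separate chips

print(pipeline_latency_ns(LAYERS, COMPUTE_NS, ON_CHIP_HOP_NS))     # 80790
print(pipeline_latency_ns(LAYERS, COMPUTE_NS, CROSS_CHIP_HOP_NS))  # 238000
```

With these made-up numbers the compute work is identical in both cases, and the entire difference comes from the hand-offs between layers.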

“When we started on this journey eight years ago, everyone said wafer-scale processors were a pipe dream,” said Cerebras co-founder and Chief Executive Andrew Feldman. “WSE-3 is the fastest AI chip in the world, purpose-built for the latest cutting-edge AI work, from mixture of experts to 24 trillion parameter models.”

Cerebras detailed the chip today alongside a new partnership with Qualcomm Inc. As part of the collaboration, the companies will enable customers to run AI models trained with the WSE-3 on the Cloud AI 100 Ultra, an inference processor that Qualcomm introduced last year. They will also offer several software tools designed to improve the performance of customers’ AI models.
