AI

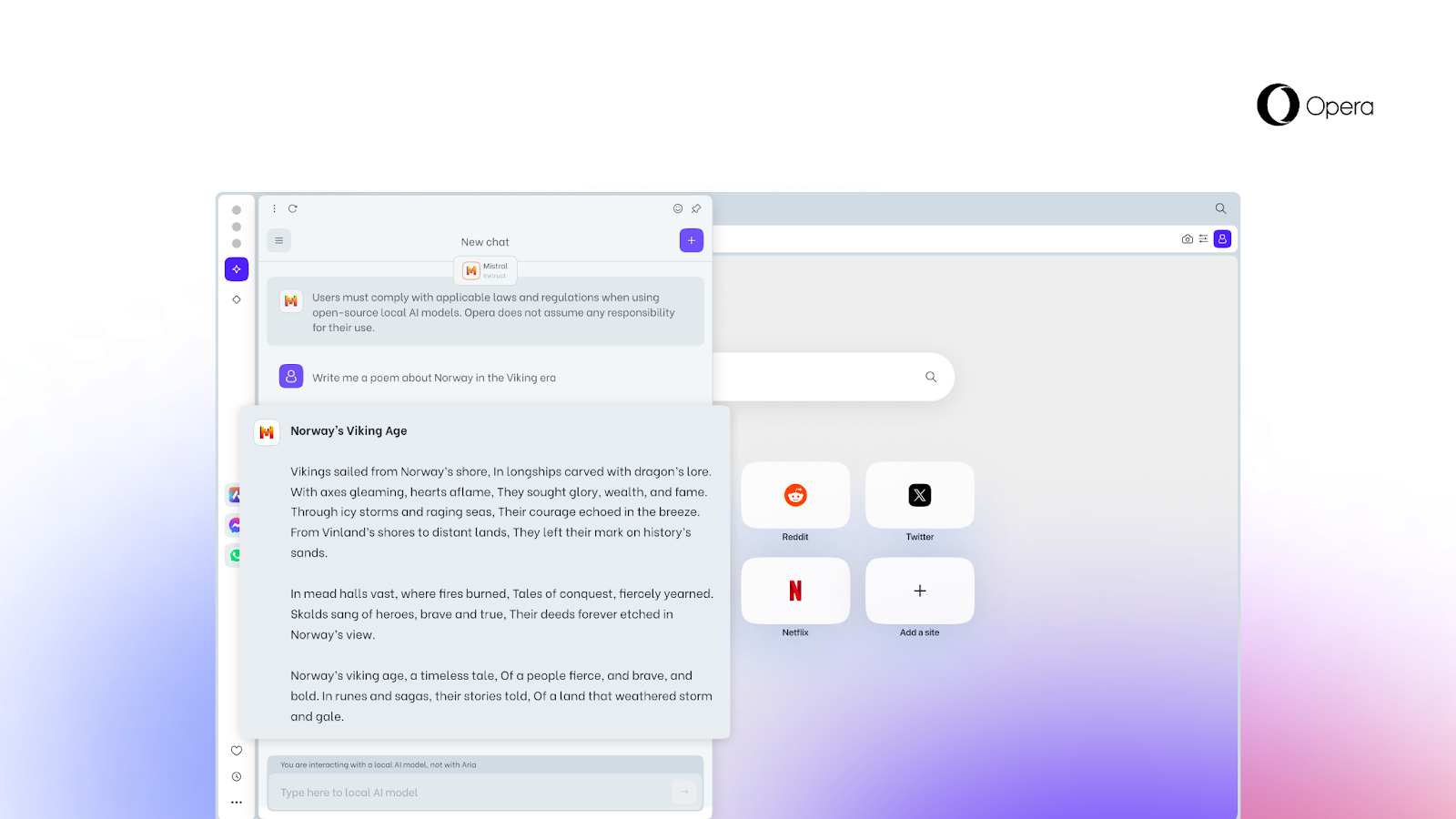

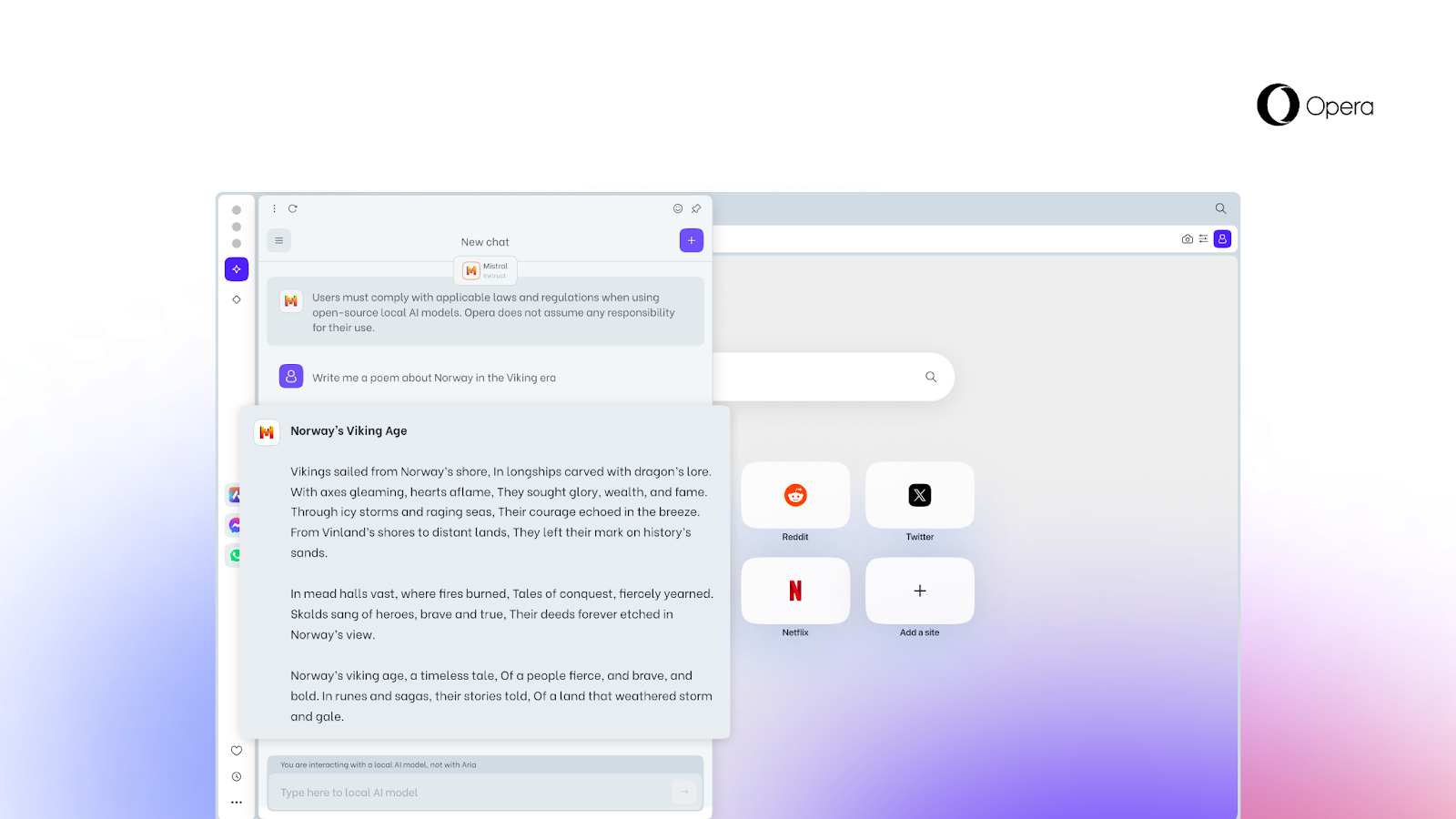

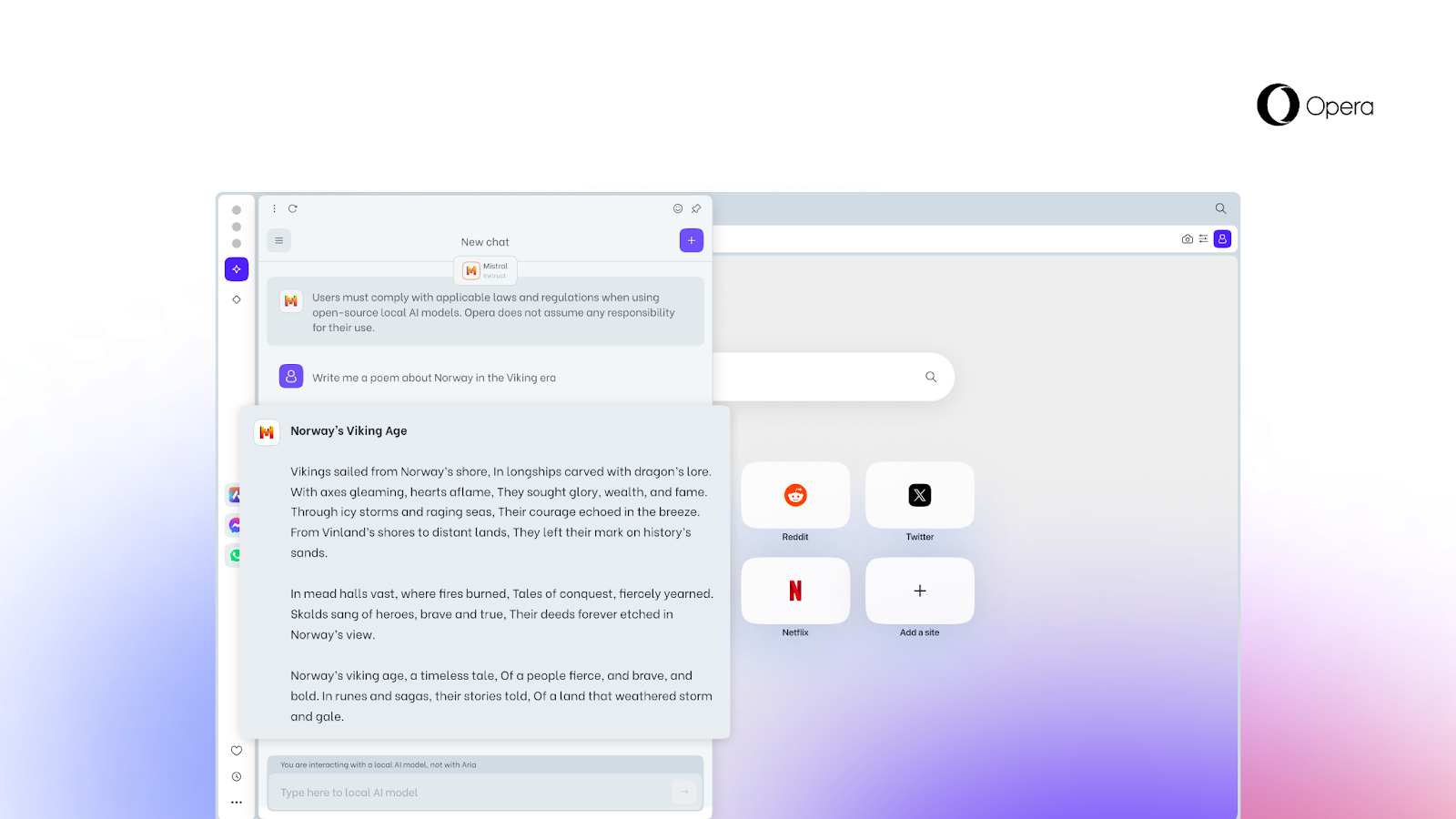

Web browser developer Opera announced today that it’s adding experimental support for 150 local large language model variants from about 50 families of models to its Opera One browser.

Local LLMs let users run AI models directly on their own computer, so prompts are processed without ever leaving the machine. Because no data is sent across the internet, latency is lower, and the prompts can’t be used to train another model.

Users seeking the enhanced privacy of being able to process prompts locally will be able to test the new LLMs even while not connected to a network.

The new feature is part of Opera’s AI Feature Drops Program and is accessible as part of the Opera browser’s developer stream, the company said. Interested users will need to upgrade to the newest version of Opera Developer to access local LLMs.

“Introducing Local LLMs in this way allows Opera to start exploring ways of building experiences and knowhow within the fast-emerging local AI space,” said Krystian Kolondra, executive vice president of browsers and gaming at Opera.

Local models supported in Opera One include Llama from Meta Platforms Inc., Gemma from Google LLC, Mixtral from Mistral AI, and Vicuna. Most local LLMs require between 2 and 10 gigabytes of local storage space per variant. Once activated, a local model is used in place of Aria, Opera’s native browser AI.

To download and activate one of the new variants, users will need to open Aria Chat, choose “local mode” from the drop-down and go to settings to pick a model. From there, they can download and activate as many models as they like.

There are numerous local LLMs to check out, including Code Llama from Meta, an extension of Llama that lets users discuss code in languages such as Python, C++, Java, PHP and C#. There’s also Phi-2 from Microsoft Corp., a 2.7 billion-parameter model with reasoning and language capabilities suited to question answering, chat and code assistance. In addition, there’s Mixtral, a model that excels at natural language processing tasks such as answering questions, classification, writing poetry, helping to answer emails and writing tweets.
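For a rough sense of how a model’s parameter count relates to the 2-to-10-gigabyte storage range mentioned above, the sketch below estimates the size of the weights alone as parameters times bits per weight. The bit widths are illustrative assumptions; the article does not say which precisions Opera’s downloadable variants use, and real files carry some extra overhead.

```python
def estimated_size_gb(num_parameters: float, bits_per_weight: int) -> float:
    """Rough size of the model weights alone, in decimal gigabytes."""
    return num_parameters * bits_per_weight / 8 / 1e9


# Parameter count quoted for Phi-2 in the article.
PHI2_PARAMETERS = 2.7e9

# Assumed precisions, chosen for illustration only.
for bits in (4, 8, 16):
    size = estimated_size_gb(PHI2_PARAMETERS, bits)
    print(f"{bits}-bit weights: about {size:.2f} GB")
```

Under these assumptions, a model of Phi-2’s size lands at the low end of the quoted range or just below it, while models with several times as many parameters would fill out the upper end.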
