AI

OpenAI today debuted a set of new tools that will make it easier to optimize its large language models for specific tasks.

Most of the additions are rolling out for the company’s fine-tuning application programming interface, which it launched last August. The API allows customers to supply OpenAI language models with additional data not included in their built-in knowledge base. A retailer, for example, could provide information about its merchandise to GPT-4 and then use the model to field customers’ product inquiries.
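As a rough illustration of the retailer example, the snippet below turns a few product records into chat-style training examples serialized as JSON Lines, which is the general shape OpenAI documents for fine-tuning its chat models. The product catalog, the system prompt and the `to_training_example` and `to_jsonl` helpers are hypothetical. This is a sketch of data preparation under those assumptions, not OpenAI's own tooling.

```python
import json

# Hypothetical product catalog; a real retailer would load this from its own systems.
PRODUCTS = [
    {"name": "Trail Runner 2", "price": "$89", "detail": "a lightweight running shoe"},
    {"name": "Summit Jacket", "price": "$149", "detail": "a waterproof shell jacket"},
]

# Hypothetical system prompt shared by every training example.
SYSTEM_PROMPT = "You answer customer questions about our store's products."


def to_training_example(product):
    """Build one chat-format example: system prompt, user question, ideal answer."""
    answer = f"The {product['name']} is {product['detail']} priced at {product['price']}."
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": f"What is the {product['name']}?"},
            {"role": "assistant", "content": answer},
        ]
    }


def to_jsonl(products):
    """Serialize one JSON object per line, as JSON Lines files expect."""
    return "\n".join(json.dumps(to_training_example(p)) for p in products)


jsonl_text = to_jsonl(PRODUCTS)
print(jsonl_text)
```

Each line of the output is a self-contained conversation, so a training file can be extended simply by appending more lines.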

Fine-tuning an LLM using external data is a complicated process with the potential for technical errors. If a malfunction emerges, the LLM may fail to properly ingest the information it’s given, which can limit its usefulness. The first new enhancement that OpenAI introduced for its fine-tuning API today is designed to address that challenge.

An AI fine-tuning project is typically divided into phases known as epochs. In each epoch, the model makes one full pass over the dataset with which it’s being fine-tuned. Malfunctions often emerge not during the first epoch of a fine-tuning project but rather in subsequent ones.

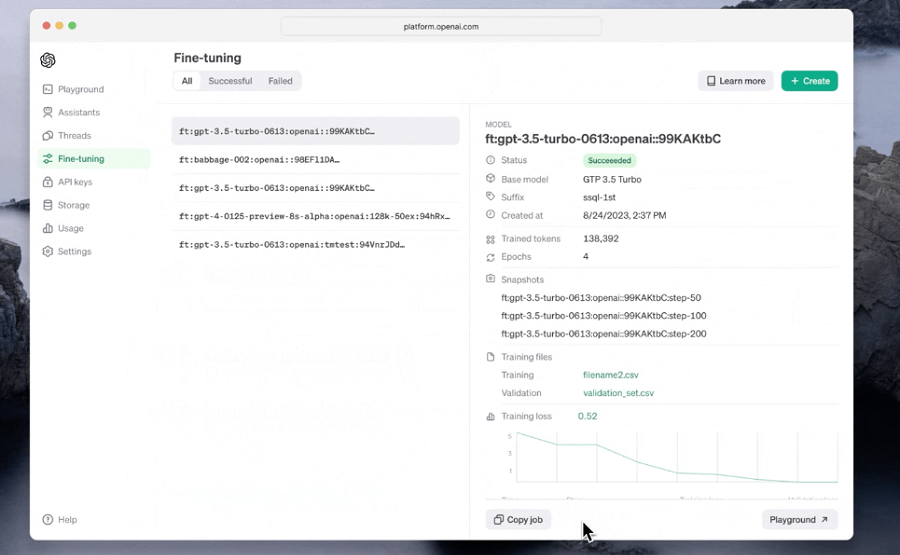

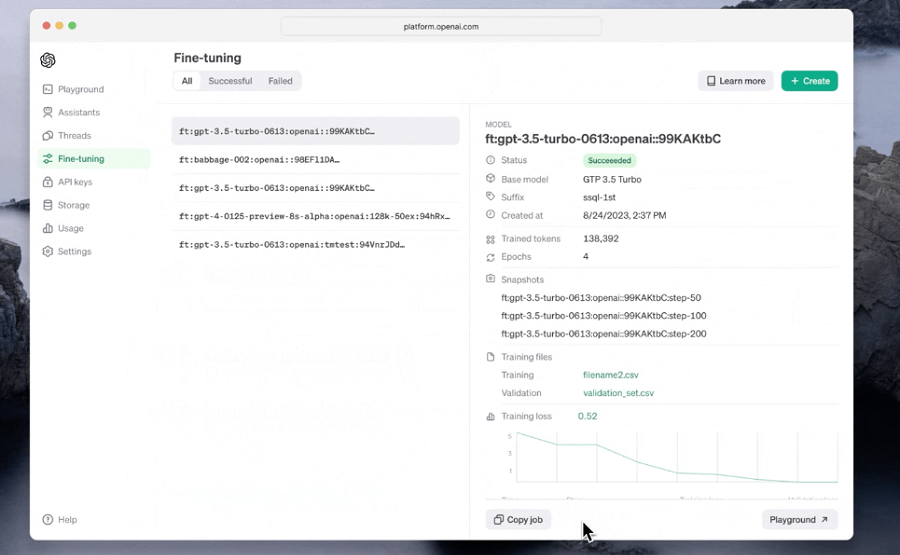

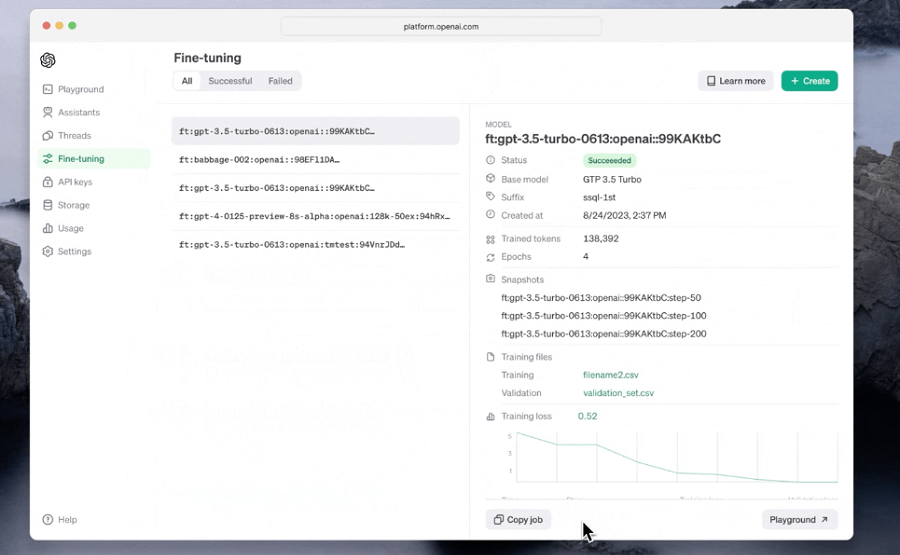

Using OpenAI’s enhanced fine-tuning API, developers can now save a copy of an AI model after each of the epochs in a training run. If a malfunction emerges in the fifth epoch, the project can be restarted from the fourth epoch. That removes the need to start everything from scratch, which in turn reduces the amount of time and effort needed to correct fine-tuning errors.
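The idea of resuming from a per-epoch checkpoint can be sketched with a toy training loop. Everything here is an assumption made for illustration: `train_one_epoch` merely nudges two numbers, the `fail_at` argument simulates a malfunction, and checkpoints live in an in-memory dictionary. It does not reflect how OpenAI stores or restores checkpoints.

```python
import copy


def train_one_epoch(state, epoch, fail_at=None):
    """Toy stand-in for one pass over the training data.

    Raises RuntimeError when epoch == fail_at to simulate a malfunction.
    """
    if epoch == fail_at:
        raise RuntimeError(f"simulated failure in epoch {epoch}")
    new_state = copy.deepcopy(state)
    new_state["weights"] = [w + 0.1 for w in state["weights"]]
    new_state["epochs_done"] = epoch
    return new_state


def run(total_epochs, checkpoints, fail_at=None):
    """Train from the latest saved checkpoint, saving a copy after every epoch."""
    start = max(checkpoints)  # highest epoch number saved so far
    state = copy.deepcopy(checkpoints[start])
    for epoch in range(start + 1, total_epochs + 1):
        state = train_one_epoch(state, epoch, fail_at)
        checkpoints[epoch] = copy.deepcopy(state)
    return state


# Checkpoint 0 is the untrained starting point.
checkpoints = {0: {"weights": [0.0, 0.0], "epochs_done": 0}}

try:
    run(6, checkpoints, fail_at=5)  # malfunction during the fifth epoch
except RuntimeError as err:
    print(err, "| last good checkpoint:", max(checkpoints))

final = run(6, checkpoints)  # resumes at epoch 5 from the epoch-4 checkpoint
print("epochs completed:", final["epochs_done"])
```

Because the first four epochs are already saved, the second call repeats only the fifth and sixth epochs rather than the whole run.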

The feature is rolling out alongside a new side-by-side comparison view in the Playground UI. According to OpenAI, developers can use it to compare different versions of a fine-tuned model. A software team could, for example, test how a model answers a question after the fifth epoch and then enter the same question two epochs later to determine if the response accuracy has improved.

OpenAI is also enhancing its existing AI fine-tuning dashboard. According to the company, developers can now more easily customize models’ hyperparameters. Those are configuration settings, such as the number of epochs and the learning rate, that govern how a training run proceeds and thereby influence the accuracy of the resulting model’s responses.
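To give a sense of why hyperparameters matter, the toy example below fits a single weight by gradient descent and compares three learning rates over the same number of epochs. The data, the `train` function and the chosen values are all made up for illustration; fine-tuning an LLM involves far more parameters, but the sensitivity to such settings is similar in spirit.

```python
def train(learning_rate, n_epochs):
    """Fit y = w * x by full-batch gradient descent and return the final loss."""
    xs = [1.0, 2.0, 3.0]
    ys = [2.0, 4.0, 6.0]  # the ideal weight is 2.0
    w = 0.0
    for _ in range(n_epochs):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= learning_rate * grad
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Loss before any training, for comparison.
initial_loss = train(learning_rate=0.1, n_epochs=0)
print(f"initial loss = {initial_loss:.4g}")

for lr in (0.01, 0.1, 0.3):
    print(f"learning_rate={lr}: loss after 5 epochs = {train(lr, 5):.4g}")
```

In this sketch the smallest learning rate improves slowly, the middle one converges quickly, and the largest overshoots so badly that the loss ends up higher than where it started.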

The overhauled dashboard also provides access to more detailed technical data about fine-tuning sessions. For good measure, OpenAI has added the ability to stream that data to third-party AI development tools. The first integration is rolling out for Weights and Biases, a model creation platform developed by a well-funded startup of the same name.

For enterprises that require more advanced model optimization features, OpenAI today introduced a new offering called assisted fine-tuning. It gives customers access to additional hyperparameters for tuning a model. Customers can also optimize their LLMs using a family of techniques called parameter-efficient fine-tuning, or PEFT, which makes it possible to update only a small subset of a model’s parameters rather than all of them.
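OpenAI has not said here which PEFT methods it uses, so the sketch below picks one widely used method, low-rank adaptation (LoRA), purely as an illustration. In LoRA, the original weight matrix stays frozen and only two small matrices are trained; their product is added to the frozen weights. The matrices, dimensions and helper names are hypothetical, and the scaling factor that LoRA implementations usually apply is omitted for brevity.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_param_counts(d_out, d_in, rank):
    """Return (full, lora) trainable parameter counts for one weight matrix."""
    full = d_out * d_in           # full fine-tuning updates every entry of W
    lora = rank * (d_out + d_in)  # LoRA trains B (d_out x rank) and A (rank x d_in)
    return full, lora


def effective_weight(w_frozen, b, a):
    """Combine the frozen weights with the low-rank update: W + B @ A."""
    delta = matmul(b, a)
    return [
        [w + d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(w_frozen, delta)
    ]


# Tiny example: a frozen 3x3 matrix adapted with a rank-1 update.
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
B = [[1.0], [0.0], [2.0]]  # 3 x 1, trainable
A = [[0.5, 0.0, 0.5]]      # 1 x 3, trainable

adapted = effective_weight(W, B, A)
full_count, lora_count = lora_param_counts(d_out=4096, d_in=4096, rank=8)
print(adapted)
print(full_count, lora_count)
```

For a 4096-by-4096 matrix with rank 8, the trainable parameter count in this sketch drops from roughly 16.8 million to about 65,000, which is where the efficiency in the name comes from.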
