AI

Nvidia Corp. today disclosed that it has acquired Run:ai, a startup with software for optimizing the performance of graphics card clusters.

The terms of the deal were not disclosed. TechCrunch, citing two people familiar with the matter, reported that the transaction values Run:ai at $700 million. That’s nearly six times the amount of funding the Tel Aviv-based startup had raised prior to the acquisition.

Run:ai, officially Runai Labs Ltd., provides software for speeding up server clusters equipped with graphics processing units. According to the company, a GPU environment powered by its technology can run up to 10 times more AI workloads than would otherwise be possible. It boosts AI performance by fixing several common processing inefficiencies that often affect GPU-powered servers.

The first issue Run:ai addresses stems from the fact that AI models are often trained using multiple graphics cards. To distribute a neural network across a cluster of GPUs, developers split it into multiple software fragments and train each one on a different chip. Those AI fragments must regularly exchange data with one another during the training process, which can lead to performance issues.

If an AI fragment must exchange data with a different part of the neural network that currently isn’t running, it will have to suspend processing until the latter module comes online. The resulting delays slow down the AI training workflow. Run:ai ensures all the AI fragments needed to facilitate a data exchange are online at the same time, which removes unnecessary processing delays.
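The idea of keeping every fragment of a model online at the same time resembles what schedulers commonly call gang scheduling. The following is a minimal sketch of that general technique, not Run:ai's actual implementation: the `Job` class, the `gang_schedule` function and the one-GPU-per-fragment rule are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    fragments: int  # hypothetical: each fragment needs exactly one GPU


def gang_schedule(jobs, free_gpus):
    """Start a job only if all of its fragments can run at the same time."""
    running, waiting = [], []
    for job in jobs:
        if job.fragments <= free_gpus:
            # Every fragment gets a GPU, so none has to wait for a peer.
            free_gpus -= job.fragments
            running.append(job.name)
        else:
            # Starting only some fragments would leave them stalled on
            # data exchanges with fragments that are not yet online.
            waiting.append(job.name)
    return running, waiting, free_gpus


jobs = [Job("model-a", 4), Job("model-b", 6), Job("model-c", 2)]
running, waiting, left = gang_schedule(jobs, free_gpus=8)
print(running, waiting, left)
```

In this sketch a job that cannot be placed in full simply waits, which avoids the situation where a running fragment sits idle because its counterpart has no GPU.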

The company’s software also avoids so-called memory collisions. Those are situations where two AI workloads attempt to use the same section of a GPU’s memory at once. GPUs resolve such errors automatically, but the troubleshooting process takes time. Over the course of an AI training session, the time expended on fixing memory collisions can add up significantly and slow down processing.
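One generic way to keep two workloads from touching the same section of memory is to hand each one its own non-overlapping range up front. The sketch below illustrates that idea under stated assumptions; the function name `partition_memory`, the workload names and the sizes are hypothetical, and the article does not say how Run:ai itself avoids collisions.

```python
def partition_memory(total_mib, requests):
    """Assign each workload a disjoint [start, end) range of GPU memory."""
    regions = {}
    offset = 0
    for name, size_mib in requests.items():
        if offset + size_mib > total_mib:
            raise MemoryError(f"not enough GPU memory left for {name}")
        # Ranges are laid out back to back, so no two of them overlap.
        regions[name] = (offset, offset + size_mib)
        offset += size_mib
    return regions


# Hypothetical 40 GiB GPU shared by two workloads.
regions = partition_memory(40960, {"train-a": 8192, "train-b": 16384})
print(regions)
```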

Running multiple AI workloads on the same GPU cluster can also lead to other types of bottlenecks. If one of the workloads requires more hardware than anticipated, it might use infrastructure allocated to the other applications and slow them down. Run:ai includes features that ensure each AI model receives enough hardware resources to complete its assigned task without delays.
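A common way to make sure each workload receives enough hardware is to give it a guaranteed quota and let it borrow only GPUs that nobody else is using. The sketch below shows that general pattern; the `allocate` function, its parameters and the workload names are assumptions for illustration, not a description of Run:ai's features.

```python
def allocate(capacity, guaranteed, requested):
    """Grant guaranteed quotas first, then share out idle GPUs."""
    if sum(guaranteed.values()) > capacity:
        raise ValueError("guaranteed quotas exceed cluster capacity")

    # First pass: no workload can be pushed below its own guarantee.
    grants = {w: min(requested[w], guaranteed[w]) for w in requested}
    spare = capacity - sum(grants.values())

    # Second pass: workloads that want more borrow only idle GPUs,
    # visited in name order to keep the example deterministic.
    for w in sorted(requested):
        extra = min(requested[w] - grants[w], spare)
        grants[w] += extra
        spare -= extra
    return grants


grants = allocate(
    capacity=8,
    guaranteed={"train": 4, "infer": 4},
    requested={"train": 7, "infer": 3},
)
print(grants)
```

Here the training workload asks for more than its quota but only receives the single GPU the inference workload left idle, so the inference workload is not slowed down.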

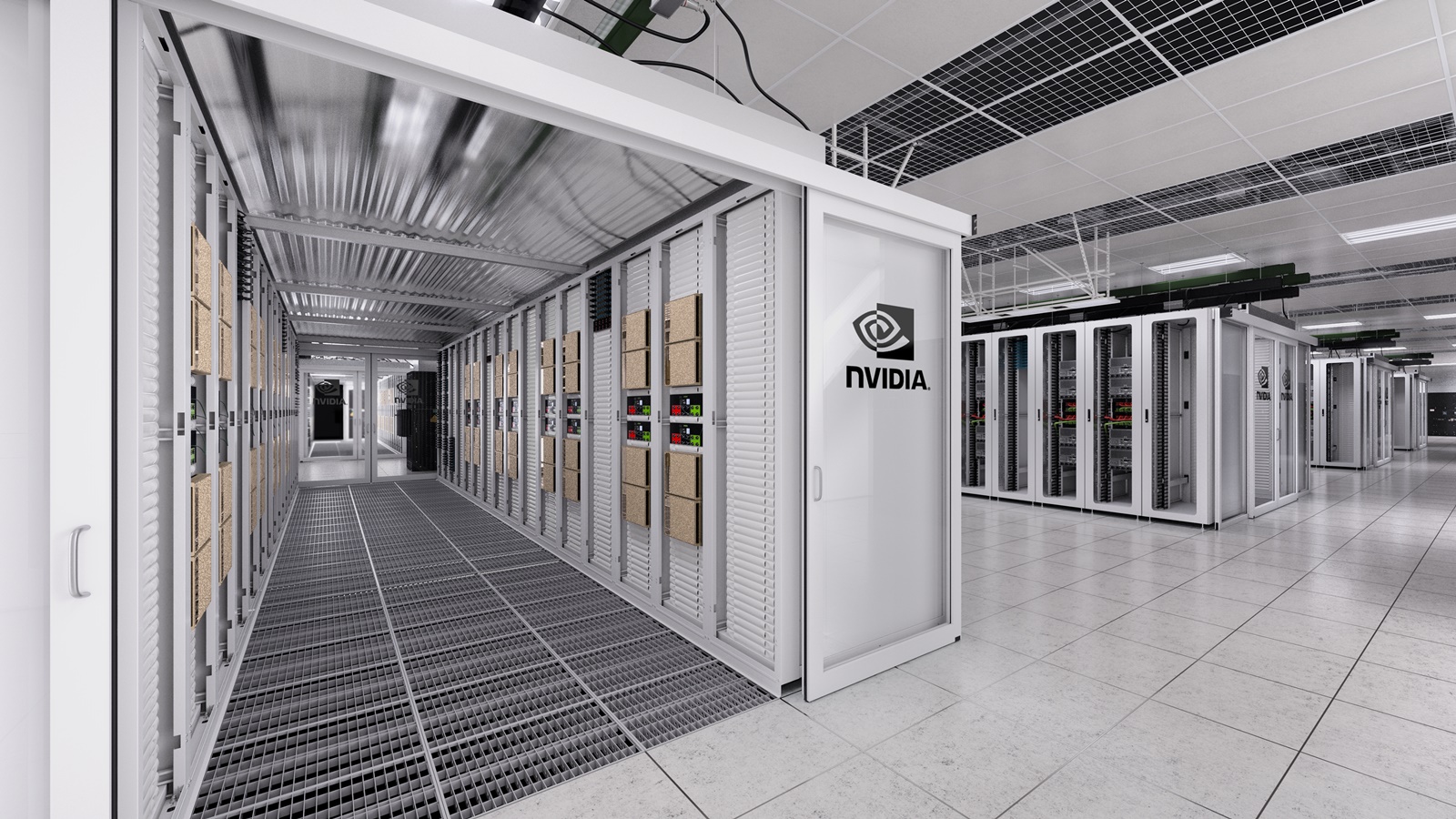

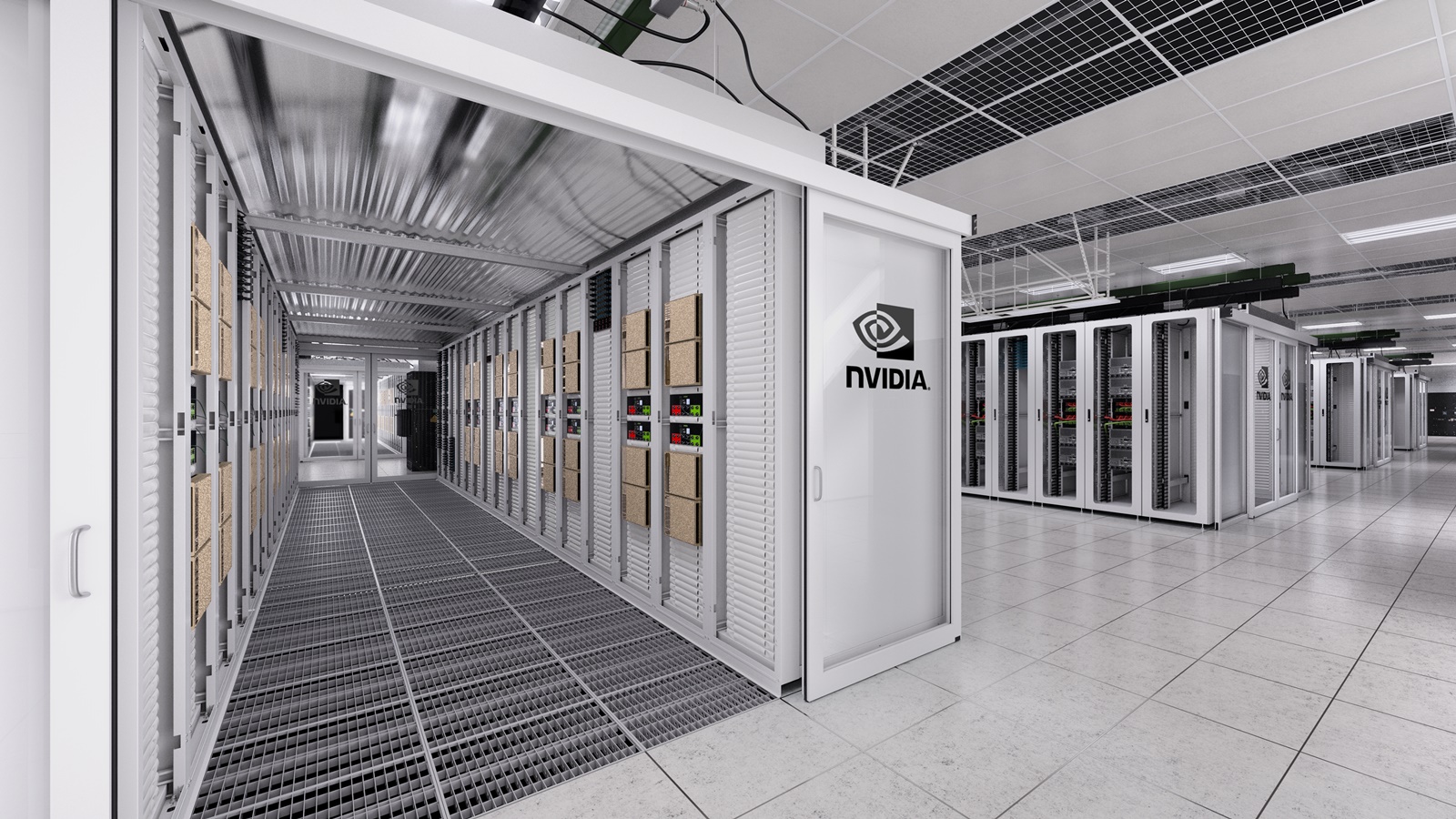

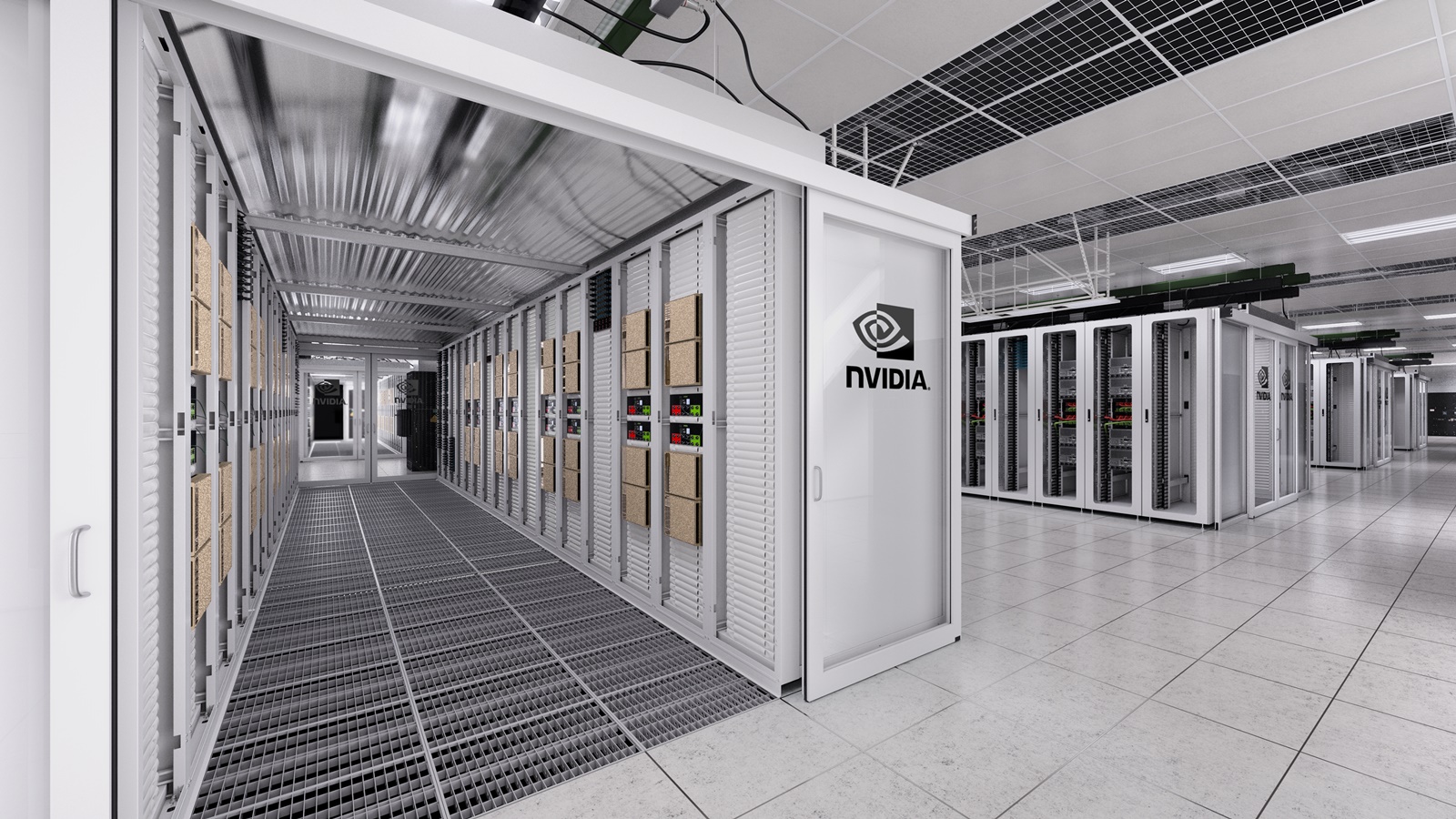

“The company has built an open platform on Kubernetes, the orchestration layer for modern AI and cloud infrastructure,” Alexis Bjorlin, vice president and general manager of Nvidia’s DGX Cloud unit, wrote in a blog post. “It supports all popular Kubernetes variants and integrates with third-party AI tools and frameworks.”

Run:ai sells its core infrastructure optimization platform alongside two other software tools. The first, Run:ai Scheduler, provides an interface for allocating hardware resources to development teams and AI projects. The company also offers Run:ai Dev, which helps engineers more quickly set up the coding tools they use to train neural networks.

Nvidia ships Run:ai’s software with several of its products. The lineup includes Nvidia AI Enterprise, a suite of development tools that the chipmaker provides for its data center GPUs, as well as its DGX series of AI-optimized appliances. Run:ai is also available on DGX Cloud, an offering through which companies can access Nvidia’s AI appliances in the major public clouds.

Bjorlin said the chipmaker will continue offering Run:ai’s tools under the current pricing model “for the foreseeable future.” In parallel, Nvidia will release product enhancements for the software with a focus on features that can help optimize DGX Cloud environments.

“Customers can expect to benefit from better GPU utilization, improved management of GPU infrastructure and greater flexibility from the open architecture,” Bjorlin wrote.
