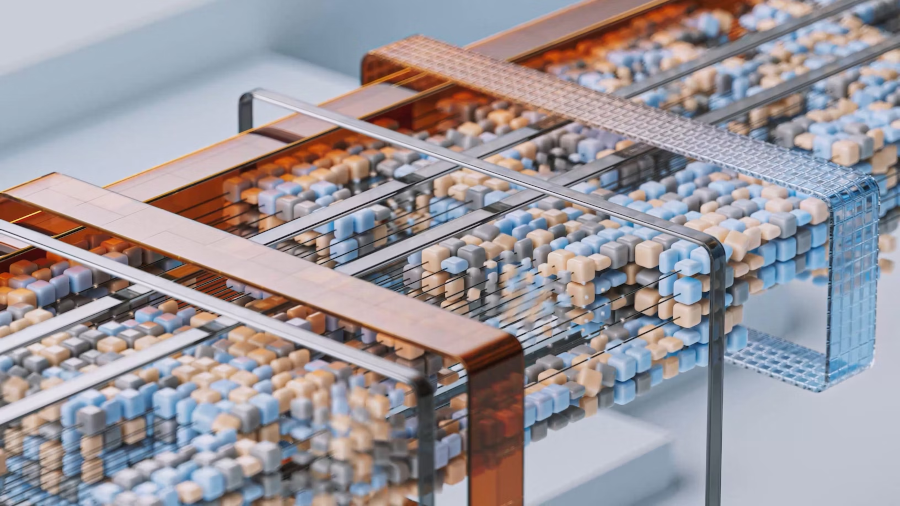

Startup Zyphra Technologies Inc. today debuted Zyda, an artificial intelligence training dataset designed to help researchers build large language models.

The startup, which is backed by an undisclosed amount of funding from Intel Capital, plans to make Zyda available under an open-source license. Zyphra says that the dataset comprises 1.3 trillion tokens’ worth of information. A token is a unit of data that usually corresponds to a few letters or numbers.

The challenge Zyda aims to address is that the large training datasets necessary to build LLMs can be highly time-consuming to assemble. Developers must not only collect the required data but also filter out any unnecessary or inaccurate information it contains. By sparing them from doing this work from scratch, Zyda can potentially reduce the amount of time required to build new LLMs.

Zyda incorporates information from seven existing open-source datasets created to facilitate AI training. Zyphra filtered the original information to remove nonsensical, duplicate and harmful content. According to the company, the result is that an LLM trained on Zyda can perform better than models developed using other open-source datasets.

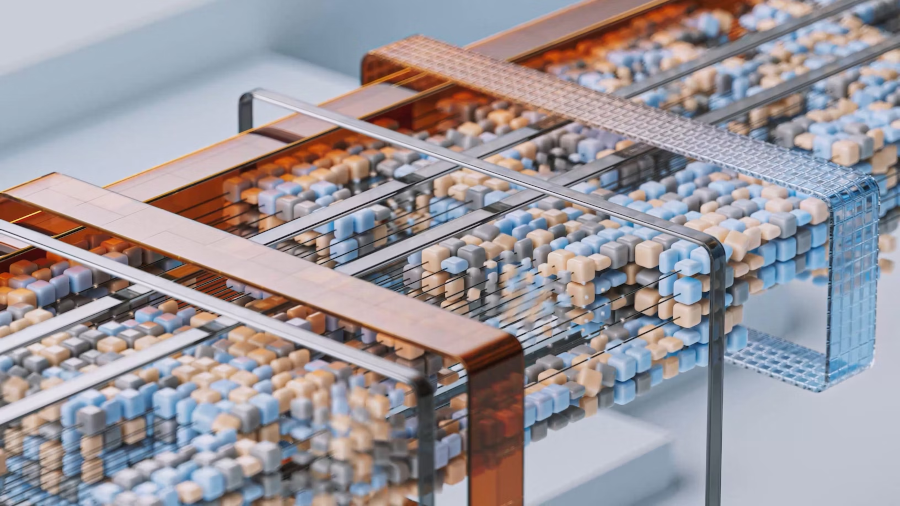

In the first phase of the data preparation process, Zyphra filtered the raw information it collected for the project using a set of custom scripts. The main goal was to remove nonsensical text produced by document formatting errors. According to Zyphra, its scripts automatically removed items such as long sequences of punctuation marks and seemingly random number collections.
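To make the first phase more concrete, here is a minimal Python sketch of the kind of heuristic such cleanup scripts might apply. Zyphra's actual scripts are not described in detail, so the two rules and both thresholds (`MAX_PUNCT_RUN` and `MAX_DIGIT_FRACTION`) are hypothetical illustrations.

```python
import re

# Hypothetical thresholds chosen for illustration only.
MAX_PUNCT_RUN = 10        # longest allowed run of consecutive punctuation characters
MAX_DIGIT_FRACTION = 0.3  # maximum share of non-whitespace characters that may be digits

# Matches any run of more than MAX_PUNCT_RUN characters that are neither
# word characters nor whitespace (i.e. punctuation and symbols).
PUNCT_RUN = re.compile(r"[^\w\s]{%d,}" % (MAX_PUNCT_RUN + 1))


def looks_like_formatting_noise(doc: str) -> bool:
    """Return True if a document shows signs of formatting garbage."""
    if PUNCT_RUN.search(doc):
        return True
    chars = [c for c in doc if not c.isspace()]
    if not chars:
        return True  # empty or whitespace-only documents carry no text
    digit_fraction = sum(c.isdigit() for c in chars) / len(chars)
    return digit_fraction > MAX_DIGIT_FRACTION


def clean_corpus(docs):
    """Keep only the documents that pass both noise heuristics."""
    return [d for d in docs if not looks_like_formatting_noise(d)]


docs = [
    "The quick brown fox jumps over the lazy dog.",
    "!!!!!!!!!!!!!!!!!!!!",
    "4821 9930 1174 5562 0038 7714",
]
print(clean_corpus(docs))  # only the first sentence survives
```

Real pipelines typically stack many such rules; each one is cheap, which matters when scanning trillions of tokens.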

In the second phase of the project, the company deleted harmful content from the dataset. It detected such content by creating a safety threshold based on various textual criteria. When a document exceeded the threshold, Zyphra’s researchers deleted it from the dataset.
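The threshold approach can be sketched as follows, assuming the simplest possible criterion: the share of words in a document that appear on a blocklist. The `FLAGGED_TERMS` placeholders and the `SAFETY_THRESHOLD` value are hypothetical; the article does not say which textual criteria Zyphra actually used.

```python
import re

# Hypothetical placeholder blocklist; a real pipeline would use a curated list
# and likely combine several signals into the score.
FLAGGED_TERMS = {"badword1", "badword2", "badword3"}
SAFETY_THRESHOLD = 0.01  # hypothetical: drop documents where >1% of words are flagged

WORD = re.compile(r"[a-z0-9']+")


def safety_score(doc: str) -> float:
    """Fraction of words in the document that appear on the blocklist."""
    words = WORD.findall(doc.lower())
    if not words:
        return 0.0
    flagged = sum(w in FLAGGED_TERMS for w in words)
    return flagged / len(words)


def drop_unsafe(docs, threshold=SAFETY_THRESHOLD):
    """Remove every document whose score exceeds the threshold."""
    return [d for d in docs if safety_score(d) <= threshold]


docs = [
    "a perfectly ordinary sentence about datasets",
    "badword1 badword2 and some other words",
]
print(drop_unsafe(docs))  # the second document scores 2/6 and is removed
```

Scoring first and thresholding second keeps the cutoff tunable: raising or lowering a single number trades recall of harmful text against accidentally discarding benign documents.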

After removing nonsensical and harmful content, the company deduplicated the data. The process was carried out in two steps.

First, Zyphra analyzed each of the seven open-source datasets that make up Zyda and identified cases where a document appeared multiple times within the same dataset. From there, the company compared the seven datasets with one another to identify overlapping information. By removing the duplicate documents, Zyphra reduced Zyda from the original two trillion tokens to 1.4 trillion.
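A minimal sketch of the two-step deduplication, assuming exact matching on a hash of case- and whitespace-normalized text. This is a simplification swapped in for illustration: large-scale pipelines often also catch near-duplicates with techniques such as MinHash, and the article does not specify Zyphra's method. The dataset names in the example are hypothetical.

```python
import hashlib


def doc_key(doc: str) -> str:
    """Hash of lightly normalized text; identical keys mean exact duplicates."""
    normalized = " ".join(doc.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def dedup_within(dataset):
    """Step 1: drop repeated documents inside a single source dataset."""
    seen, kept = set(), []
    for doc in dataset:
        key = doc_key(doc)
        if key not in seen:
            seen.add(key)
            kept.append(doc)
    return kept


def dedup_across(datasets):
    """Step 2: drop documents already kept from an earlier source dataset."""
    seen, result = set(), {}
    for name, docs in datasets.items():
        kept = []
        for doc in docs:
            key = doc_key(doc)
            if key not in seen:
                seen.add(key)
                kept.append(doc)
        result[name] = kept
    return result


def deduplicate(datasets):
    """Run both steps: within each dataset first, then across datasets."""
    within = {name: dedup_within(docs) for name, docs in datasets.items()}
    return dedup_across(within)


sources = {
    "source_a": ["Hello world", "hello   WORLD", "Unique A"],
    "source_b": ["Unique A", "Unique B"],
}
print(deduplicate(sources))
```

With this ordering, when two source datasets share a document, the copy from whichever dataset is processed first is the one that is kept.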

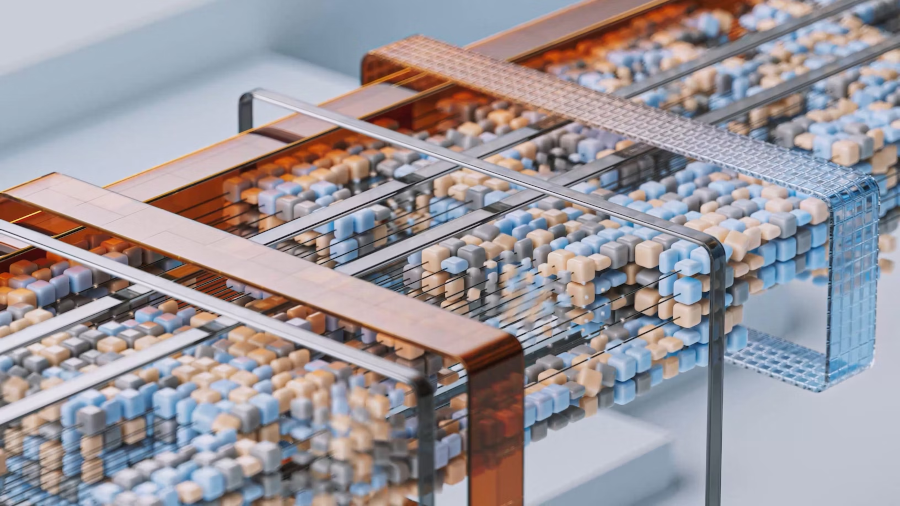

The company tested the dataset’s quality by using it to train an internally developed language model called Zamba. Debuted in April, the model contains seven billion parameters. It was trained on an early version of the Zyda dataset using 128 of Nvidia Corp.’s H100 graphics cards.

Zamba is not based on the Transformer language model architecture that powers the vast majority of LLMs. Instead, it uses an architecture called Mamba, which was introduced in late 2023, six years after Google LLC researchers invented Transformers. Mamba has a simpler, less computationally demanding design that allows it to complete some tasks faster.
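Much of the efficiency difference comes from how each design processes a sequence. Attention compares every token with every other token, so its cost grows quadratically with sequence length, while a state-space model such as Mamba carries a fixed-size state forward one step at a time. The toy single-channel recurrence below illustrates only that idea; its parameters (`w_a`, `b`, `c`) are hypothetical, and the real Mamba uses multi-dimensional learned states and a hardware-aware parallel scan.

```python
import math


def toy_ssm_scan(xs, w_a=1.0, b=0.5, c=2.0):
    """Toy single-channel state-space scan with hypothetical parameters.

    h_t = a_t * h_{t-1} + b * x_t,   y_t = c * h_t
    The decay a_t = exp(-softplus(w_a * x_t)) depends on the input, loosely
    mimicking Mamba's input-dependent ("selective") parameters. Each step does
    a constant amount of work, so one pass is linear in sequence length.
    """
    h = 0.0
    ys = []
    for x in xs:
        softplus = math.log1p(math.exp(w_a * x))
        a = math.exp(-softplus)  # lies in (0, 1): how much past state is kept
        h = a * h + b * x
        ys.append(c * h)
    return ys


print(toy_ssm_scan([1.0, 0.0, 0.0]))
```

Because the state `h` is a single number regardless of how many inputs came before, memory use stays constant as the sequence grows.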

The model combines Mamba with an attention layer. That’s a mechanism that helps AI models prioritize the information they ingest. An attention layer allows a neural network to analyze a collection of data points, isolate the details that are most relevant to the task at hand and use them to make a decision.
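The sketch below shows standard scaled dot-product attention, the formulation introduced with Transformers, for a single query in plain Python. It is a generic illustration rather than Zamba's implementation: the query is scored against each key, the scores become weights that sum to 1, and the output is the weighted average of the values, so the most relevant inputs dominate the result.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Single-query scaled dot-product attention.

    Returns the weighted average of `values` and the attention weights.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights


query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
output, weights = attention(query, keys, values)
print(weights)  # the first key matches the query, so it gets the larger weight
print(output)
```

Dividing by the square root of the vector size keeps scores from growing with dimensionality, which would otherwise push the softmax toward putting almost all weight on one input.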

Zyphra evaluated its Zyda dataset’s quality by comparing Zamba with models built using other open-source datasets. The company says that Zamba bested Meta Platforms Inc.’s comparably sized Llama 2 7B even though the latter model was trained on twice as many tokens’ worth of data. It also outperformed several other open-source language models.

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.