BIG DATA

Data science and artificial intelligence are experiencing a seismic shift with a growing emphasis on interoperability in data management, as cutting-edge technologies such as generative AI and advanced data governance tools take center stage.

Organizations are now better equipped than ever to democratize AI, making sophisticated data tools accessible to a wider audience while ensuring data security and integrity. This transformative wave is poised to redefine how data is managed, analyzed and utilized across various industries, marking a new era of innovation and efficiency in the AI landscape.

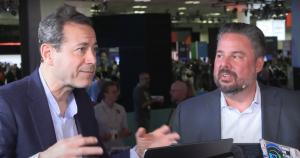

TheCUBE’s George Gilbert and Rob Strechay discuss interoperability in data management.

“This is the story of Databricks evolving with the marketplace,” said John Furrier (pictured, right), executive analyst at theCUBE Research. “It’s a growth markup, huge demand for generative AI. They have the data lake; it’s a natural progression from them, and they’re adding all the puzzle pieces.”

Furrier spoke with fellow analysts Savannah Peterson (second from right), Rob Strechay (second from left) and George Gilbert (left) for the day one keynote analysis at the Data + AI Summit, during an exclusive broadcast on theCUBE, SiliconANGLE Media’s livestreaming studio. They discussed interoperability in data management and how Databricks is well-positioned to lead the industry into a new era of AI-driven data management and analysis.

Recent advancements highlight Databricks’ role in the transformation of data management. From its early days with Apache Spark to its current focus on generative AI, Databricks has consistently adapted to meet the market’s needs. The company’s latest offerings, particularly in the realm of gen AI, demonstrate its ability to pivot effectively, according to Gilbert.

“What they’ve done astonishingly well is pivot their tool set so that they can harness the skills of the existing personas they were with,” he said. “They can harness all the energy of gen AI and essentially uplevel the data scientists to make them relevant in the era of gen AI.”

Databricks has successfully expanded its toolkit to support a wide range of personas, from data engineers to business intelligence professionals. The introduction of the Mosaic AI tools, which seamlessly integrate into the existing Databricks environment, exemplifies this approach, according to Strechay. These tools facilitate the creation of embeddings and model pipelines directly within the data scientists’ notebooks, enhancing productivity and efficiency.

“I think that when you start to look at where they’re going, they’re trying to expand on where they’ve been really strong, which is the AI/ML data science crew and really move into that persona much more,” Strechay said. “They talked about 7,000 companies using the data lake and using SQL.”

In the competitive landscape, particularly against other data platforms, such as Snowflake Inc., Databricks has managed to leapfrog traditional database management systems by focusing on data governance and open data standards. The introduction of the Unity Catalog, aiming to create a unified source of truth for data assets, is a significant development, according to Gilbert.

“One critical pivot point that started last year when [Matei Zaharia], the creator of Spark, got up and gave the keynote last year and he introduced Unity,” he said. “In that moment, the Earth shifted on its axis a little bit, because that was a point where the source of control and the source of truth went from being managed by the database to being managed by the governance engine.”

Databricks’ open-source contributions, including the decision to open-source the Unity Catalog, are pivotal in building a strong ecosystem. By promoting open data formats and interoperability, Databricks enhances its platform while setting a standard that could benefit the entire industry, Furrier explained.

“The open data model is coming fast, and I think that’s going to be a standard,” he said. “If this standard comes in, it might have the same impact that TCP/IP had with networking, where you have a unifying moment of data, where that will change the game. If that happens faster, Databricks, full win, and they’re making the long bet there.”
