AI

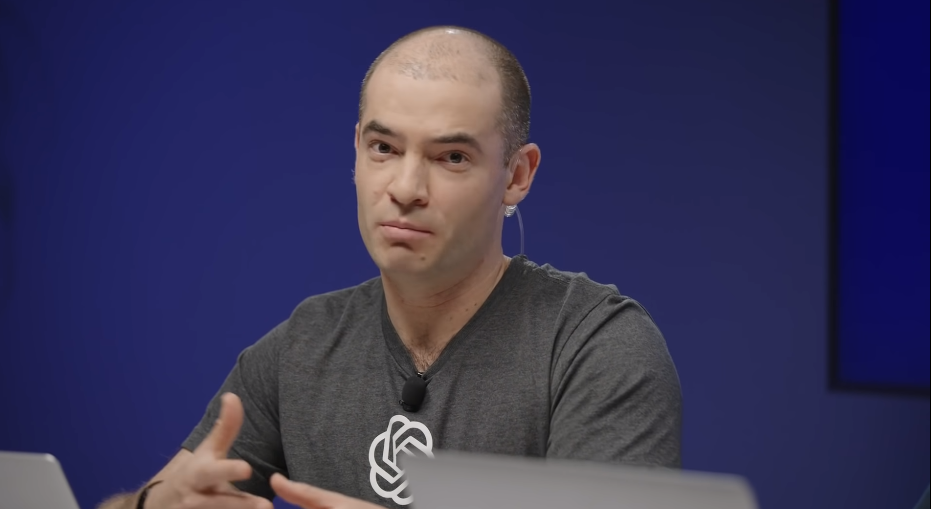

OpenAI co-founder Ilya Sutskever today announced the launch of SSI, a new startup dedicated to building advanced artificial intelligence models.

The development comes a few months after Sutskever left his role as OpenAI’s chief scientist. He had earlier stepped down from the ChatGPT developer’s board. Sutskever’s departure is believed to be connected to his role in the brief ouster of OpenAI Chief Executive Officer Sam Altman last November.

SSI has offices in Palo Alto and Tel Aviv. The company is incorporated as Safe Superintelligence Inc., a nod to its goal of prioritizing AI safety in its development efforts. “We approach safety and capabilities in tandem,” reads a brief announcement on SSI’s one-page website. “We plan to advance capabilities as fast as possible while making sure our safety always remains ahead.”

Sutskever co-founded OpenAI in 2015 after helping to develop AlexNet, a groundbreaking computer vision model, at the University of Toronto. AlexNet demonstrated that AI models running on graphics processing units can be used for practical tasks. The algorithm is credited with inspiring a significant amount of subsequent research into deep learning.

Sutskever spent the three years that preceded the launch of OpenAI at Google LLC, where he made contributions to TensorFlow. He also worked on Seq2seq, a family of sequence-to-sequence language models that preceded the Transformer architecture and shares certain features with the latter technology.

Sutskever established SSI with former OpenAI researcher Daniel Levy and Daniel Gross, a onetime Y Combinator partner who at one point led Apple Inc.’s AI efforts. In the announcement posted on SSI’s website today, the three founders wrote that the company’s “team, investors, and business model are all aligned to achieve SSI.” This hints that the company has secured venture funding.

Sutskever told Bloomberg that SSI “will not do anything else” besides its effort to develop a safe superintelligence. That indicates the company likely won’t seek to commercialize its research in the near future.

In today’s launch announcement, SSI’s three founders didn’t specify what approaches the company will take to ensure the safety of its AI models. Before leaving OpenAI, Sutskever co-led a group known as the Superalignment team that explored ways of mitigating the risks posed by advanced neural networks. The since-disbanded group’s research may provide an indication of how SSI plans to tackle AI safety.

In a paper published last December, the Superalignment team detailed an AI safety approach known as weak-to-strong generalization. It involves supervising an advanced neural network using a second, less advanced neural network: the stronger model is trained on labels produced by the weaker one. In a test carried out as part of the project, the researchers demonstrated how a model on the level of OpenAI’s early GPT-2 algorithm can be used to supervise the newer, significantly more capable GPT-4.
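The basic setup can be illustrated with a toy sketch. The Python example below is not the Superalignment team’s code or method. It is a simplified illustration under stated assumptions: the “weak supervisor” is a hypothetical labeler that is wrong on every fifth example, and the “strong student” is a simple threshold classifier that never sees the true labels and is fit only to the weak labels. All function names are invented for this illustration.

```python
# Toy illustration of the weak-to-strong setup: a student is trained only on
# labels from an imperfect supervisor, then scored against the ground truth.
# Every name here is hypothetical and exists only for this sketch.

def true_label(x):
    """Ground truth, which the student never sees during training."""
    return 1 if x > 0 else 0

def weak_supervisor(i, x):
    """Assumed weak labeler: flips the correct label on every fifth example."""
    y = true_label(x)
    return 1 - y if i % 5 == 0 else y

def fit_threshold(xs, labels):
    """Student: choose the cut point that disagrees least with the given labels."""
    ordered = sorted(xs)
    candidates = (
        [ordered[0] - 1.0]
        + [(a + b) / 2 for a, b in zip(ordered, ordered[1:])]
        + [ordered[-1] + 1.0]
    )

    def disagreements(t):
        return sum((1 if x > t else 0) != y for x, y in zip(xs, labels))

    return min(candidates, key=disagreements)

def accuracy(predictions, truth):
    return sum(p == t for p, t in zip(predictions, truth)) / len(truth)

# 100 evenly spaced inputs between -0.99 and 0.99.
xs = [(i - 49.5) / 50 for i in range(100)]
truth = [true_label(x) for x in xs]

# The student is trained on the weak labels only.
weak_labels = [weak_supervisor(i, x) for i, x in enumerate(xs)]
threshold = fit_threshold(xs, weak_labels)
student_predictions = [1 if x > threshold else 0 for x in xs]

weak_acc = accuracy(weak_labels, truth)
student_acc = accuracy(student_predictions, truth)

print(f"weak supervisor accuracy: {weak_acc:.2f}")  # prints 0.80
print(f"student accuracy:         {student_acc:.2f}")  # prints 0.99
```

In this sketch the student ends up more accurate than the supervisor that trained it, because the supervisor’s mistakes are scattered and a single threshold cannot reproduce them. Real experiments involve fine-tuning large pretrained models, where the gap between weak and strong performance is typically only partly recovered.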
