AI

OpenAI’s latest innovation is called CriticGPT, and it was built to identify bugs and errors in artificial intelligence models’ outputs, as part of an effort to make AI systems behave according to the wishes of their creators.

Traditionally, AI developers rely on a process known as Reinforcement Learning from Human Feedback, or RLHF, in which human reviewers assess the outputs of large language models to make them more accurate. However, OpenAI believes that LLMs can actually help with this process, which essentially involves critiquing AI models’ outputs.

In a research paper titled “LLM Critics Help Catch LLM Bugs,” OpenAI’s researchers said they built CriticGPT to help human reviewers assess code generated by ChatGPT. CriticGPT is based on the GPT-4 LLM, and it showed encouraging competency when analyzing code and identifying errors, enabling its human colleagues to spot AI “hallucinations” that they might not notice on their own.

OpenAI’s researchers say they trained CriticGPT on a dataset of code samples that was littered with intentional bugs, so it could learn how to recognize and flag the different coding errors that often creep into software.

For the training process, OpenAI explained, human developers were asked to modify code written by ChatGPT, introducing a range of errors and providing sample feedback, as they would do if the bugs were genuine and they had just come across them. This taught CriticGPT how to identify the most common, and also some not-so-common coding errors.
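To make the setup concrete, here is a minimal sketch of what one such training example might look like. The paper's actual data format is not described in this article, so the `TamperedExample` class, the `insert_bug` helper and the sample bug are all hypothetical and serve only as illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TamperedExample:
    """One hypothetical training record: code, its tampered version, and feedback."""
    original_code: str       # code as the model originally wrote it
    tampered_code: str       # the same code with a deliberately inserted bug
    reference_critique: str  # feedback written as if the bug were found in review


def insert_bug(original_code: str, correct: str, buggy: str, critique: str) -> TamperedExample:
    """Swap one snippet for a buggy variant and attach the reviewer's feedback."""
    # Require a unique match so the inserted bug is unambiguous.
    if original_code.count(correct) != 1:
        raise ValueError("snippet to tamper must appear exactly once")
    return TamperedExample(original_code, original_code.replace(correct, buggy), critique)


original = "def mean(xs):\n    return sum(xs) / len(xs)\n"
example = insert_bug(
    original,
    correct="len(xs)",
    buggy="(len(xs) - 1)",
    critique="The divisor should be len(xs); dividing by len(xs) - 1 skews the mean.",
)
```

A critic model trained on records like this would see the tampered code as input and learn to produce feedback resembling the reference critique.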

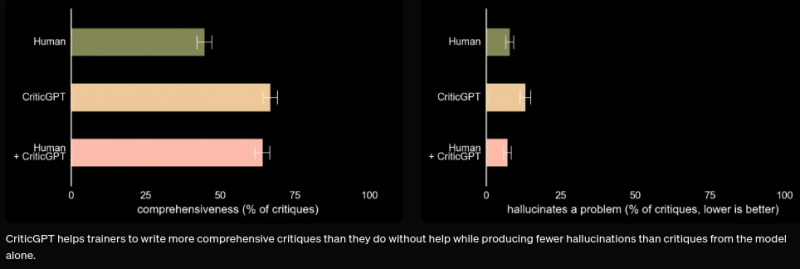

After training CriticGPT, OpenAI put it to the test and the results were impressive. CriticGPT demonstrated more competency than the average human code reviewer. Its critiques were preferred by human trainers to those written by humans in 63% of cases. According to OpenAI, this was partly because CriticGPT generated fewer unhelpful “nitpicks” about the code and fewer false positives.

To further its research, OpenAI’s team created a new technique it dubbed “Force Sampling Beam Search,” which enabled CriticGPT to write more detailed critiques of the AI-generated code. It also provides more flexibility, as the human teachers can adjust how thorough CriticGPT is when looking for bugs, while exerting better control over its occasional tendency to hallucinate, or highlight “errors” that don’t actually exist.
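The thoroughness trade-off can be illustrated with a minimal sketch. It assumes, for illustration only, that candidate critiques are ranked by a quality score plus a tunable bonus for each issue they flag. It covers only this final selection step, not the sampling or search itself, and the `Candidate` class, the `pick_critique` function, the `length_modifier` parameter and all scores are hypothetical rather than OpenAI's implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    text: str
    reward: float        # hypothetical quality score for the critique
    num_highlights: int  # number of distinct issues the critique flags


def pick_critique(candidates: list[Candidate], length_modifier: float) -> Candidate:
    """Pick the candidate with the best score after a per-issue bonus.

    A larger length_modifier favors critiques that flag more issues
    (more thorough, but more likely to include false alarms).
    """
    return max(candidates, key=lambda c: c.reward + length_modifier * c.num_highlights)


candidates = [
    Candidate("Flags one clear bug.", reward=0.90, num_highlights=1),
    Candidate("Flags three issues, one of them speculative.", reward=0.70, num_highlights=3),
]

precise = pick_critique(candidates, length_modifier=0.0)    # picks the one-bug critique
thorough = pick_critique(candidates, length_modifier=0.25)  # picks the three-issue critique
```

With the bonus at zero the shorter, safer critique wins; raising it tips the choice toward the longer critique, which is the kind of dial the article describes.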

CriticGPT’s thoroughness allowed it to significantly outperform humans. The researchers decided to apply CriticGPT to ChatGPT training datasets that had been marked as “flawless” by human annotators, meaning they should not have contained even one bug. However, CriticGPT still identified bugs and errors in 24% of those datasets, findings that human reviewers later confirmed.

According to OpenAI, this shows that CriticGPT can identify subtle mistakes that humans generally miss, even when conducting an exhaustive evaluation.

However, it’s worth pointing out that CriticGPT, like those supposedly flawless training datasets, still has some caveats. For one thing, it was trained using relatively short responses from ChatGPT, so it may struggle to evaluate the much longer and more complex tasks that represent the next evolutionary step for generative AI. Moreover, CriticGPT still cannot surface every error, and it still hallucinates in some cases, creating false positives that might result in human annotators making mistakes when labeling data.

One of the challenges that CriticGPT needs to overcome is that it’s most effective at spotting inaccurate outputs that result from an error in one specific piece of code. But some AI hallucinations are the result of errors spread across multiple parts of the code, which makes it much more difficult for CriticGPT to identify the source of the problem.

Holger Mueller of Constellation Research Inc. said CriticGPT is the latest example of a trend in which generative AI is increasingly being explored for its potential to improve other generative AI systems. “CriticGPT is all about helping AI engineers to make generative AI perform better,” he said. “It helps testers test ChatGPT via RLHF tasks, which is a key step in improving AI models. But even then, CriticGPT still requires human supervision, so it’s not clear if it will really be able to increase AI tester’s velocity or not.”

Still, OpenAI is encouraged by the progress so far and plans to integrate CriticGPT into its RLHF pipeline, meaning its human trainers will get their own generative AI assistant to help them review generative AI outputs.
