AI

IBM Corp. today announced plans to make Intel Corp.’s Gaudi 3 artificial intelligence processor available in its public cloud platform.

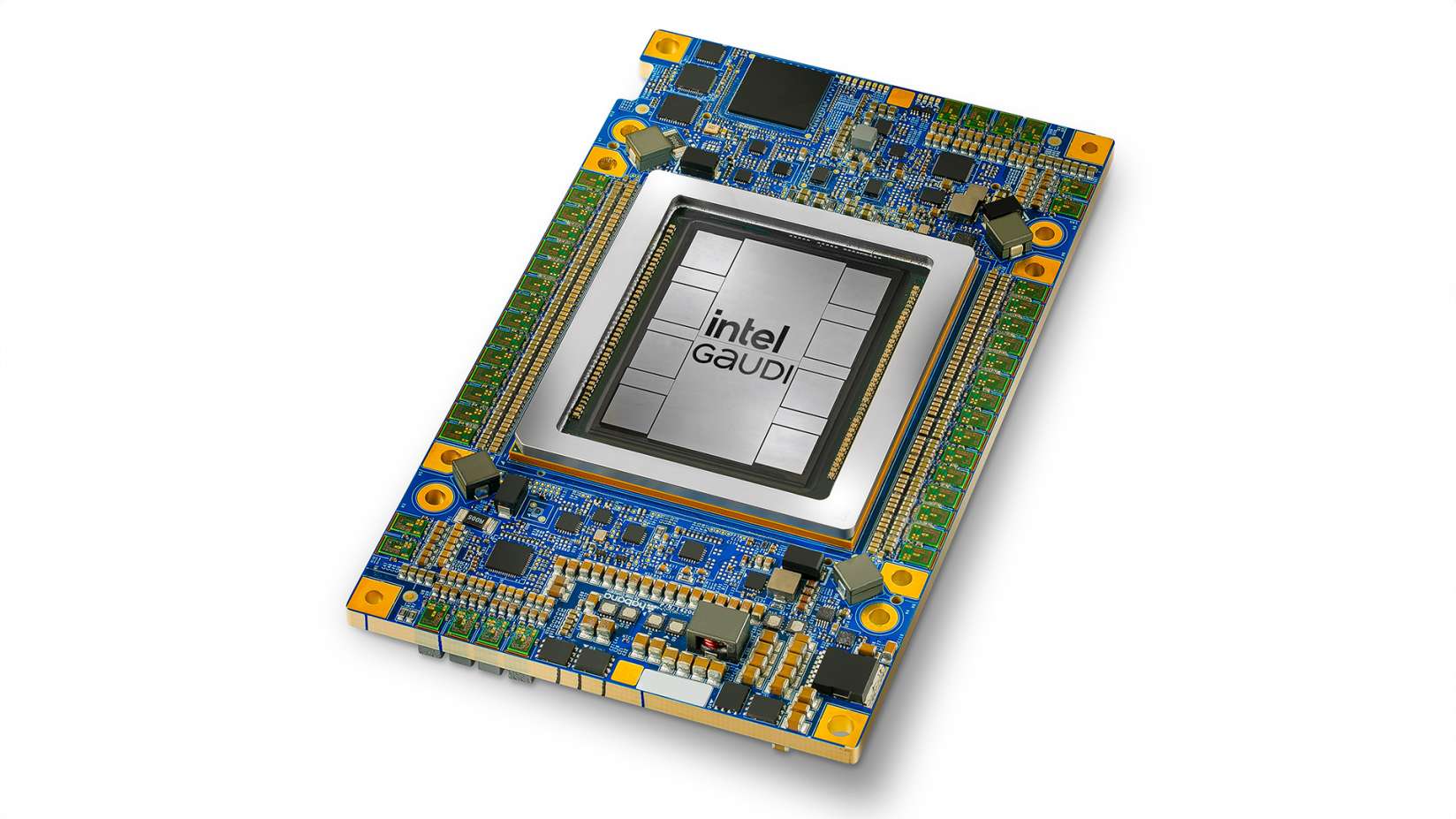

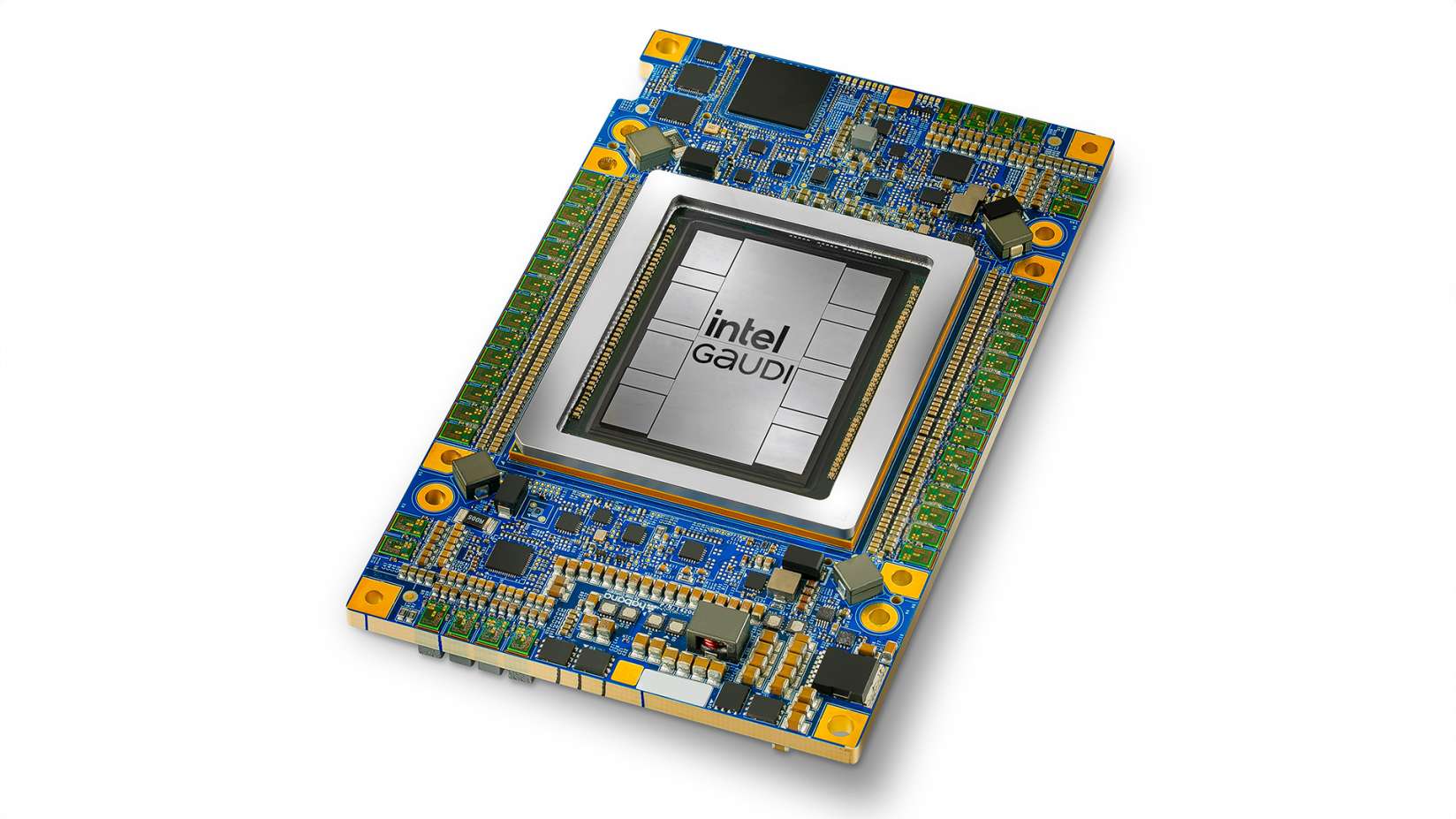

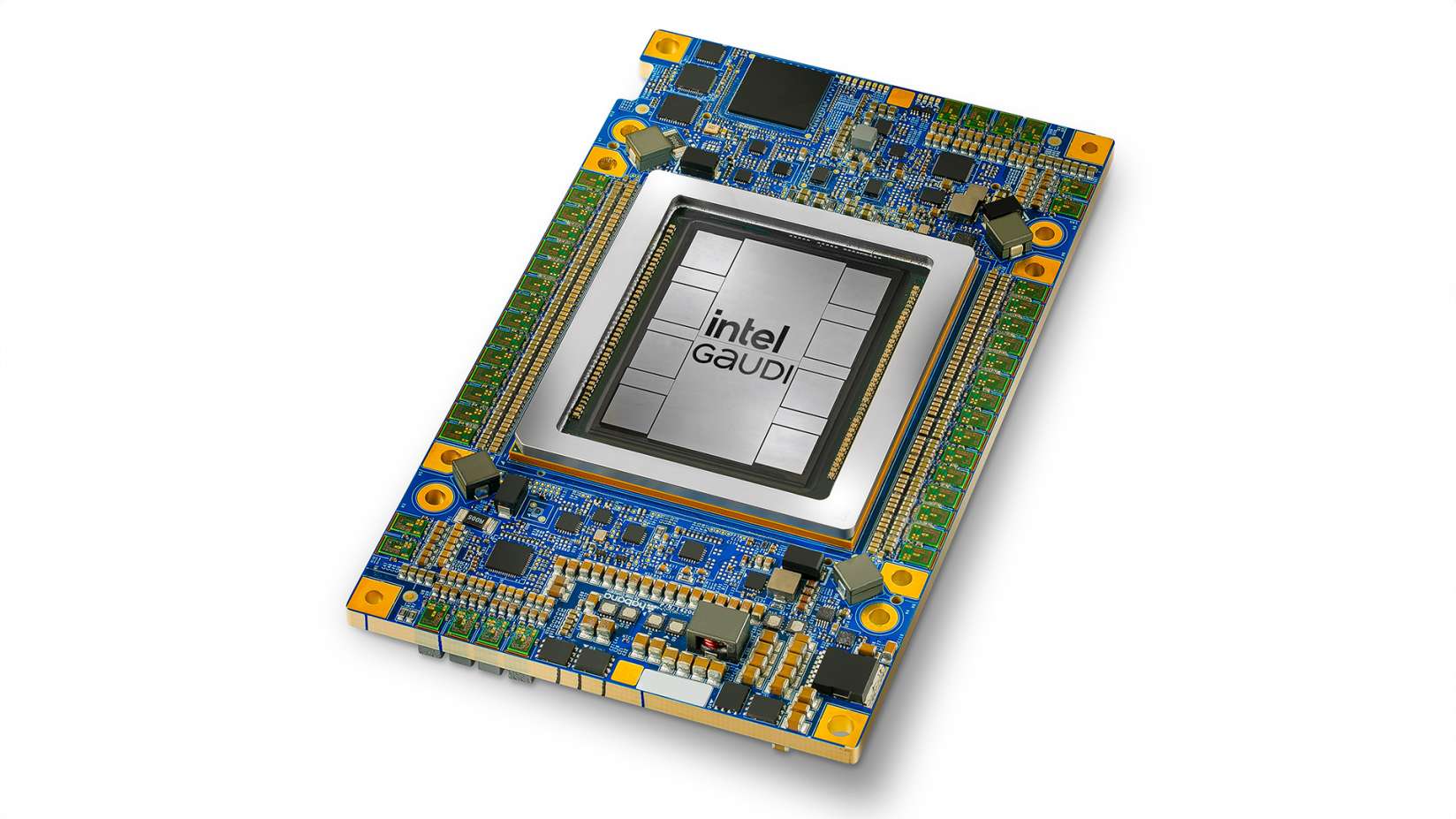

Gaudi 3 is positioned as an alternative to Nvidia Corp.’s bestselling H100 graphics processing unit. The H100 was the chipmaker’s flagship AI accelerator before the March debut of its newest GPU, the Blackwell B200, which is significantly faster but not due out until later this year. Intel says Gaudi 3 can perform inference with up to 2.3 times the power efficiency of the H100 and can train some large language models in less time.

Gaudi 3 is the third iteration of an AI processor series that Intel obtained through its 2019 acquisition of startup Habana Labs. The chip is based on Taiwan Semiconductor Manufacturing Co. Ltd.’s five-nanometer node. Gaudi 3’s processing power is provided by two sets of onboard computing modules, dubbed MMEs and TPCs, that are each optimized for a different set of tasks.

The chip’s MME modules are designed to perform matrix multiplications. Those are mathematical calculations carried out on collections of numbers organized in rows and columns, much like the cells of a spreadsheet. Artificial intelligence models use such calculations to turn input data into decisions.
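To make the idea concrete, here is a minimal sketch of a matrix multiplication in plain Python. It only illustrates the arithmetic itself, not how Gaudi 3's MMEs implement it in hardware, and the function name `matmul` is a hypothetical helper chosen for this example.

```python
def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both given as lists of rows."""
    if not a or not b or len(a[0]) != len(b):
        raise ValueError("inner dimensions must match")
    k = len(b)
    m = len(b[0])
    # Each output cell is the sum of products of one row of a and one column of b.
    return [
        [sum(row[i] * b[i][j] for i in range(k)) for j in range(m)]
        for row in a
    ]


inputs = [[1, 2], [3, 4]]    # two rows of input values
weights = [[5, 6], [7, 8]]   # two rows of model weights
print(matmul(inputs, weights))
```

Every output cell can be computed independently of the others, which is why this kind of workload lends itself to dedicated hardware.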

Certain AI models, such as those used for object recognition tasks, carry out most of their processing with matrix multiplications. More advanced forms of AI such as large language models also use other types of calculations. Gaudi 3’s TPC modules, the second type of computing circuit the chip includes, are optimized for those other calculations.

TPCs are based on a so-called very long instruction word architecture. That’s a type of chip design optimized to carry out multiple computations in parallel. Because performing calculations side-by-side is faster than completing them one after another, Gaudi 3’s TPCs help speed up AI models’ performance.
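The following toy sketch shows the general principle behind very long instruction word designs: operations that do not depend on one another are packed into a single wide instruction so they can run side by side. It is an illustration under simplifying assumptions, not Intel's compiler or the TPC instruction set. The function `bundle`, the operation names and the word width of four are all hypothetical.

```python
def bundle(ops, width):
    """Greedily pack operations into instruction words of at most `width` slots.

    ops is a list of (name, dependencies) pairs in program order, where
    dependencies is a set of names that must finish in an earlier word.
    """
    done = set()
    remaining = list(ops)
    words = []
    while remaining:
        word = []
        for name, deps in remaining:
            # An op joins the current word only if all of its inputs
            # were produced by words that have already been issued.
            if len(word) < width and deps <= done:
                word.append(name)
        if not word:
            raise ValueError("unsatisfiable dependencies")
        done.update(word)
        remaining = [(n, d) for n, d in remaining if n not in done]
        words.append(word)
    return words


program = [
    ("load_a", set()),
    ("load_b", set()),
    ("mul", {"load_a", "load_b"}),
    ("load_c", set()),
    ("add", {"mul", "load_c"}),
]
print(bundle(program, width=4))
```

In this example, five operations fit into three instruction words because the three loads are independent and can share one word. Real VLIW hardware also restricts which kind of operation each slot accepts, which this sketch ignores.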

Gaudi 3 includes 64 TPCs, nearly three times as many as its predecessor. There are also four times as many MMEs, the compute modules optimized for matrix multiplications. The chip’s logic circuits are supported by a 128-gigabyte memory pool that offers higher clock speeds than the RAM in Intel’s previous-generation AI processor.

The company says the upgrades introduced in Gaudi 3 boost its top speed to 1,835 teraflops, or 1,835 trillion floating-point operations per second, when it processes BF16 data. BF16 is a 16-bit floating-point format commonly used by AI models to store information.
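For readers curious about what BF16 looks like in practice, the sketch below converts a number to BF16 by keeping the top 16 bits of its 32-bit floating-point encoding: the sign bit, the 8 exponent bits and the first 7 fraction bits. It assumes simple truncation, whereas hardware typically rounds to the nearest value, and the helper names `to_bf16_bits` and `from_bf16_bits` are hypothetical.

```python
import struct


def to_bf16_bits(x):
    """Return the 16-bit BF16 pattern for x, truncating the float32 encoding."""
    (bits32,) = struct.unpack(">I", struct.pack(">f", x))
    # Drop the low 16 fraction bits; sign and exponent are preserved.
    return bits32 >> 16


def from_bf16_bits(bits16):
    """Expand a 16-bit BF16 pattern back into a Python float."""
    (value,) = struct.unpack(">f", struct.pack(">I", bits16 << 16))
    return value


print(hex(to_bf16_bits(1.0)))                  # 0x3f80
print(from_bf16_bits(to_bf16_bits(3.14159)))   # 3.140625
```

The second line of output shows the trade-off: BF16 keeps the same range as a 32-bit float but only about two to three decimal digits of precision, which many AI workloads tolerate in exchange for halving memory use.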

Gaudi 3’s increased performance is not its only selling point. There’s also an onboard Ethernet module that can link together the Gaudi 3 processors in an AI server and connect several such servers to one another. Intel has doubled the bandwidth of the individual Ethernet networking links in the chip to 200 gigabits per second.

IBM plans to make the Gaudi 3 available early next year in IBM Cloud Virtual Servers for VPC. Those are the compute instances the company provides in its public cloud platform. IBM will also add support for Gaudi 3 to its watsonx product suite, which includes software tools that companies can use to build AI models, deploy them in production and perform related tasks.
