AI

OpenAI today launched a new large language model series, o1, that can decode scrambled text, answer science questions with better accuracy than PhD holders and perform other complex tasks.

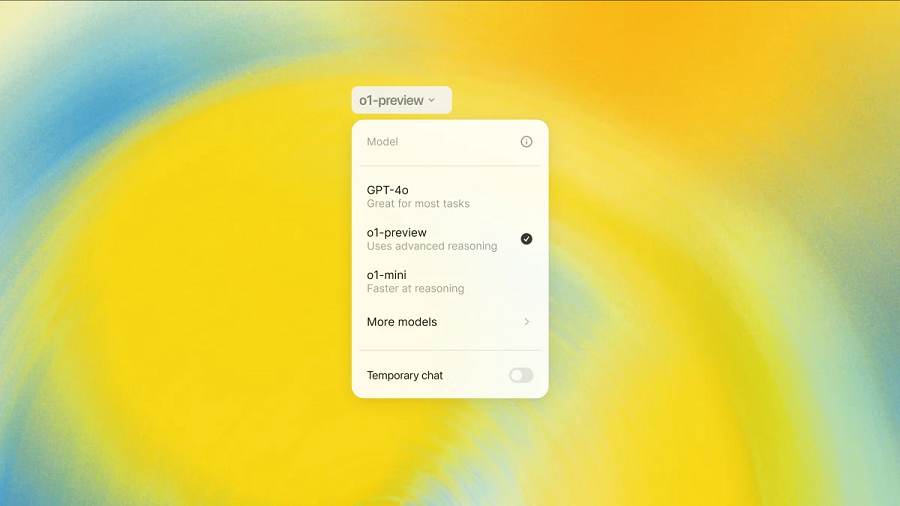

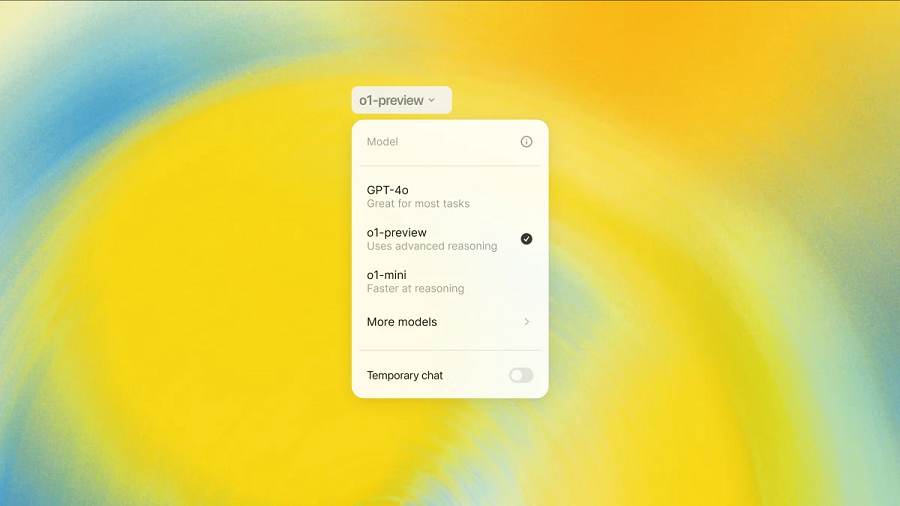

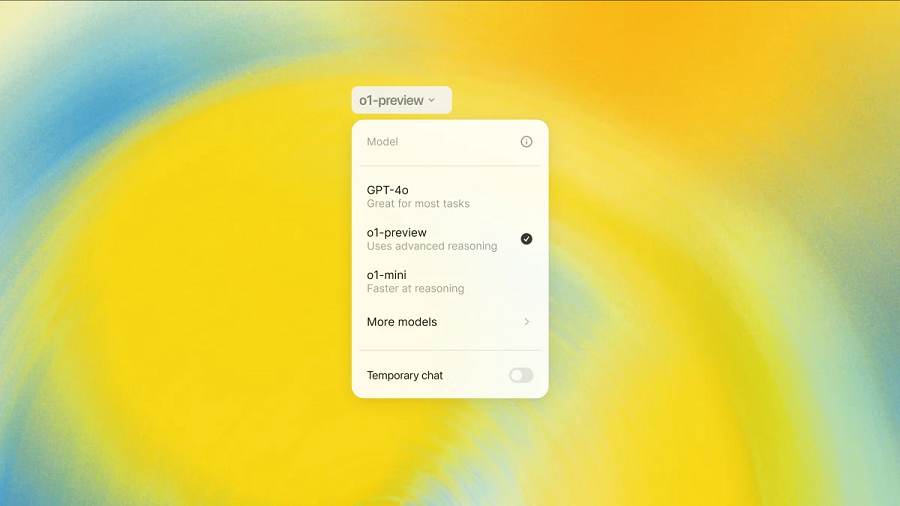

The LLM series, better known by its code name Strawberry, comprises two models at launch: o1-preview and o1-mini. The former is the more capable of the two, while the latter trades off some response quality for better cost-efficiency. Both models became available today in the paid versions of OpenAI’s ChatGPT chatbot service.

OpenAI says that the o1 series is not a drop-in replacement for the GPT-4o model it debuted in May. The new LLMs currently lack several of the features offered by that model, notably the ability to analyze files uploaded by the user. There are also no integrations that would allow o1 to interact with external applications.

On the other hand, the new LLM series is significantly better at tasks that require reasoning skills.

In one internal test, OpenAI engineers had o1-preview complete a qualifying exam for the U.S. Math Olympiad. The model’s average scores ranged from 74% to 93%, a significant improvement over the 12% achieved by GPT-4o. OpenAI says that o1-preview’s best average score put it among the top 500 test takers in the U.S.

In another evaluation, the ChatGPT developer had o1-preview tackle the GPQA Diamond benchmark, a collection of complex science questions. The model achieved a higher score across a set of physics, biology, and chemistry questions than a group of experts with doctorates.

The company says one of the contributors to o1’s reasoning prowess is its use of a machine learning approach known as CoT, or chain of thought. The technique allows LLMs to break down a complex task into smaller steps and carry out those steps one by one. In many cases, tackling complex prompts this way can help an LLM improve the accuracy of its responses.

OpenAI refined o1’s CoT mechanism using reinforcement learning. This is a machine learning technique that helps LLMs improve their output quality over time through a trial-and-error training process. In most reinforcement learning projects, a model is given a set of training tasks and receives positive feedback whenever it solves one of them correctly, which helps it become more accurate.
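The trial-and-error idea can be illustrated with a toy example. The sketch below is not OpenAI's training method, which the company has not detailed here. It is a minimal epsilon-greedy loop in which a learner picks among a few hypothetical problem-solving strategies, receives a reward of 1 for a correct answer, and gradually favors the strategy that succeeds most often. The function name `train_bandit` and the success probabilities are assumptions made for illustration.

```python
import random


def train_bandit(success_prob, episodes=5000, epsilon=0.1, seed=0):
    """Toy trial-and-error loop with positive feedback for correct answers.

    success_prob[i] is the (hypothetical) chance that strategy i solves a task.
    Returns the learned average reward and the pick count for each strategy.
    """
    rng = random.Random(seed)
    counts = [0] * len(success_prob)
    values = [0.0] * len(success_prob)  # running average reward per strategy

    for _ in range(episodes):
        if rng.random() < epsilon:
            # Explore: try a random strategy.
            choice = rng.randrange(len(success_prob))
        else:
            # Exploit: use the strategy with the best average reward so far.
            choice = max(range(len(values)), key=values.__getitem__)

        # Positive feedback (reward 1) only when the task is solved correctly.
        reward = 1.0 if rng.random() < success_prob[choice] else 0.0

        counts[choice] += 1
        values[choice] += (reward - values[choice]) / counts[choice]

    return values, counts


values, counts = train_bandit([0.2, 0.5, 0.9])
best = max(range(len(values)), key=values.__getitem__)
print("preferred strategy:", best, "picked", counts[best], "times")
```

Over many episodes the learner spends most of its attempts on the strategy that is rewarded most often, which is the sense in which feedback makes it more accurate.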

One of the tasks to which o1’s CoT-powered reasoning features can be applied is decoding scrambled text. During an internal test, OpenAI had o1-preview decipher a scrambled version of the sentence “There are three R’s in Strawberry.” The model successfully completed the task by following a line of reasoning that comprised dozens of steps and required it to change tactics multiple times.
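To give a sense of what such a decoding task involves, here is a minimal sketch. The scrambling scheme OpenAI used is not described above, so the code assumes, purely for illustration, a pair-averaging cipher: every plaintext letter is replaced by two letters whose alphabet positions average to the original. The function name `decode_pairs` and the sample ciphertext are part of that assumption.

```python
def decode_pairs(ciphertext):
    """Decode an assumed pair-averaging cipher (lowercase a-z only).

    Each pair of ciphertext letters maps to the letter whose alphabet
    position is the average of the pair's positions (a=1, ..., z=26).
    """
    decoded_words = []
    for word in ciphertext.split():
        letters = []
        # Step through the word two letters at a time.
        for i in range(0, len(word) - 1, 2):
            first = ord(word[i]) - ord("a") + 1
            second = ord(word[i + 1]) - ord("a") + 1
            average = (first + second) // 2
            letters.append(chr(average + ord("a") - 1))
        decoded_words.append("".join(letters))
    return " ".join(decoded_words)


print(decode_pairs("oyfjdnisdr rtqwainr acxz mynzbhhx"))  # prints "think step by step"
```

Once the rule is known, decoding is mechanical. The hard part for a model is discovering the rule, which is where a long chain of reasoning with several changes of tactic comes in.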

OpenAI says o1’s CoT features also make it safer than earlier models. “We conducted a suite of safety tests and red-teaming before deployment,” the company’s researchers detailed in a blog post today. “We found that chain-of-thought reasoning contributed to capability improvements across our evaluations.”

The o1 series is available not only in ChatGPT but also through OpenAI’s application programming interface, which allows developers to integrate its LLMs into their software. The scaled-down o1-mini model trades some of o1-preview’s accuracy for 80% lower inference pricing. OpenAI says that o1-mini has a smaller knowledge base but is “particularly effective at coding.”
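For developers estimating costs, the 80% figure translates into simple arithmetic. The sketch below uses a hypothetical list price, since actual per-token prices are not given here, and the helper name `discounted_price` is likewise an assumption.

```python
from decimal import Decimal


def discounted_price(base_price, discount_pct):
    """Return the price left after applying a percentage discount."""
    return base_price * (Decimal(100) - discount_pct) / Decimal(100)


# Hypothetical price per million tokens for the larger model (not a real figure).
preview_price = Decimal("10.00")

# An 80% reduction leaves one fifth of the original price.
mini_price = discounted_price(preview_price, Decimal(80))
print("o1-mini price under this assumption:", mini_price)
```

`Decimal` is used instead of floating point so that currency values are not affected by binary rounding.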

Down the line, the company plans to make o1-mini available in the free version of ChatGPT. It also intends to raise the usage limits on o1 in the paid versions of the chatbot service. At launch, customers can send 30 prompts a week to o1-preview and 50 to o1-mini.
