INFRA

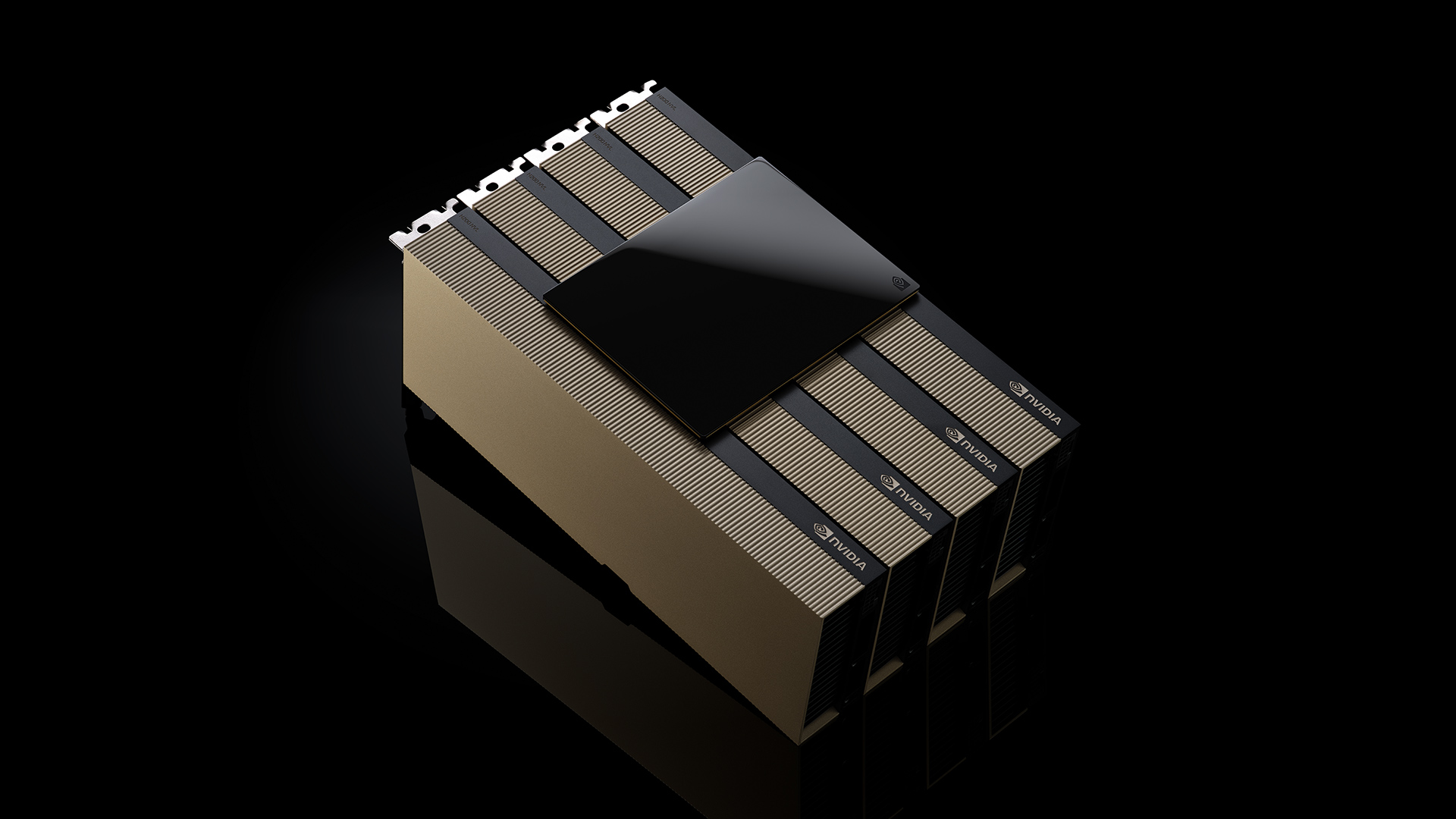

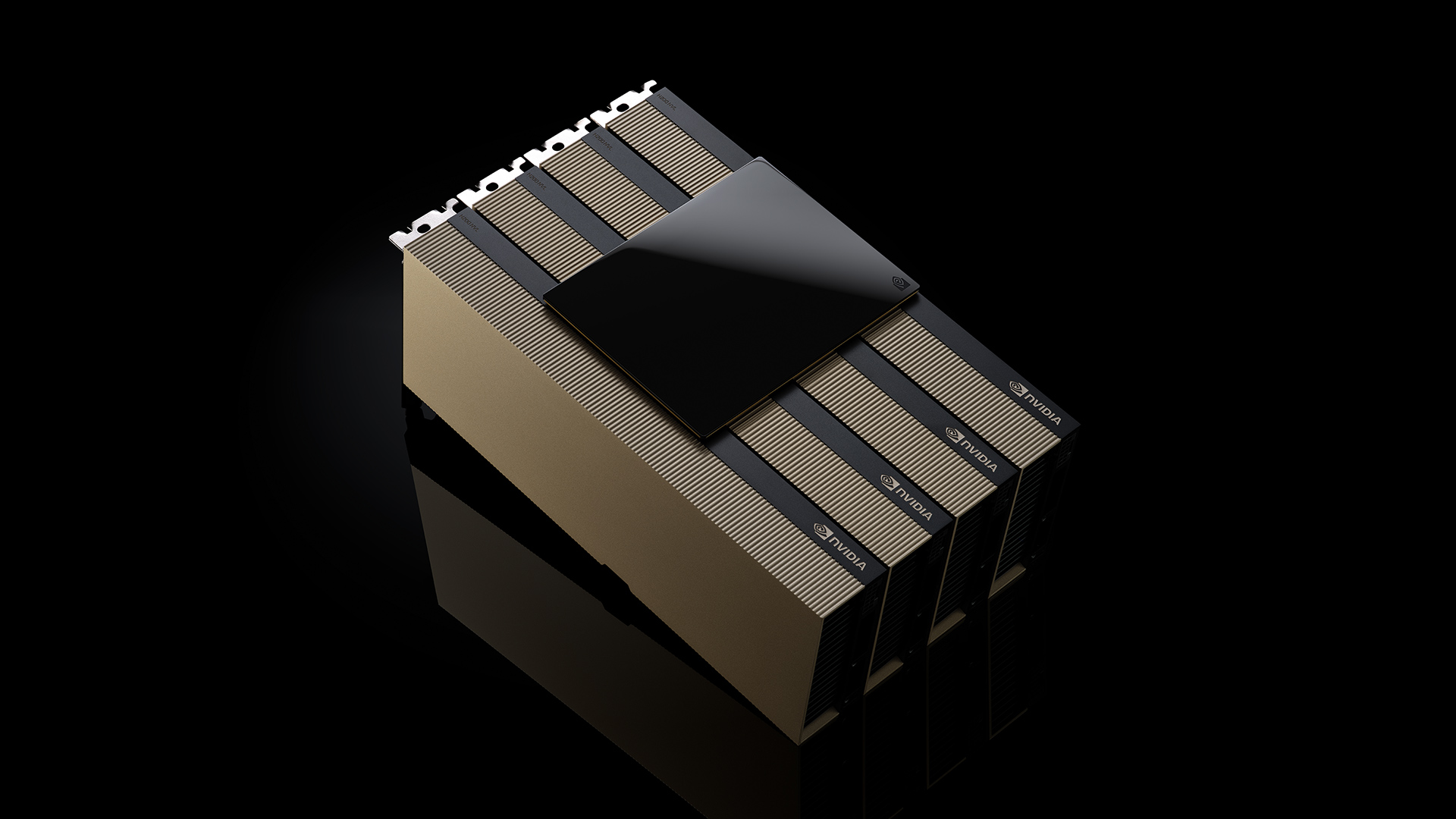

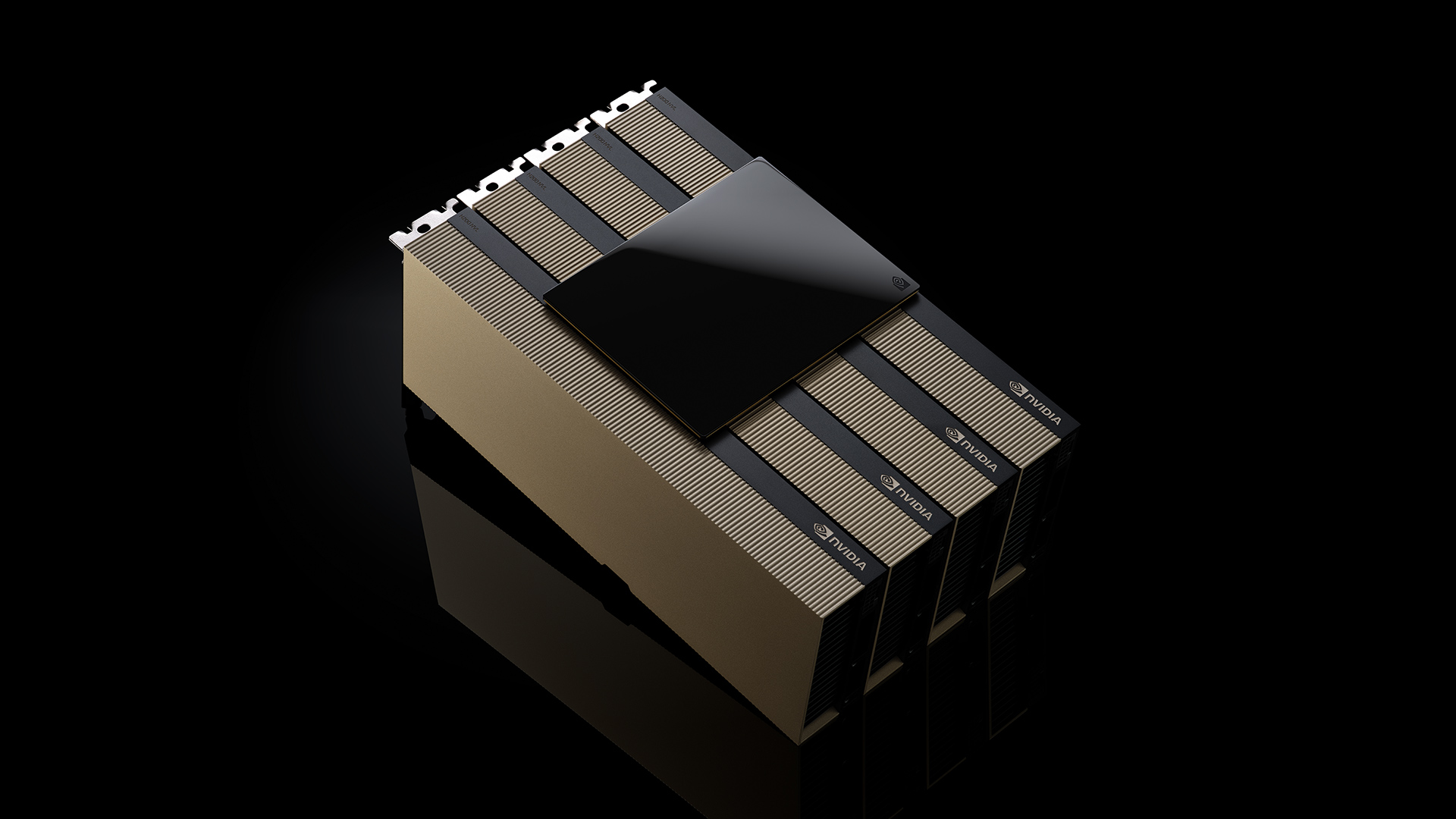

Nvidia Corp. today announced the availability of its newest data center-grade graphics processing unit, the H200 NVL, to power artificial intelligence and high-performance computing.

The company announced its new PCIe form factor card during the SC24 high-performance computing conference in Atlanta alongside a bevy of updates to enable high-performance AI for scientific research and engineering.

The H200 NVL is the latest addition to Nvidia’s Hopper family of GPUs designed for data centers running on lower-power, air-cooled racks. According to the company, about 70% of enterprise racks run at 20 kilowatts and below, which allows for air cooling. This makes PCIe GPUs essential for flexibility, since they don’t require specialized cooling systems.

The new GPU provides a 1.5-times memory increase and a 1.2-times bandwidth increase over the H100 NVL, the company said. Businesses can use it to fine-tune large language models within a few hours, with up to 1.7-times faster inference performance.

The University of New Mexico said it has tapped Nvidia’s accelerated computing technology for scientific research and academic applications.

“As a public research university, our commitment to AI enables the university to be on the forefront of scientific and technological advancements,” said Prof. Patrick Bridges, director of the UNM Center for Advanced Research Computing. “As we shift to H200 NVL, we’ll be able to accelerate a variety of applications, including data science initiatives, bioinformatics and genomics research, physics and astronomy simulations, climate modeling and more.”

To help build on and accelerate the use of AI in scientific research, Nvidia announced the release of the open-source Nvidia BioNeMo framework to assist with drug discovery and molecule design. The platform provides accelerated computing tools to scale up AI models for biomolecular research, bringing supercomputing to biopharma engineering.

“The convergence of AI, accelerated computing and expanding datasets offers unprecedented opportunities for the pharmaceutical industry, as evidenced by recent Nobel Prize wins in chemistry,” said Kimberly Powell, vice president of healthcare at Nvidia.

AI has quickly emerged as a potent discovery mechanism for new chemicals and materials. To explore potential materials within the nearly infinite combinations, Nvidia announced ALCHEMI, which allows researchers to optimize AI models via a NIM microservice. These microservices enable users to deploy prebuilt foundation models in the cloud, data center or workstations.

Using ALCHEMI, researchers can test chemical compounds and materials in a simulated virtual AI lab without the need to spend extra time trying and failing to build structures in an actual lab. According to Nvidia, running a pretrained materials chemistry model on an H100 with the new microservice tests for long-term stability 100 times faster than running it without the NIM. For example, evaluating 16 million structures could take months; with the microservice, it would take mere hours.

Extreme weather also presents a problem for scientists, which is why Nvidia announced two new NIM microservices for Earth-2, a platform used for simulating and visualizing weather and climate conditions. With this technology, climate researchers can use AI assistance to forecast extreme weather events – from local to global occurrences.

According to a report from Bloomberg, natural disasters have been responsible for around $62 billion in insured losses during the first half of 2024, or about 70% more than the 10-year average. This included increasing wildfires and severe thunderstorms across the United States with even more costly storms and torrential flooding in Europe.

Nvidia is releasing CorrDiff NIM and FourCastNet NIM, which will help weather technology companies build high-resolution and accurate predictions.

CorrDiff uses generative AI at kilometer-scale resolution for weather forecasting. Nvidia used the model’s capability to gain better-localized predictions and analysis of typhoons over Taiwan earlier this year. CorrDiff was trained on numerical simulations from the Weather Research and Forecasting model to generate patterns at 12 times higher resolution. According to Nvidia, CorrDiff NIM runs 500 times faster and is 100,000 times more energy efficient than traditional prediction using central processing units.

FourCastNet provides global, medium-range, lower-resolution forecasts for systems that don’t need highly localized information. For example, if a provider wants to get an idea of what might happen across the next two weeks given current conditions, FourCastNet can provide a forecast 5,000 times faster than traditional numerical weather models.
