Cloud infrastructure startup Together AI Inc. has raised $305 million in new funding at a $3.3 billion valuation.

Announced today, the Series B round was co-led by General Catalyst and Prosperity7. More than a dozen other investors also participated, including Nvidia Corp., Salesforce Ventures and former Cisco Systems Inc. Chief Executive Officer John Chambers.

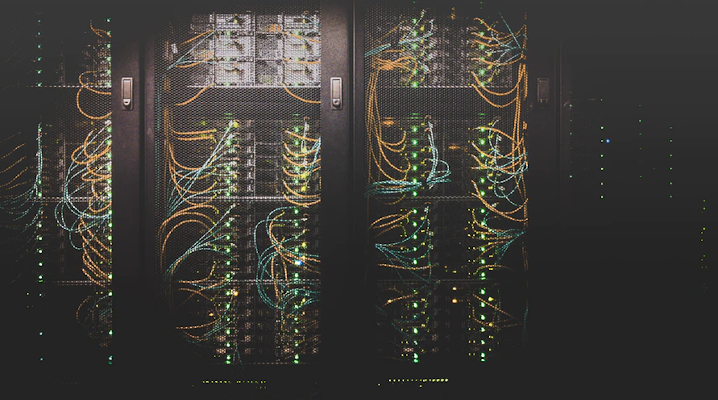

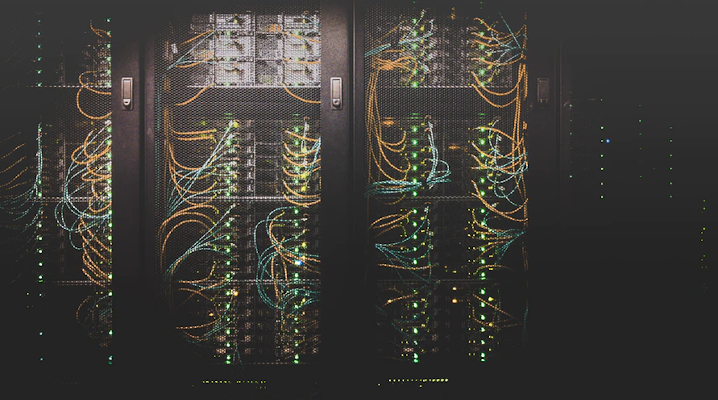

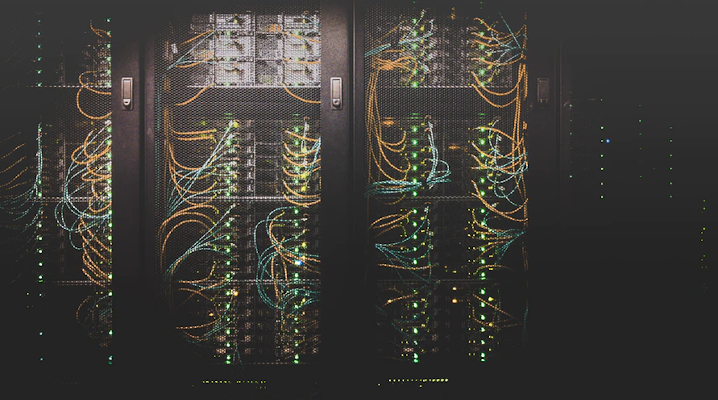

San Francisco-based Together AI operates a public cloud optimized to run AI models. It enables developers to provision server clusters containing thousands of graphics processing units. Together AI has equipped its data centers with several different Nvidia GPUs, including the Blackwell B200, the chipmaker’s latest and most capable processor.

Together AI runs customers’ AI models on a software system called the Inference Engine. According to the company, it can provide more than double the inference performance of the major public clouds. One way the software achieves that speed is by applying an algorithm called FlashAttention-3 to customers’ AI models.

Large language models analyze user input by taking into account contextual data such as past prompts. They do so using a component known as the attention mechanism. FlashAttention-3, the performance optimization algorithm used by Together AI, works by reorganizing the order in which an LLM’s attention mechanism carries out computations. Additionally, the algorithm reduces the amount of data that the LLM has to move between the underlying GPU’s logic circuits and its high-bandwidth memory, or HBM.
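To make the reordering idea concrete, here is a minimal sketch in plain Python of the tiling and running-softmax trick that the FlashAttention family of algorithms is built on. It is not FlashAttention-3 itself, which adds GPU-specific optimizations that are not modeled here, and it is not Together AI's code. The function names `naive_attention` and `tiled_attention` and the `block_size` parameter are hypothetical, chosen for illustration only. The point is that the blocked version never holds the full list of attention scores at once, yet produces the same result.

```python
import math


def naive_attention(q, keys, values):
    """Reference version: compute every score first, then softmax, then the weighted sum."""
    scores = [sum(a * b for a, b in zip(q, k)) for k in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) / total for d in range(dim)]


def tiled_attention(q, keys, values, block_size=2):
    """Blocked version: visit keys/values one block at a time with a running softmax."""
    dim = len(values[0])
    running_max = float("-inf")  # largest score seen so far
    running_sum = 0.0            # softmax denominator, relative to running_max
    acc = [0.0] * dim            # unnormalized output accumulator

    for start in range(0, len(keys), block_size):
        k_blk = keys[start:start + block_size]
        v_blk = values[start:start + block_size]
        scores = [sum(a * b for a, b in zip(q, k)) for k in k_blk]

        new_max = max(running_max, max(scores))
        # Rescale earlier partial results so they are relative to the new maximum.
        scale = math.exp(running_max - new_max)
        weights = [math.exp(s - new_max) for s in scores]

        running_sum = running_sum * scale + sum(weights)
        acc = [
            a * scale + sum(w * v[d] for w, v in zip(weights, v_blk))
            for d, a in enumerate(acc)
        ]
        running_max = new_max

    return [a / running_sum for a in acc]


# Toy inputs: one query vector, five key/value pairs.
query = [1.0, 0.5]
toy_keys = [[0.2, 0.1], [1.5, -0.3], [0.0, 2.0], [-1.0, 0.4], [0.7, 0.7]]
toy_values = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0], [-1.0, 3.0], [0.5, 0.5]]

reference = naive_attention(query, toy_keys, toy_values)
blocked = tiled_attention(query, toy_keys, toy_values, block_size=2)
```

On a GPU, each block would be small enough to stay in fast on-chip memory, which is where the reduction in traffic to high-bandwidth memory comes from. The sketch only demonstrates the arithmetic equivalence, not the speedup.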

Together AI’s Inference Engine also implements a second performance optimization technology called speculative decoding. LLMs usually generate prompt responses one token, or unit of data, at a time. Speculative decoding speeds up the workflow by producing several tokens per step: typically, a smaller and faster draft model proposes a handful of tokens ahead, and the main LLM then checks them all at once.
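The following is a minimal sketch of the greedy form of speculative decoding, assuming a small draft model that guesses ahead and a larger target model that verifies the guesses. Both "models" here are hypothetical toy functions (`target_next`, `draft_next`) invented for illustration, and nothing in the sketch reflects how Together AI's Inference Engine is actually implemented. It shows that the output matches ordinary one-token-at-a-time decoding while requiring fewer verification passes from the large model.

```python
def target_next(ctx):
    """Hypothetical 'large' model: deterministic next token from the context."""
    return (sum(ctx) * 3 + 1) % 10


def draft_next(ctx):
    """Hypothetical 'small' model: agrees with the target except at every 4th position."""
    guess = target_next(ctx)
    return (guess + 1) % 10 if len(ctx) % 4 == 0 else guess


def greedy_decode(prompt, n_new):
    """Baseline: the target model generates one token per pass."""
    out = list(prompt)
    for _ in range(n_new):
        out.append(target_next(out))
    return out


def speculative_decode(prompt, n_new, k=3):
    """Draft k tokens, verify them with the target, keep the longest agreeing prefix."""
    out = list(prompt)
    goal = len(prompt) + n_new
    target_passes = 0

    while len(out) < goal:
        # 1. The draft model proposes k tokens sequentially (assumed to be cheap).
        draft = []
        for _ in range(k):
            draft.append(draft_next(out + draft))

        # 2. The target model checks every drafted position. A real system would
        #    score all positions in one batched forward pass; this toy loop only
        #    counts it as a single pass.
        target_passes += 1
        accepted = []
        for token in draft:
            expected = target_next(out + accepted)
            if token == expected:
                accepted.append(token)
            else:
                accepted.append(expected)  # replace the first wrong guess and stop
                break
        else:
            # Every draft token matched, so the same pass yields one extra token.
            accepted.append(target_next(out + accepted))

        out.extend(accepted)

    return out[:goal], target_passes


baseline = greedy_decode([1, 2], 12)
speculative, passes = speculative_decode([1, 2], 12, k=3)
```

Because each pass of the target model yields at least one token and often several, `passes` ends up smaller than the 12 passes the baseline needs. How large the real-world gain is depends on how often the draft model's guesses are accepted.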

According to Together AI, Inference Engine enables companies to deploy both off-the-shelf and custom models on its platform.

For customers who opt to build custom LLMs, the cloud provider offers a suite of training tools called the Training Stack. Like the Inference Engine, it uses FlashAttention-3 to speed up processing. Together AI has created an open-source dataset with more than 30 trillion tokens to speed up customers’ AI training efforts.

For developers who wish to run an off-the-shelf LLM on its platform, the company provides a library of more than 200 open-source neural networks. A built-in fine-tuning tool makes it possible to customize those algorithms using an organization’s training data. Together AI says that developers can launch fine-tuning projects with one command.

“We have built a cloud company for this AI-first world — combining state-of-the-art open source models and high performance infrastructure, with frontier research in AI efficiency and scalability,” said Together AI CEO Vipul Ved Prakash.

Today’s funding announcement comes on the heels of the company reaching $100 million in annualized recurring revenue. Together AI says that its platform is used by more than 450,000 developers including engineers at Salesforce Inc., DuckDuckGo Inc. and the Mozilla Foundation.

The company will use the new capital to enhance its cloud platform. It recently secured 200 megawatts of power generation capacity to support new AI clusters. One of those upcoming clusters is set to feature 36,000 of Nvidia Corp.’s GB200 NVL72 chips, which each include two central processing units and four Blackwell B200 graphics cards.
