Artificial intelligence chip startup SambaNova Systems Inc. said today it has developed a new framework for AI-powered “deep research” that can generate in-depth reports up to three times faster, and at much lower cost, than is possible with existing research-focused systems.

The company said in a blog post that it has partnered with the AI agent developer CrewAI Inc. to create the new framework, which enables companies to develop deep research agents that can analyze even their most private data in a completely secure way.

The research agents don’t rely on Nvidia Corp.’s graphics processing units. Instead, they run on SambaNova’s own AI accelerators, which the company says deliver higher performance at much lower cost.

SambaNova, which is backed by more than $1 billion in venture funding, is a rival to Nvidia that develops high-performance computer chips that lend themselves to AI model training and inference. The chips can be accessed via the cloud or deployed on-premises via appliances supplied by the company.

Companies can opt to run their deep research workloads in the SambaNova Cloud, where the company says they’ll run three times faster than on any GPU-powered agent. Alternatively, companies that have SambaNova’s SN40L processors on-premises can do it all in-house. The agents themselves are built on open-source large language models such as Meta Platforms Inc.’s Llama 3.3 70B and DeepSeek Ltd.’s R1, which further help to reduce costs.

SambaNova explains that existing AI-based deep research tools are extremely costly because they consume 10 times, and sometimes even 100 times, as many tokens as traditional chat applications. Moreover, the company says, such tools are not always as fast as their creators claim.

One of the key ingredients of SambaNova’s deep research framework is its “Agentic Router,” which plans each request and routes it to the agent best suited to return the most accurate results. By default, the framework comes with three agents: a general search agent, a Deep Research agent and a Financial Analyst agent. Companies are free to add their own AI agents to the mix and connect them to their own private data sources.
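The routing idea can be illustrated with a small Python sketch. It is not SambaNova’s code: the `Route` and `KeywordRouter` names, the keyword lists and the stub agents are all hypothetical, and simple phrase matching stands in for whatever planning logic the real Agentic Router uses.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical agent signature: takes the user's query and returns text.
Agent = Callable[[str], str]


@dataclass
class Route:
    name: str
    agent: Agent
    # Illustrative trigger phrases; not SambaNova's actual routing logic.
    keywords: List[str] = field(default_factory=list)


class KeywordRouter:
    """Toy stand-in for a planning router: returns the first route whose
    keywords appear in the query, otherwise a default (general) route."""

    def __init__(self, routes: List[Route], default: Route) -> None:
        self.routes = routes
        self.default = default

    def select(self, query: str) -> Route:
        text = query.lower()
        for route in self.routes:
            if any(keyword in text for keyword in route.keywords):
                return route
        return self.default

    def handle(self, query: str) -> str:
        return self.select(query).agent(query)


# The three default agents, stubbed out so the example runs offline.
general = Route("general_search", lambda q: f"[search] {q}")
analyst = Route("financial_analyst", lambda q: f"[analysis] {q}",
                ["financial analysis", "valuation"])
deep = Route("deep_research", lambda q: f"[report] {q}",
             ["comprehensive report", "cite sources"])

router = KeywordRouter(routes=[deep, analyst], default=general)

# A company-specific agent over private data can be registered the same way.
router.routes.append(Route("internal_docs", lambda q: f"[internal] {q}",
                           ["our contracts"]))
```

With this setup, “summarize the latest market news about Amazon” falls through to `general_search`, while “generate a financial analysis of Amazon” goes to `financial_analyst`. A production router would more plausibly ask an LLM to classify each request; keyword matching just keeps the sketch deterministic.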

The company offered the example of a financial trader who wants a report on the latest market trends. The trader might start by entering a query such as “summarize the latest market news about Amazon.” That query is first sent to the general search agent, which might run about three search queries to find the latest news, at a cost of around 1,000 tokens.

Once the trader has that basic information, they might want to dig deeper and ask it to “generate a financial analysis of Amazon.” In this case, the query is routed to the Financial Analyst agent, which conducts more in-depth research. Because its output is far more detailed, it may use about 15 prompts to gather the information it needs, increasing token usage roughly 20-fold.

Based on that deeper analysis, the trader might then want a more comprehensive report that summarizes and cites findings from various articles. Here, the Deep Research agent kicks in, compiling information from hundreds of sources into a final report, which is cleaned up and delivered to the trader. This step might require up to 50,000 tokens, SambaNova says.
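Tallying the approximate token figures SambaNova gives for the Amazon example shows how quickly a session grows. This is a hypothetical Python sketch: the second step’s 20,000 tokens is derived by applying the “about 20 times” estimate to the first step’s 1,000 tokens, and the third step uses the 50,000-token upper bound.

```python
from typing import List, NamedTuple, Optional


class Step(NamedTuple):
    agent: str
    queries: Optional[int]  # search queries / prompts; None where no figure is given
    tokens: int


# Rough per-step figures from the Amazon example; the last one is an upper bound.
AMAZON_EXAMPLE: List[Step] = [
    Step("general_search", 3, 1_000),
    Step("financial_analyst", 15, 20 * 1_000),  # "about 20 times" the first step
    Step("deep_research", None, 50_000),        # "up to 50,000 tokens"
]


def cumulative_tokens(steps: List[Step]) -> List[int]:
    """Running total of tokens spent after each step of the session."""
    totals: List[int] = []
    running = 0
    for step in steps:
        running += step.tokens
        totals.append(running)
    return totals


for step, total in zip(AMAZON_EXAMPLE, cumulative_tokens(AMAZON_EXAMPLE)):
    print(f"{step.agent:<18} {step.tokens:>7,} tokens  (session total {total:,})")
```

Under these assumptions a full three-step session tops out at roughly 71,000 tokens, with the Deep Research step accounting for most of it.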

The company stresses that each of these steps is “lightning fast,” completing in a matter of seconds rather than the minutes similar AI-based research systems might take. Moreover, because the user remains in the loop at every step, they can ensure tokens are not wasted on inaccurate reports.
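Keeping the user in the loop amounts to a checkpoint before each costlier step. The Python sketch below is a hypothetical illustration, not part of SambaNova’s framework: `run_with_checkpoints` and the `approve` callback are invented names, and the token estimates reuse the rough figures from the Amazon example.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical plan: (agent name, estimated tokens), cheapest step first.
Plan = Sequence[Tuple[str, int]]


def run_with_checkpoints(
    plan: Plan, approve: Callable[[str, int], bool]
) -> Tuple[List[str], int]:
    """Run each step only if the user approves its estimated token cost.

    Stops at the first rejected step, so nothing is spent on it or on any
    later step in the plan.
    """
    completed: List[str] = []
    spent = 0
    for agent, estimated_tokens in plan:
        if not approve(agent, estimated_tokens):
            break
        # A real system would invoke the agent here; this sketch only records it.
        completed.append(agent)
        spent += estimated_tokens
    return completed, spent


plan = [("general_search", 1_000), ("financial_analyst", 20_000), ("deep_research", 50_000)]

# The trader is satisfied after the financial analysis and declines the full report.
done, tokens = run_with_checkpoints(plan, approve=lambda agent, est: agent != "deep_research")
print(done, tokens)  # ['general_search', 'financial_analyst'] 21000
```

Ordering the plan from cheapest to most expensive means a single “no” avoids the largest share of the token spend.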

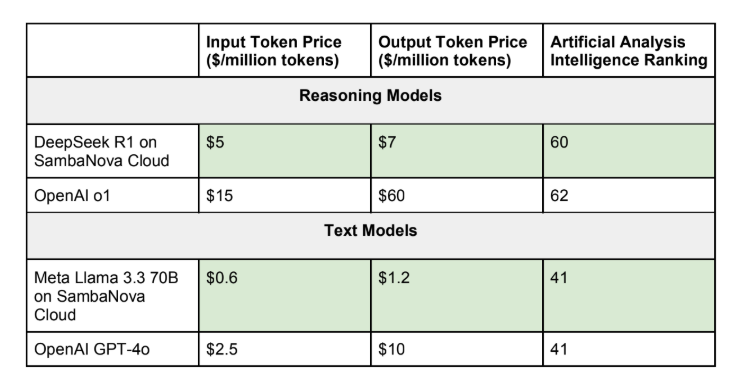

Because the research process uses so many tokens, cost is a major concern for users. That explains why SambaNova relies only on open-source LLMs, which it points out are much more affordable than proprietary alternatives.

The company says the savings can quickly add up. For a firm with 200 employees, each performing 20 deep research queries per day that average around 20,000 tokens each, that amounts to 80 million tokens every day, SambaNova says. Over the course of a year, such a company could save more than $1 million by using Llama 3.3 on SambaNova instead of OpenAI’s GPT-4o.
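The arithmetic behind SambaNova’s example can be made explicit. In this hypothetical Python sketch, the usage figures come from the article, while the 365-day year and the function names are assumptions. The article does not give the per-token prices behind the $1 million estimate, so `annual_savings` takes them as parameters rather than hard-coding any.

```python
EMPLOYEES = 200
QUERIES_PER_EMPLOYEE_PER_DAY = 20
TOKENS_PER_QUERY = 20_000
DAYS_PER_YEAR = 365  # assumption: the article does not say how many days were counted


def daily_tokens(employees: int, queries_per_day: int, tokens_per_query: int) -> int:
    """Total tokens consumed per day across the firm."""
    return employees * queries_per_day * tokens_per_query


def annual_savings(tokens_per_day: int, price_a: float, price_b: float,
                   days: int = DAYS_PER_YEAR) -> float:
    """Yearly savings in dollars, given blended prices per million tokens."""
    millions_per_year = tokens_per_day * days / 1_000_000
    return millions_per_year * (price_a - price_b)


def required_price_gap(target: float, tokens_per_day: int,
                       days: int = DAYS_PER_YEAR) -> float:
    """Per-million-token price difference needed to save `target` dollars a year."""
    return target / (tokens_per_day * days / 1_000_000)


tokens = daily_tokens(EMPLOYEES, QUERIES_PER_EMPLOYEE_PER_DAY, TOKENS_PER_QUERY)
print(f"{tokens:,} tokens per day")  # 80,000,000 tokens per day
print(f"${required_price_gap(1_000_000, tokens):.2f} per million tokens needed for $1M a year")
```

At 80 million tokens a day over 365 days, the firm processes about 29.2 billion tokens a year, so every $1 difference in blended price per million tokens is worth about $29,200 annually. Plugging actual GPT-4o and SambaNova prices into `annual_savings` lets readers check the estimate against their own usage.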

A demo of SambaNova’s deep research framework is available for companies to try out here, while enterprises looking to integrate it with their own data can get started by cloning the GitHub repository here.
