AI

Nvidia Corp. Chief Executive Jensen Huang took the stage today for the company’s annual GTC keynote with his characteristic blend of technical mastery, visionary ambition and a touch of humor.

This year’s event underscored not just how fast the AI revolution is moving but how Nvidia continues to redefine computing itself. The keynote by Huang (pictured) was a master class in scaling AI, pushing the limits of hardware, and building the future of artificial intelligence-driven enterprises.

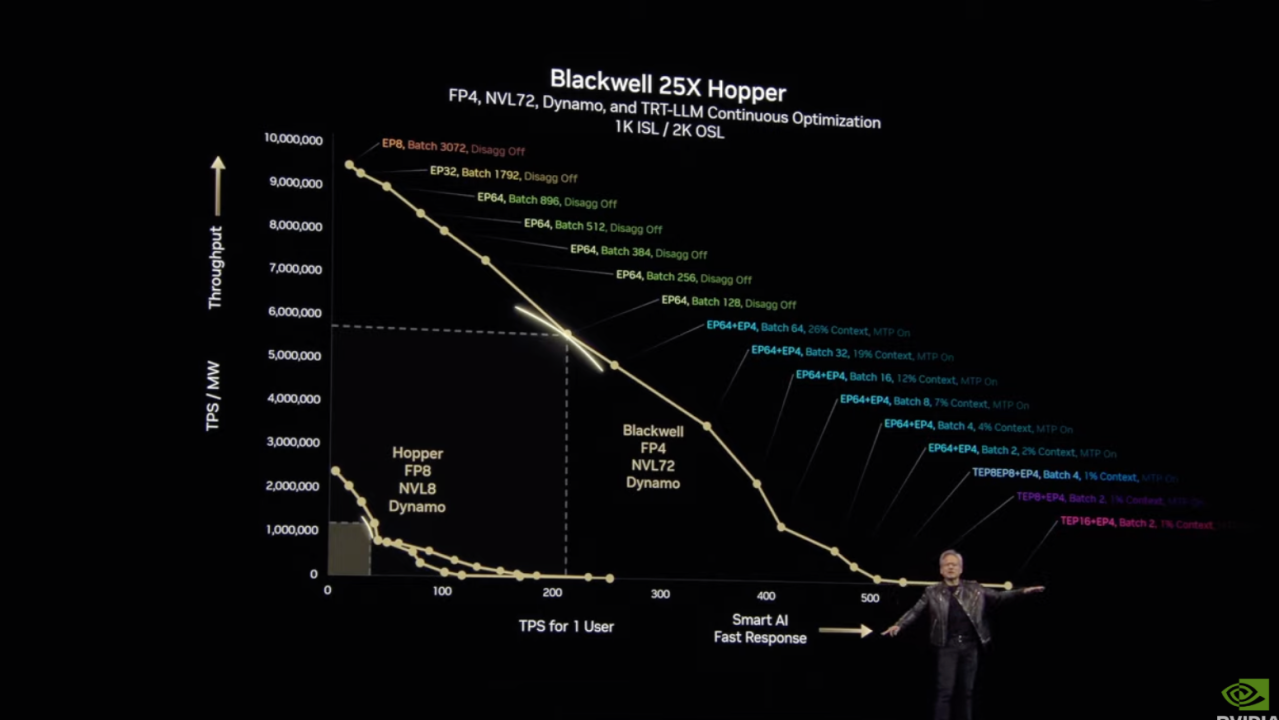

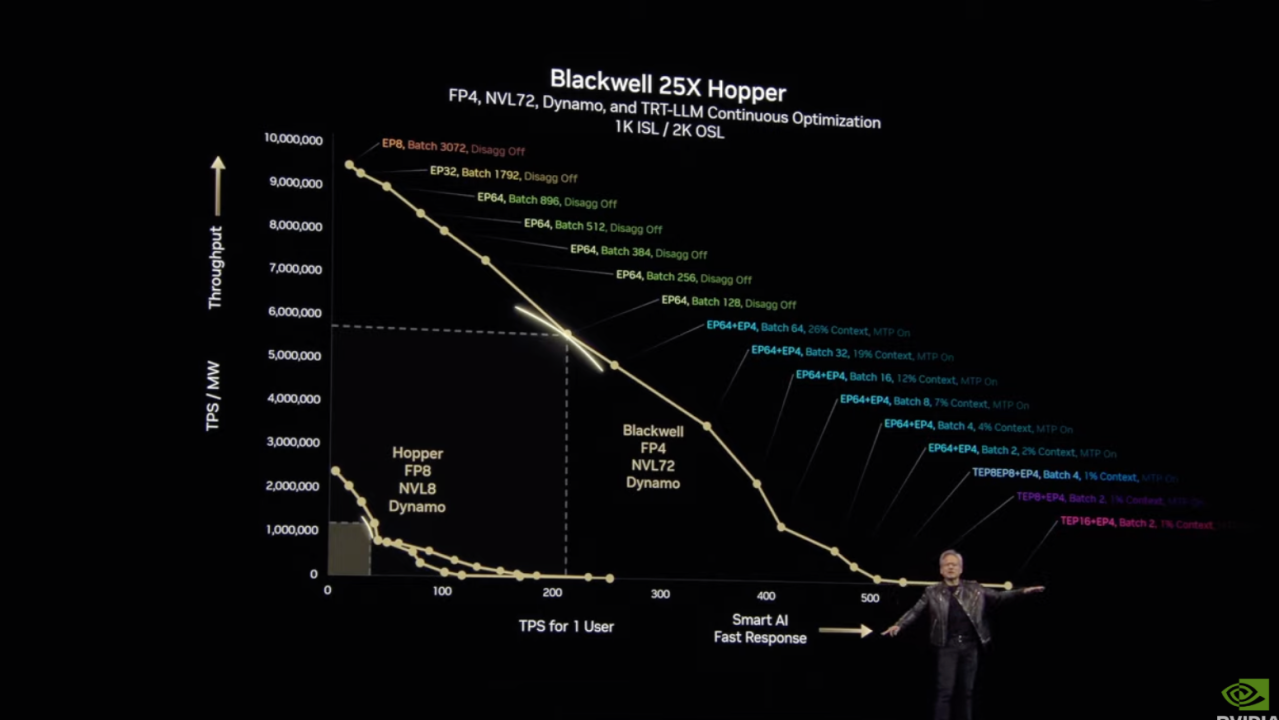

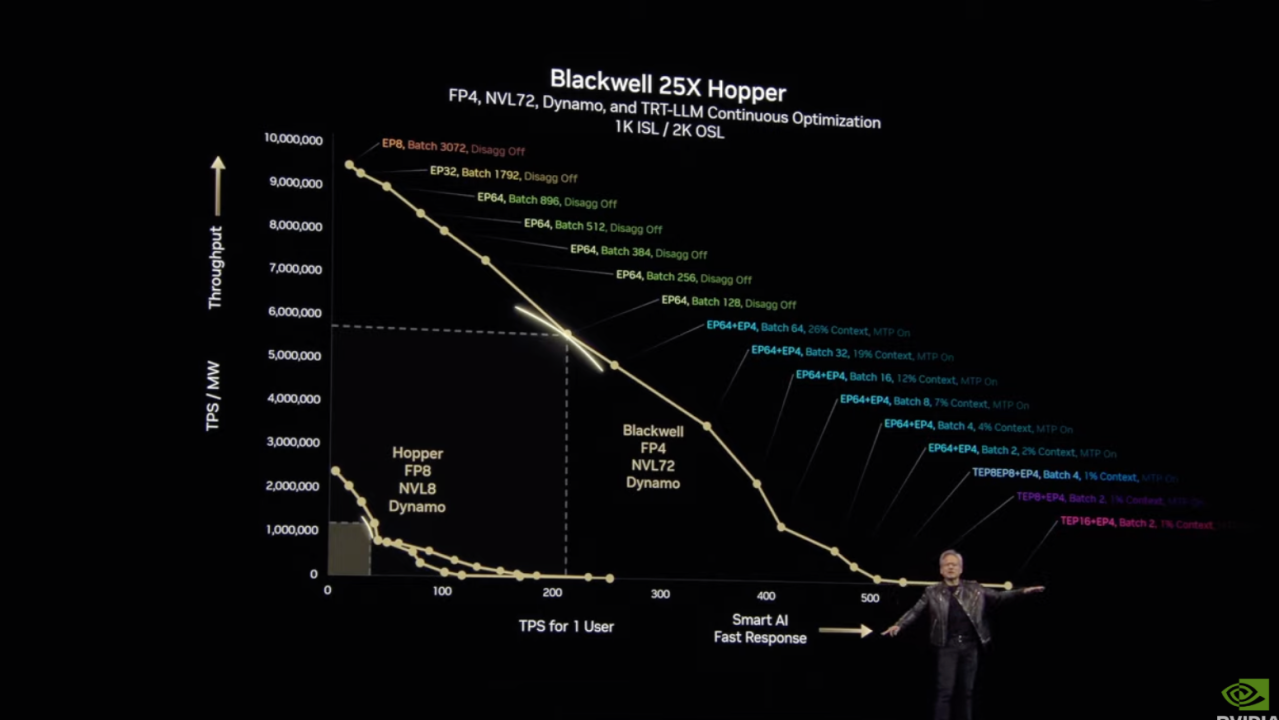

At the heart of the keynote was the Blackwell system, the latest in Nvidia’s graphics processing unit evolution. This isn’t just another generational upgrade; it represents the most extreme scale-up of AI computing ever attempted. With the Grace Blackwell NVL72 rack, Nvidia has built an architecture that brings inference at scale to new heights. The numbers alone are staggering:

The shift from air-cooled to liquid-cooled computing is a necessary adaptation to manage power and efficiency demands. This is not incremental innovation; it’s a wholesale reinvention of AI computing infrastructure.

Huang emphasized that AI inference at scale is extreme computing, with unprecedented demand for FLOPS, memory and processing power. Nvidia introduced Dynamo, an AI-optimized operating system that enables Blackwell NVL systems to achieve 40 times better performance. Dynamo represents a breakthrough in delivering operating system software for the hardware systems engineered for AI factories. This should unleash the agentic wave of applications and new levels of intelligence.

Dynamo manages three key processes:

Huang made a point of emphasizing that Nvidia is laying out a predictable annual rhythm for the evolution of its AI infrastructure products and technology, covering cloud, enterprise computing and robotics.

The roadmap includes:

Each milestone is an exponential leap forward, resetting industry KPIs for AI efficiency, power consumption, and compute scale.

Networking is the next bottleneck, and Nvidia is tackling this head-on:

As Huang pointed out, datacenters are like stadiums, requiring short-range, high-bandwidth interconnects for intra-factory communication, and long-range optical solutions for AI cloud scale.

Huang predicted that AI will reshape the entire computing stack, from processors to applications. AI agents will become integral to every business process, and Nvidia is building the infrastructure to support them.

This isn’t just about replacing humans; it’s about enabling enterprises to scale intelligence like never before.

Nvidia’s ultimate vision is to move from traditional datacenters to AI factories — self-contained, ultra-high-performance computing environments designed to generate AI intelligence at scale. This transformation redefines cloud infrastructure and makes AI an industrial-scale production process.

Huang’s new punchline, “The more you buy, the more revenue you get,” was a comedic yet poignant reminder that AI’s value is directly tied to scale. Nvidia is positioning itself as the architect of this new era, where investing in AI computing power isn’t an option — it’s an economic necessity.

Storage must be completely reinvented to support AI-driven workloads, shifting toward semantic-based retrieval systems that find data by meaning rather than by exact location or keyword, enabling smarter, more efficient data access. This transformation will define the future of enterprise storage, ensuring seamless integration with AI and next-generation computing architectures. Look for key ecosystem partners such as Dell Technologies, Hewlett Packard Enterprise and others to step up with new products and solutions for the new AI infrastructure wave. Huang highlighted Michael Dell, showcasing Dell as having a complete Nvidia-enabled set of AI products and systems.
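To make the idea of semantic-based retrieval concrete, here is a minimal sketch, not anything shown in the keynote. It assumes each stored object already has an embedding vector. The document names, the three-dimensional vectors and the `semantic_search` helper are all hypothetical, chosen only for illustration; a real system would use a learned embedding model and a vector index.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def semantic_search(query_vec, index, top_k=2):
    """Rank stored items by similarity to the query vector (hypothetical helper)."""
    scored = [(cosine_similarity(query_vec, vec), doc_id)
              for doc_id, vec in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [doc_id for _, doc_id in scored[:top_k]]


# Toy embeddings, invented for illustration only.
INDEX = {
    "gpu_cooling_report": [0.9, 0.1, 0.0],
    "quarterly_sales": [0.0, 0.2, 0.9],
    "rack_power_budget": [0.8, 0.3, 0.1],
}

# A query whose (assumed) embedding sits close to the infrastructure documents.
results = semantic_search([1.0, 0.2, 0.0], INDEX)
print(results)
```

The point of the sketch is that retrieval is driven by closeness in meaning, as encoded in the vectors, rather than by file path or exact keyword match.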

Finally, Nvidia is applying its AI leadership to robotics. Huang outlined a future where general-purpose robots will be trained in virtual environments using synthetic data, reinforcement learning and digital twins before being deployed in the real world. This marks the beginning of AI-driven automation at an industrial scale.

Huang’s GTC keynote wasn’t just about the next wave of GPUs — it was about redefining the entire computing industry. The shift from datacenters to AI factories, from programming to AI agents, and from traditional networking to AI-optimized interconnects, positions Nvidia at the forefront of the AI industrial revolution.

The Nvidia CEO has set the tone for the next decade: AI isn’t just an application; it’s the future of computing itself. As we have been saying on theCUBE Pod, AI infrastructure has to deliver the speeds, feeds and scale needed to open the floodgates for innovation in the agentic and other new AI applications that sit on top of it.
