SECURITY

Red-teaming automation startup Yrikka AI Inc. has just launched its first publicly available application programming interface after closing on a $1.5 million pre-seed funding round led by Focal and Garuda Ventures.

The startup provides API access to artificial intelligence agents that can aid red-teaming efforts aimed at identifying vulnerabilities in AI systems.

Red-teaming is a cybersecurity practice that simulates attacks on AI systems and models to uncover problems that could arise under real-world conditions. Standardized safety benchmarks and controlled model testing focus on an AI model’s accuracy and fairness. Red-teaming goes further, scrutinizing the entire lifecycle and supply chain to ensure every component is resilient.

Besides simply trying to hack AI systems, red-teaming also simulates “prompt injection attacks,” in which malicious users attempt to trick a model into bypassing its safety guardrails and outputting harmful or offensive content. By taking an adversarial stance, AI red-teaming can proactively uncover hidden weaknesses and security flaws that weren’t apparent during the testing phase.

AI red-teaming has traditionally been performed by teams of human specialists, which makes the process expensive and time-consuming. AI agents, which can automate tasks on behalf of their users with minimal supervision, are rapidly taking over key business functions at thousands of enterprises. Relying on human experts alone simply won’t scale to secure every AI system that needs to be secured.

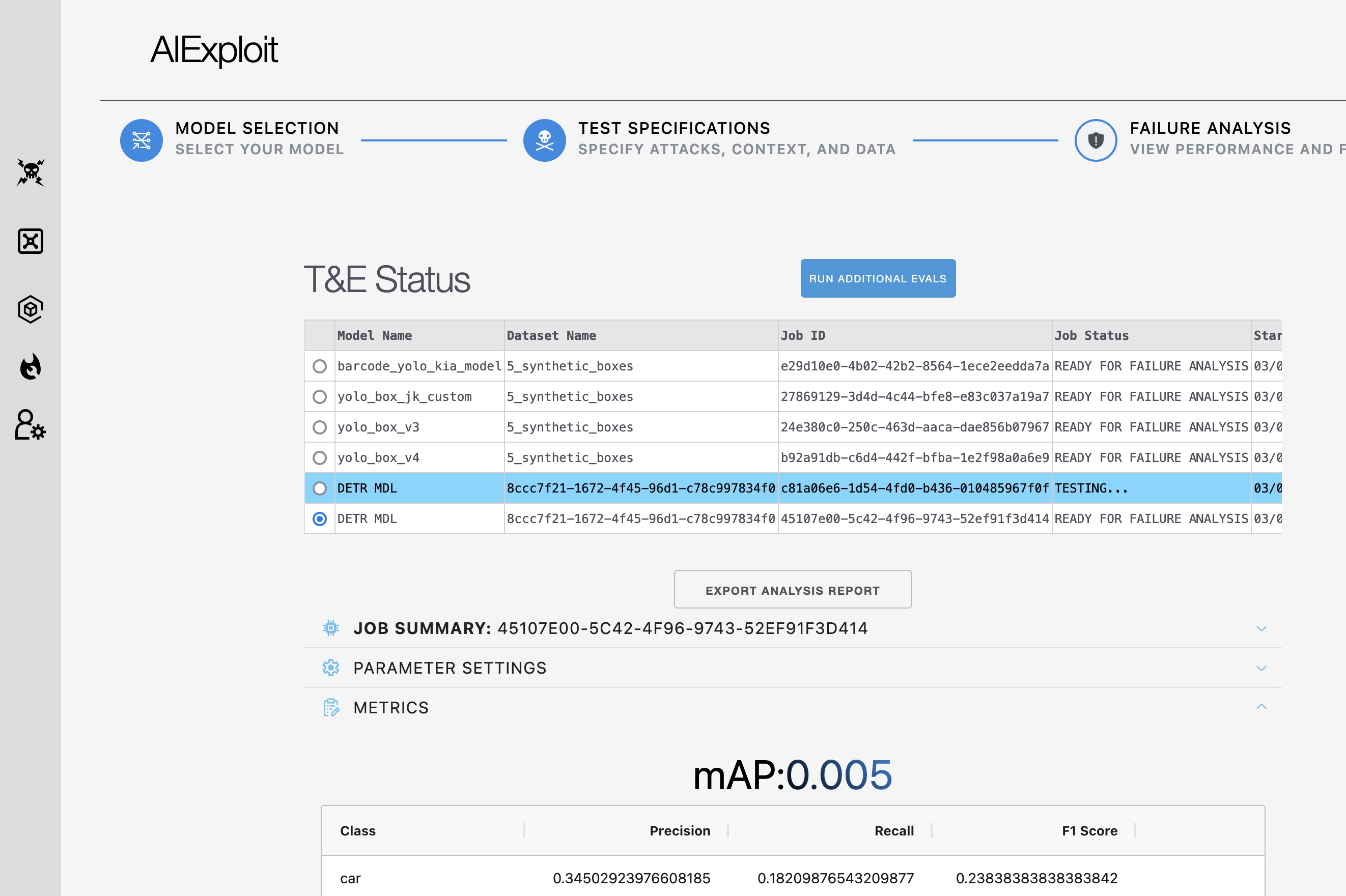

That’s where Yrikka comes in, using AI agents to assist in the red-teaming process to help rapidly ensure that models are not just functional, but also safe, reliable and resilient to changes.

The startup says it’s looking to serve industries where AI decision-making needs to be robust, such as autonomous defense systems, clinical diagnostics and industrial automation. Its platform enables what it calls “human-AI teaming,” in which humans oversee the work of AI agents. The company says this approach can reduce the time it takes to validate new models from months to as little as a few minutes.

Using its API, companies can automate the assessment of both the risk and the return on investment of any AI model, before and after it’s integrated into an application or system. Once a customer is satisfied that the model is working as expected, Yrikka continuously monitors its performance in user workflows to detect any data drift, concept drift or adversarial attacks against it.

Yrikka was founded by its Chief Executive Dr. Kia Khezeli and Chief Technology Officer John Kalantari, who previously led machine learning projects at organizations such as Google LLC, Intel Corp., the National Aeronautics and Space Administration and the Mayo Clinic.

Khezeli and Kalantari said they were inspired to create Yrikka after realizing that organizations lack the tools and expertise to build trust in their AI systems, both before and after those systems are deployed. With Yrikka, AI decision makers can assess the reliability and security of visual AI assets in minutes, then continue to monitor them in dynamic environments to improve their performance.

“Yrikka’s goal is to ensure organizations can deploy AI with confidence by providing intuitive tools that help decision makers understand when and to what extent they can rely on their AI assets,” Khezeli said.

Although it has operated under the radar until now, Yrikka has already secured a $1.9 million contract with the U.S. Department of Defense to automate the testing and evaluation of the department’s computer vision models for defense applications.

“Yrikka is building a critical suite of tools to help decision makers put these models into production with conviction,” said Arpan Punyani of Garuda Ventures. “It’s an ambitious team tackling an important problem in AI.”
