Microsoft Corp. has released three new small language models that extend its "Phi" range of artificial intelligence models with reasoning capability.

Released Wednesday, the new models are Phi-4-reasoning, Phi-4-reasoning-plus and Phi-4-mini-reasoning. They add a thinking capability that lets the models break down complex queries and reason through them efficiently. The family is designed to run locally on a PC's graphics processing unit or on a mobile device.

This release follows Microsoft's earlier Phi-3 release, which added multimodality to the compact, efficient model series.

Phi-4-reasoning is a 14 billion-parameter open-weight model that the company says rivals larger models on complex tasks. Phi-4-reasoning-plus is a more advanced version with the same parameter count. It was further trained with reinforcement learning to use about 1.5 times more tokens at inference, which delivers higher accuracy than the base model at the cost of longer response times and more compute.

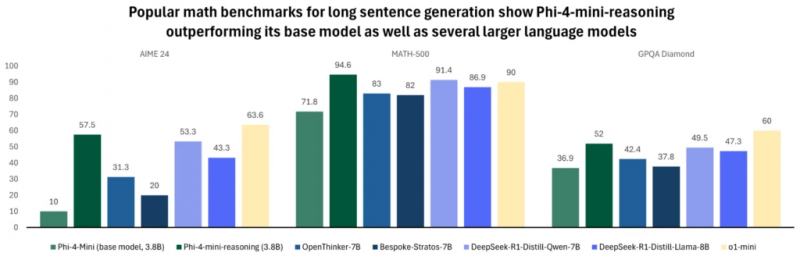

The smallest of the models, Phi-4-mini-reasoning, is designed to run on mobile and other small-footprint devices. It is an open-weight model with just 3.8 billion parameters and was optimized for mathematical reasoning with an eye toward educational applications.

“Phi-reasoning models introduce a new category of small language models. Using distillation, reinforcement learning, and high-quality data, these models balance size and performance,” the Microsoft team said in a blog post. “They are small enough for low-latency environments yet maintain strong reasoning capabilities that rival much bigger models.”

To achieve these capabilities, Microsoft trained Phi-4-reasoning on web data and curated reasoning demonstrations from OpenAI's o3-mini model. Phi-4-mini-reasoning was fine-tuned on synthetic teaching data generated by DeepSeek-R1 and trained on more than 1 million diverse math problems spanning difficulty levels from middle school to Ph.D.

Synthetic data is frequently used to train AI models by leveraging a "teacher AI" that curates and augments the training material for a "student AI." The teacher model can generate thousands, even millions, of practice math and science problems, ranging from simple to complex.

In reasoning-based scenarios, the teacher provides step-by-step solutions rather than just final answers, so the student AI learns how to solve problems, not just what the answers are. By tailoring the problems and solutions to a diverse set of mathematics, physics and science curricula, the resulting model can achieve high performance while remaining compact and efficient.
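To make the teacher-student idea concrete, here is a toy sketch of what "step-by-step" synthetic training data can look like. It is an illustration under loud assumptions, not Microsoft's pipeline: the "teacher" is a simple rule-based generator rather than a large model such as DeepSeek-R1, the problems are linear equations standing in for a real math curriculum, and every name (`teacher_generate`, `build_dataset`, `to_student_target`) is hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class TrainingExample:
    problem: str
    reasoning_steps: list[str]  # worked solution the student learns to imitate
    answer: int


def teacher_generate(rng: random.Random, difficulty: int) -> TrainingExample:
    """Hypothetical toy 'teacher': builds a*x + b = c with numbers that grow
    with difficulty, then writes out the solution one step at a time."""
    scale = 10 ** difficulty
    x = rng.randint(1, scale)
    a = rng.randint(2, 9)
    b = rng.randint(0, scale)
    c = a * x + b
    steps = [
        f"Subtract {b} from both sides: {a}x = {c - b}",
        f"Divide both sides by {a}: x = {(c - b) // a}",
    ]
    return TrainingExample(f"Solve for x: {a}x + {b} = {c}", steps, x)


def build_dataset(n: int, levels: list[int], seed: int = 0) -> list[TrainingExample]:
    """Cycle through difficulty levels so the set spans easy to hard problems."""
    rng = random.Random(seed)
    return [teacher_generate(rng, levels[i % len(levels)]) for i in range(n)]


def to_student_target(ex: TrainingExample) -> str:
    """Training target includes the reasoning, not only the final answer."""
    return "\n".join(ex.reasoning_steps + [f"Answer: {ex.answer}"])


if __name__ == "__main__":
    for ex in build_dataset(3, levels=[1, 2, 3], seed=42):
        print(ex.problem)
        print(to_student_target(ex))
        print()
```

The design choice worth noting is that the student's target string contains the intermediate steps before the answer, which mirrors the article's point that the student learns how to solve a problem rather than only memorizing what the answer is.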

Microsoft said that despite their much smaller size, Phi-4-reasoning and Phi-4-reasoning-plus outperformed OpenAI's o1-mini and DeepSeek-R1-Distill-Llama-70B on most benchmarks covering mathematical reasoning and Ph.D.-level science questions. The company added that the models could also exceed the full DeepSeek-R1 model, which weighs in at 671 billion parameters, on the AIME 2025 test, the 15-question, three-hour exam used as a qualifier for the USA Mathematical Olympiad.

The new Phi-4 models are available today on Azure AI Foundry and Hugging Face.


Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.