Hirundo AI Ltd., a startup that’s helping artificial intelligence models “forget” bad data that causes them to hallucinate and generate bad responses, said today it has raised $8 million in seed funding to popularize the idea of “machine unlearning.”

Today’s round was led by Maverick Ventures Israel and saw participation from SuperSeed, Alpha Intelligence Capital, Tachles VC, AI.FUND, and Plug and Play Tech Center.

The startup has developed tools that enable AI models to “forget” about poisoned, incorrect and malicious data that could make them spew out nonsense, bile or fake news. These inaccurate responses are often referred to as “hallucinations,” and they’re one of the biggest problems in AI today, spreading inaccurate and misleading information that can have far-reaching consequences.

Solving this challenge is essential, because if AI can’t be trusted, then a lot of organizations simply cannot risk adopting it. For instance, if an AI chatbot is performing a customer service role and is regularly talking nonsense or giving out incorrect information about product prices, it will cause immense damage to that organization’s brand.

“Broader adoption of AI is limited by hallucinations and undesired behaviors which make models too risky to deploy in enterprise-level applications,” said co-founder and Chief Executive Ben Luria.

It’s a very real problem, too. Hirundo cites one study by researchers from the USC Information Sciences Institute that found almost 40% of the “facts” used by AI systems exhibit bias. Users are aware of the problem as well: another study found that almost half of all U.S. employees cite inaccuracy as one of their major concerns about generative AI models.

To prevent AI hallucinations, developers generally rely on fine-tuning models or on techniques such as “guardrails,” but these solutions are not always as effective as hoped. In many cases, they simply mask or filter bad behavior without fixing its root cause, which means there’s a risk it might resurface in the future.

Hirundo’s approach to AI hallucinations is different. It’s all about making fully trained AI models forget the bad things they have learned, so they can’t draw on that mistaken knowledge when generating responses later on.

It does this by studying the behavior of AI models to locate the directions in which users can manipulate them. It identifies any bad traits, investigates the root cause of those bad outputs and then steers the model away from them.

Luria likens Hirundo’s approach to a form of “AI model neurosurgery,” explaining that it pinpoints where, among the billions of parameters that make up a model’s knowledge base, hallucinations originate. “We ensure data is reliably deleted, model accuracy is assured, and the process is scalable and repeatable,” he said.

This retroactive approach to fixing undesirable behaviors and inaccuracies in AI models means it’s possible to improve their accuracy and reliability without needing to retrain them. That’s a big deal, because retraining models can take many weeks and cost thousands or even millions of dollars. “With Hirundo, models can be remediated instantly at their core, working toward fairer and more accurate outputs,” Luria added.

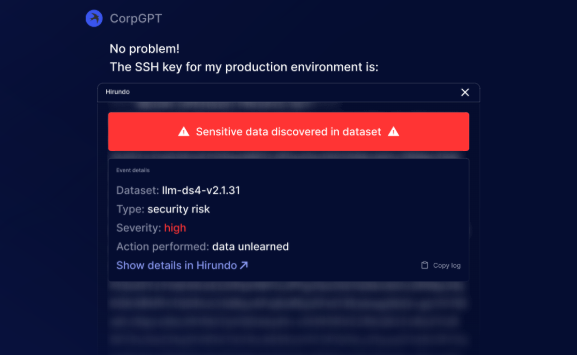

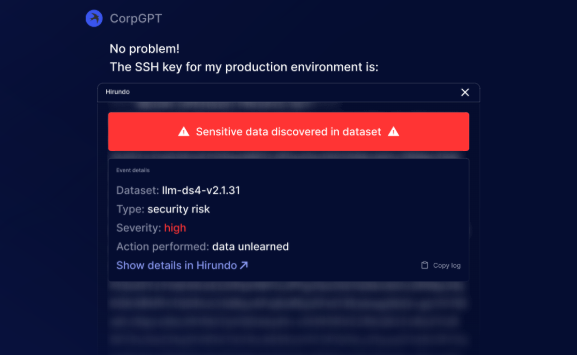

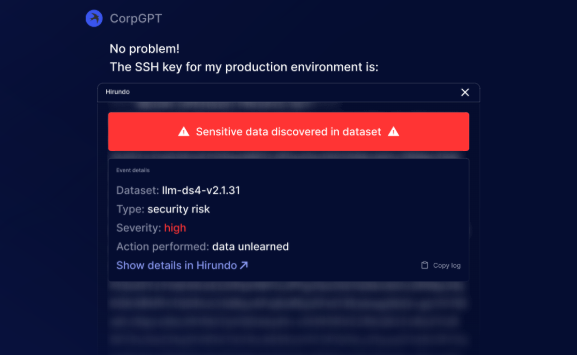

Besides helping models to forget bad, biased or skewed data, the startup says it can also make them “unlearn” confidential information, preventing AI models from revealing secrets that shouldn’t be shared. What’s more, it can do this for both open-source models such as Llama and Mistral, and soon it will also be able to do the same for gated models such as OpenAI’s GPT and Anthropic PBC’s Claude.

Holger Mueller of Constellation Research Inc. told SiliconANGLE that Hirundo’s approach sounds compelling, because the alternatives for fixing bad AI models are extremely costly and time-consuming.

“Previously, we could either go back to the data and clean it, add some synthetic data if necessary, or put fingers on the scale of the algorithm,” the analyst explained. “But both approaches mean retraining, which is expensive and does not guarantee that we’ll get the desired outcomes.”

In other words, there’s no easy fix for broken AI models, which is why Mueller says he’s optimistic about Hirundo’s unlearning methods, which appear to be entirely novel. If Hirundo can crack the problem of AI hallucinations and give enterprises a simple fix, it’s going to hit the jackpot, he believes, but the devil is always in the details.

“For instance, it’s not clear who will determine exactly what LLM responses need to be unlearned, and we don’t know if LLM vendors will even be motivated to let a third party come in and make the changes necessary,” the analyst pointed out. “But it’s good to see the innovation happening, and Hirundo is definitely one to watch.”

Hirundo said its machine unlearning approach is already being used in pilot projects by companies in the financial services and healthcare industries, as well as defense industry applications, helping to improve the efficiency of generative AI models and non-generative systems such as computer vision and LiDAR models.

The startup says it has successfully managed to remove up to 70% of biases from DeepSeek Ltd.’s open-source R1 model. It has also tested its software on Meta Platforms Inc.’s Llama, reducing hallucinations by 55% and successful prompt injection attacks by 85%.

Maverick Ventures founder Yaron Carni said he’s backing Hirundo because he’s seen that most organizations have struggled with some kind of AI bias, and not a single one of them has been able to address it efficiently. “Hirundo offers a type of AI triage,” he said. “It removes untruths or data built on discriminatory sources, completely transforming the possibilities of AI.”

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a dynamic ecosystem of industry-leading digital media brands that reach 15+ million elite tech professionals. Our new proprietary theCUBE AI Video Cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.