INFRA

Amazon Web Services Inc. is gearing up to refresh two of its custom processor families, CNBC reported late Tuesday.

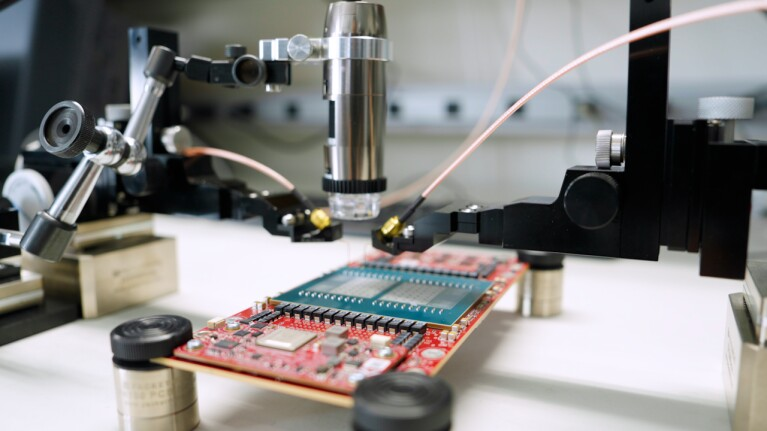

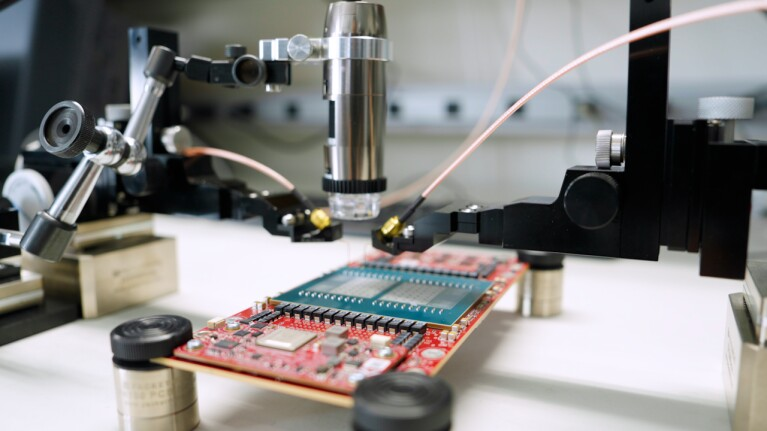

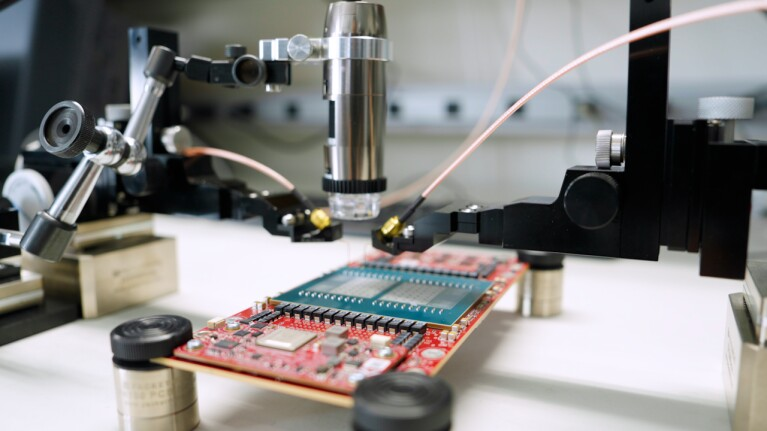

The cloud giant plans to release an updated version of its AWS Graviton4 central processing unit. It will also debut a new addition to the AWS Trainium chip series, which is optimized for training artificial intelligence models.

Both chip families are developed by AWS’ Annapurna Labs unit, which became part of the cloud provider through a 2015 acquisition. When Graviton4 debuted last June, AWS detailed that it has deployed more than 50 million Graviton-series processors to power 150-plus instance types. The Trainium series, in turn, powers an AI training cluster with 400,000 chips that AWS started building for Anthropic PBC last year.

Graviton4 is the fourth iteration of the Graviton CPU series. It features 96 cores based on Arm Holdings Inc.’s server-optimized Neoverse V2 core design. The onboard L2 cache can hold 192 megabytes of data, up from the 8 megabytes offered by the first-generation Graviton chip. AWS says that the Graviton4 contains a total of 73 billion transistors across its components.

The processor also includes cybersecurity optimizations. An Arm technology called BTI, or Branch Target Identification, blocks cyberattacks that attempt to use Graviton4’s branch prediction mechanism. This is a CPU component that saves time by completing some calculations before the results are needed. Additionally, the hardware interfaces through which Graviton4 sends data traffic to other components of AWS’ infrastructure are encrypted.

The updated version of Graviton4 that the cloud giant plans to announce will reportedly offer 600 gigabits per second, or Gbps, of network bandwidth. Current Graviton4-powered instances provide up to 50 Gbps. Each chip also offers up to 536.7 gigabytes per second of memory bandwidth, which determines the rate at which data moves to and from RAM. That metric can heavily influence application performance.
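To put the reported network figures in perspective, the short Python sketch below compares the two line rates and estimates an idealized transfer time at each. The 1,000-gigabyte payload and the `transfer_seconds` helper are hypothetical and chosen only for illustration. The calculation ignores protocol overhead and congestion, so real-world transfers would likely take longer.

```python
# Network bandwidth figures quoted in the article, in gigabits per second.
CURRENT_GBPS = 50
UPDATED_GBPS = 600


def transfer_seconds(size_gigabytes: float, link_gbps: float) -> float:
    """Idealized time to move a payload over a link, ignoring all overhead."""
    size_gigabits = size_gigabytes * 8  # 1 byte = 8 bits
    return size_gigabits / link_gbps


# Ratio between the reported and current network bandwidth.
bandwidth_ratio = UPDATED_GBPS / CURRENT_GBPS

# Hypothetical payload size, not a figure from AWS or CNBC.
payload_gb = 1000

print(f"Bandwidth ratio: {bandwidth_ratio:.0f}x")
print(f"At {CURRENT_GBPS} Gbps: {transfer_seconds(payload_gb, CURRENT_GBPS):.1f} s")
print(f"At {UPDATED_GBPS} Gbps: {transfer_seconds(payload_gb, UPDATED_GBPS):.1f} s")
```

Under these assumptions, the reported figure works out to a twelvefold increase in network bandwidth.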

According to CNBC, AWS will announce the release schedule of the updated Graviton4 later this month. Trainium3, the Amazon.com Inc. unit’s other new chip, is expected to launch by year’s end.

AWS’ current-generation AI training chip, Trainium2, provides 1.29 petaflops of performance when processing FP8 floating point numbers. Those are data units that neural networks commonly use to perform calculations. One petaflop corresponds to one quadrillion, or a thousand trillion, computations per second.

Trainium2’s processing capacity is provided by eight cores based on a design called NeuronCore-V3. They’re supported by 96 gibibytes of onboard memory. Developers may optionally combine the compute and memory resources of multiple cores into a single virtual core, an arrangement that streamlines some large-scale AI training projects.

AWS expects its next-generation Trainium3 chip to provide double the performance of Trainium2. Additionally, it’s expected to be 50% more energy-efficient.
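The Trainium3 projections can be turned into rough numbers with a little arithmetic. The Python sketch below rests on two assumptions that AWS has not confirmed: that the doubling applies to Trainium2’s 1.29-petaflop FP8 figure, and that "50% more energy-efficient" means 1.5 times the work per unit of energy. The variable names are illustrative.

```python
PETA = 10**15  # one petaflop = one quadrillion computations per second

trainium2_pflops = 1.29        # FP8 figure quoted in the article
performance_multiplier = 2.0   # "double the performance"
efficiency_gain = 0.50         # "50% more energy-efficient"

# Assumption: the 2x claim applies to the FP8 throughput figure.
trainium3_pflops = trainium2_pflops * performance_multiplier
trainium3_ops_per_second = trainium3_pflops * PETA

# Assumption: efficiency is measured as performance per watt.
relative_perf_per_watt = 1 + efficiency_gain
# Power draw relative to Trainium2 implied by the two ratios above.
relative_power = performance_multiplier / relative_perf_per_watt

print(f"Estimated Trainium3 FP8 throughput: {trainium3_pflops:.2f} petaflops")
print(f"Computations per second: {trainium3_ops_per_second:.3e}")
print(f"Implied power draw relative to Trainium2: {relative_power:.2f}x")
```

If both assumptions hold, Trainium3 would deliver about 2.58 petaflops while drawing roughly a third more power than its predecessor.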

AWS also uses other custom chips besides Graviton and Trainium processors to power its cloud platform. The Amazon unit’s Nitro Security Chip, for example, protects its servers from tampering attempts. AWS data centers also contain Nitro Cards, devices that use custom processors to manage the flow of network traffic.
