AI

From a glass-lined conference room high above Amazon’s campus, Matt Garman firmly emphasizes one word: “speed.”

With a full year under his belt, the new chief executive of Amazon Web Services Inc. (pictured) has just finished what we called a “halftime huddle”: two days of exclusive video meetings and interviews with top AWS lieutenants racing to keep the world’s largest cloud provider ahead of an artificial-intelligence boom that is redrawing the technology industry’s boundaries.

The video below is part of our editorial series AWS and Ecosystem Leaders Halftime to Re:Invent Special Report digital event. Look for other articles from the event on SiliconANGLE.

In a year when six months feels like 12, Garman, 48, is presiding over the most frenetic product cycle in AWS’ 18-year history. “The innovation pace is breathtaking,” he tells me, his voice equal parts engineer’s precision and sales executive’s assurance. “For our customers it’s no longer optional — AI is a business imperative.”

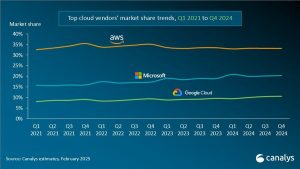

That imperative is reshaping everything from Amazon’s capital-spending plans to how startups secure their first customers and how heavily regulated health-care systems think about patient data. It is also forcing AWS — the profit engine that long subsidized Amazon’s e-commerce build-out — to defend its lead against rivals Microsoft Azure and Google Cloud while navigating antitrust scrutiny and geopolitical crosswinds.

At the midpoint of 2025, AWS finds itself in a rare position: still growing double-digit percentages on a base of more than $100 billion in annual revenue, yet facing the steepest technology transition since it commercialized cloud computing in 2006.

The catalyst is “agentic computing,” a term for software agents that can reason over huge data sets, take actions on behalf of users and — crucially — consume vast amounts of the digital “tokens” that power large-language models. Agents, Garman argues, will dwarf the impact of the software-as-a-service wave that made Salesforce Inc. and Workday Inc. household names.

“If generative AI delivered 20% efficiency gains, agents could deliver 200%,” he says. “When a developer ships three times faster, the extra compute is a rounding error.”

Speed costs money. In my interview, Garman said AWS would invest more than $30 billion in infrastructure in North Carolina and Pennsylvania, part of its commitment to spend more on AI, to satisfy surging demand for high-performance chips and the electricity to run them. Analyst estimates show AWS leading its rivals in capital spending.

Garman insists the spending is “demand-driven” rather than a build-it-and-they-will-come gamble. “Running short of capacity is painful for customers,” he said, referencing the number of regions that AWS is operating and plans to open soon — Mexico, Thailand, Chile and, most notably, a European Sovereign Cloud set to debut later this year. The fully isolated region, staffed only by European Union citizens, aims to satisfy governments nervous about U.S. surveillance and ever-expanding privacy rules such as the EU’s GDPR.

Still, the bill is staggering. Amazon’s total capital expenditures reached $71 billion last year, up 31%, according to public filings. “We’re in uncharted territory,” says Dave Vellante, chief analyst at theCUBE Research. “No company has attempted a global build-out on this scale, at this speed, with regulators watching their every move.”

To rein in costs and reduce its reliance on Nvidia, AWS is doubling down on chips it designs in-house. The company’s Graviton processors and Trainium AI accelerators promise to cut customers’ cloud bills by as much as 40%, Garman says, by squeezing more work out of each watt of power. For Anthropic, maker of the Claude chatbot, Amazon built a Trainium cluster five times larger than the one the startup used for its previous generation.

Those savings matter as AI applications devour compute cycles. “Tokens are just a proxy for compute time,” Garman said. “We have to keep driving the cost curve down — orders of magnitude.” Microsoft and Google have similar efforts — Azure’s Maia, Google’s tensor processing unit — but Amazon’s vertical integration in silicon, servers, networking and software remains its strongest economic moat, analysts say.

Paradoxically, the AI rush is reviving the most elementary but important part of Amazon’s business: persuading companies to move old databases and back-office apps to the cloud. “Less than 20% of global workloads have migrated,” Garman said, repeating a statistic AWS has quoted for years but insists is still roughly accurate. What has changed is urgency. “Enterprises realize they either get their data into a cloud format or get left behind,” he added.

Startups, meanwhile, are bypassing the old build-an-app-then-find-an-enterprise playbook. Their first customers often are Fortune 500 companies looking to unlock proprietary data through tailored AI models. To court that constituency, AWS in March quietly open-sourced Amazon Strands, an agent framework written by engineers who needed a faster way to prototype AI workflows internally. The project jumped from an eight-hour internal approval to tens of thousands of external downloads within weeks.

No sector illustrates the new dynamics better than health care and life sciences — industries long chided for lagging in technology adoption. Compliance regimes such as HIPAA, which forced hospitals to label and archive records exhaustively, now look prescient in the AI era.

Consulting firm McKinsey first pegged generative AI’s upside for pharma and medtech at $60 billion to $110 billion a year in July 2023, and by early 2024 had mapped more than 20 high-impact use cases spanning drug discovery, marketing and clinical workflows. Yet a late-summer 2024 survey of more than 100 industry executives shows the promise is still mostly theoretical: Though every respondent has run pilots and roughly a third are moving to scale, only 5% say generative AI already gives them a consistent, bottom-line edge.

“They were sitting on well-structured data before they knew AI would need it,” Garman said. Pharmaceutical companies are using AWS to discover proteins and simulate drug trials; health-care providers are experimenting with AI scribes that cut paperwork for clinicians.

Regulation remains a hurdle. The same privacy concerns that birthed the European Sovereign Cloud weigh heavily on U.S. hospital systems wary of sharing patient data with Big Tech. AWS pitches its compliance toolkits and air-gapped regions as antidotes, but skeptics note that mistakes — even rare ones — carry outsized political risk.

Internally, Garman is applying Amazon’s famous “two-pizza” team rule to AI: Every group must experiment with generative tools or justify why not. He recently told employees that “every single job inside Amazon is going to change.” Customer-support scripts, recruiter workflows, even the layout of vast fulfillment centers are undergoing AI-driven rewrites, executives say.

Garman describes this ethos as the “Why” culture, a nod to the 2025 shareholder letter from Andy Jassy, Amazon’s CEO and the former head of AWS. Ask why something is done a certain way, then ask why not do it differently. When a small engineering trio proposed open-sourcing Strands, it skipped the usual months-long gauntlet and secured approval in less than a day. “The world is moving too fast for the old playbook,” he says.

Speed, however, is a two-way street. Microsoft struck a nerve in January by bundling its Copilot AI tools deeply into Office, threatening AWS’ traditional foothold among developers with a mainstream productivity suite. Google Cloud, meanwhile, is touting the success of its Gemini models and security specialties to win financial-services deals. All three face questions about training-data provenance, model hallucinations and the ethics of unleashing autonomous agents.

According to Garman, AWS’ response rests on breadth. Bedrock, its managed service for running multiple models (including Anthropic’s Claude, Meta’s Llama and Amazon’s own Titan family), positions the company as a neutral arms dealer. Customers can switch models with a single application programming interface call, a flexibility AWS says will matter as algorithms specialize and costs diverge.

The finish line for this year’s AI sprint is Las Vegas in December, when Garman will host his first full re:Invent conference as chief executive. He promises a “lot of agentic news” and hints at partnerships to embed AI into industries “where regulation once slowed adoption.”

For now, he is focused on keeping AWS’ foot on the accelerator without veering off the fiscal road. Investors applauded Amazon’s 23% operating margin last quarter but will scrutinize capital-spending returns. “We’re thoughtful about risk,” Garman says. “But the bigger risk is failing to scale when customers need us.”

He closes out my exclusive interview with, again, the word “speed.” Outside, window washers swing over a new office tower at the AWS re:Invent building — one more sign that, in Amazon’s vision of the cloud, halftime is no time to catch one’s breath.

No matter how the rest of the year plays out, the one metric Garman cites over and over again is time. AWS is organizing to move with the reflexes of a startup while carrying the bulk of a trillion-dollar enterprise — refreshing chips, spinning up sovereign regions and open-sourcing frameworks in the span of weeks, not quarters.

In the agentic era, the winner won’t be the cloud with the biggest data centers or flashiest models, but the one that can turn a customer’s idea into production code fastest — at global scale and under tightening regulation. For Amazon, that means staying at least one sprint ahead on silicon, capacity and culture, proving that even the largest provider can still outrun the clock when the whistle blows for halftime.
